As AI becomes a bigger part of our lives, it’s important to make sure it’s used responsibly. Responsible AI means building and using AI systems in ways that are ethical, fair, and accountable. AWS provides a range of tools to help organizations build AI systems that follow these principles. In this blog post, we’ll look at generative AI and its risks, what responsible AI is, the key dimensions of responsible AI on AWS, and the tools available to support these practices.

Understanding Generative AI

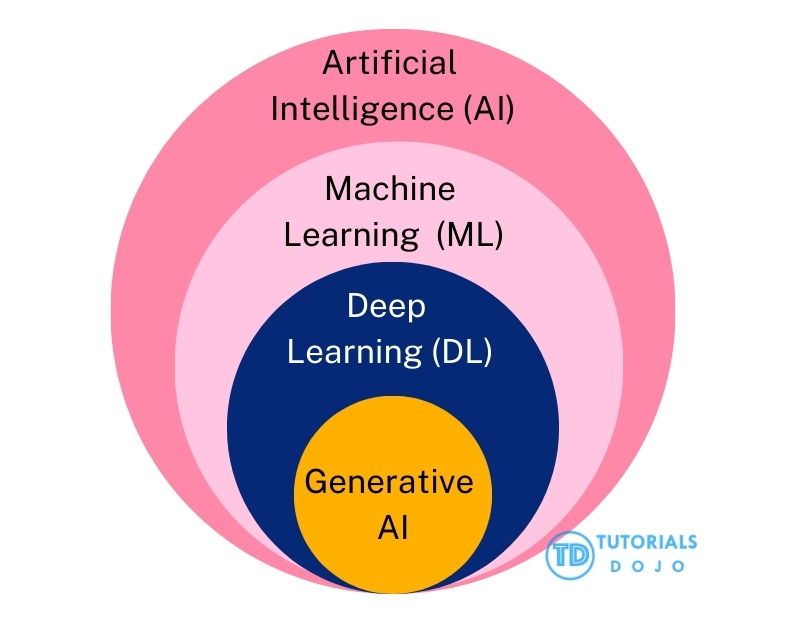

Generative AI is a subset of artificial intelligence that uses machine learning models to generate new content, including text, images, video, audio, and code. These systems, particularly large language models (LLMs), can create realistic and coherent outputs based on patterns learned from their training data. Generative AI can write stories, generate images, produce music, and simulate conversations.

Common Use Cases of Generative AI

Generative AI has a wide range of use cases across various industries. Below are some of the most common applications:

- Improve Customer Service – Generative AI can power chatbots and virtual assistants that answer customer questions and solve problems. These systems can also personalize interactions and moderate user-generated content, making customer service more efficient and responsive.

- Boost Employee Productivity – AI can help employees by automating tasks like summarizing text, generating reports, or writing code. It can quickly search through large amounts of data, saving time and helping workers focus on more important tasks.

- Enhance Creativity – Generative AI can create content such as text, images, music, and video. It helps artists and creators by suggesting new ideas or generating parts of a project, which speeds up the creative process.

- Improve Business Operations – AI can automate tasks like document processing, making business operations faster and more accurate. It can also support maintenance by predicting when equipment might fail and improve quality control in manufacturing. Generative AI can also create synthetic data to train other AI models when real data is limited.

Emerging Risks and Challenges

While generative AI has many benefits, it also comes with risks that need to be carefully managed.

- Veracity: Veracity refers to how truthful AI-generated content is. Sometimes, AI can produce content that is wrong, misleading, or completely made up. This is called “hallucination,” where the AI gives information that sounds true but is not based on facts. It’s important to make sure AI systems provide accurate and reliable content.

- Toxicity: Toxicity occurs when AI creates harmful or offensive content, such as hate speech or discriminatory language. If not controlled properly, AI can produce inappropriate content, so developers need to apply safeguards, such as training-time alignment and content filters on inputs and outputs, to prevent it.

- Intellectual Property: Intellectual property (IP) issues occur when AI creates content that is too similar to existing copyrighted material. For example, AI might inadvertently reproduce copyrighted code, which could lead to legal problems. It’s important to understand and handle these IP issues correctly when using AI.

- Data Privacy: Generative AI requires large amounts of data, and there is a risk that personal or confidential information could be used without permission. Protecting data privacy means keeping personal information secure and making sure AI systems comply with privacy laws such as the General Data Protection Regulation (GDPR). There are also concerns about whether data used to train models is stored or used in ways that could affect privacy.
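To make the privacy risk concrete, here is a minimal sketch of one common safeguard: redacting detected PII before text is stored or sent to a model. It assumes detection has already happened, for example through Amazon Comprehend’s `detect_pii_entities` call in boto3, whose `Entities` results carry `Type`, `Score`, `BeginOffset`, and `EndOffset` fields. The function name `redact_pii`, the `min_score` cutoff, and the sample detections are hypothetical illustrations, not real Comprehend output.

```python
from typing import Iterable, Mapping


def redact_pii(text: str, entities: Iterable[Mapping], min_score: float = 0.5) -> str:
    """Replace each detected PII span with a [TYPE] placeholder.

    `entities` is assumed to follow the shape of Comprehend's DetectPiiEntities
    results: dicts with "Type", "Score", "BeginOffset", and an exclusive
    "EndOffset". Spans are assumed not to overlap.
    """
    # Keep only confident detections (min_score is a hypothetical policy knob).
    kept = [e for e in entities if e["Score"] >= min_score]
    # Replace from the end of the string backwards so earlier offsets stay valid.
    for e in sorted(kept, key=lambda e: e["BeginOffset"], reverse=True):
        text = text[: e["BeginOffset"]] + f"[{e['Type']}]" + text[e["EndOffset"]:]
    return text


sample = "Contact Jane Doe at jane@example.com."
# Hypothetical detections in the shape Comprehend returns; offsets index into `sample`.
detections = [
    {"Type": "NAME", "Score": 0.99, "BeginOffset": 8, "EndOffset": 16},
    {"Type": "EMAIL", "Score": 0.99, "BeginOffset": 20, "EndOffset": 36},
]
print(redact_pii(sample, detections))  # Contact [NAME] at [EMAIL].
```

In a real pipeline, `entities` could come from `boto3.client("comprehend").detect_pii_entities(Text=text, LanguageCode="en")["Entities"]`. The score cutoff is a policy choice that trades missed PII against over-redaction.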

What is Responsible AI?

AI is becoming a key part of many industries, and with its power comes responsibility. In this context, “responsible” means that AI systems are developed, deployed, and used in ways that are ethical, transparent, and accountable. The Organisation for Economic Co-operation and Development (OECD) frames the goal as AI “that is innovative and trustworthy and that respects human rights and democratic values.” This broad definition captures the aim: AI that advances technology while respecting people’s rights and meeting ethical standards.

At its core, responsible AI means building systems that are ethical and transparent in their decision-making. A system should be able to explain how it reached a decision so that users understand why a particular outcome occurred. Responsible AI must also adhere to the key ethical principles discussed later in this post, which help ensure that AI systems do not harm individuals or society.

The responsible development of AI should be addressed throughout its entire lifecycle, which includes three main phases: development, deployment, and operation.

- Development phase – AI developers should minimize bias in the training data and make sure it accurately reflects the diversity of the people the system will serve, to avoid discrimination.

- Deployment phase – The AI system must be protected against tampering, and its environment must be secured to maintain its integrity.

- Operation phase – The AI system should be monitored continuously, with regular checks to verify that it remains fair and ethical and continues to function as intended.

Responsible AI in practice involves four key strategies:

- Define application use cases narrowly, focusing on specific scenarios or user groups so that goals are clear.

- Match processes to risk, identifying potential risks early and scaling the rigor of review and testing to the level of risk.

- Treat datasets as product specifications: the data defines what the AI system can do, so it must be accurate and relevant.

- Distinguish application performance by dataset, recognizing that the quality and composition of the data affect how the AI performs, so performance should be measured separately for each dataset.
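The last strategy can be illustrated with a small calculation. The sketch below computes accuracy separately for each dataset so that a weak slice is not hidden inside a single aggregate number. The slice names, labels, and predictions are hypothetical.

```python
from collections import defaultdict


def accuracy_by_slice(records):
    """Return {slice_name: accuracy} for (slice_name, y_true, y_pred) records.

    A slice might be a source dataset, a region, or a user group.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for slice_name, y_true, y_pred in records:
        total[slice_name] += 1
        correct[slice_name] += int(y_true == y_pred)
    return {name: correct[name] / total[name] for name in total}


# Hypothetical evaluation results drawn from two source datasets.
records = [
    ("dataset_a", 1, 1), ("dataset_a", 0, 0), ("dataset_a", 1, 1), ("dataset_a", 0, 1),
    ("dataset_b", 1, 0), ("dataset_b", 0, 0),
]
print(accuracy_by_slice(records))  # {'dataset_a': 0.75, 'dataset_b': 0.5}
```

Overall accuracy here is 4/6, about 0.67, which looks acceptable until the per-slice view shows `dataset_b` at 0.5.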

Key Dimensions of Responsible AI on AWS

AWS emphasizes six core dimensions of responsible AI, which are essential for making AI systems ethical, transparent, and trustworthy: fairness, explainability, privacy and security, robustness, governance, and transparency. Each dimension addresses critical areas in the development, deployment, and monitoring of AI systems to help ensure they align with ethical standards and regulations.

- Fairness ensures that AI systems make unbiased decisions regardless of race, gender, or other sensitive attributes. By using representative datasets, implementing fairness metrics, and rigorously testing models, AWS aims to minimize bias and promote inclusivity. For instance, Amazon Rekognition is designed to deliver consistent face-matching results across diverse demographic groups.

- Explainability provides insight into how AI models make decisions, which builds trust with users. AWS tools like Amazon SageMaker Clarify use techniques such as Shapley values to interpret predictions and support transparency through detailed reports and provenance tracking. For example, AWS HealthScribe links transcriptions with timestamps and confidence scores, so users can trace generated output back to its source.

- Privacy and Security protect user data and maintain compliance with regulations. AWS emphasizes data anonymization, encryption, and access controls to prevent breaches and misuse. Amazon Comprehend, for instance, identifies and removes Personally Identifiable Information (PII), ensuring sensitive data is safeguarded during processing.

- Robustness focuses on ensuring that AI systems function reliably across diverse, real-world scenarios. AWS promotes extensive testing, adversarial evaluation, and fail-safe mechanisms to maintain stability. For example, Amazon Titan Text is designed to answer complex, real-world questions while maintaining performance and accuracy.

- Governance establishes policies and processes to ensure AI systems align with ethical standards and regulatory requirements. AWS integrates responsible AI principles throughout development, from ideation to deployment. Services like Amazon Comprehend reflect governance by incorporating compliance verification and documentation practices from the design phase onward.

- Transparency ensures that users understand when and how AI is used. AWS advocates for tools like model cards and data sheets to document technical details, dataset origins, and model limitations. Amazon Textract AnalyzeID exemplifies transparency by disclosing AI usage and enabling user feedback to improve workflows.
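The Shapley values mentioned under Explainability give each feature a share of the difference between a prediction and a baseline prediction by averaging the feature’s marginal contribution over every coalition of the other features. SageMaker Clarify estimates them with a Kernel SHAP approach; the sketch below instead computes them exactly by brute-force enumeration, which is only practical for a handful of features. It is not Clarify’s implementation, and the toy linear model, input, and all-zeros baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for model(x) relative to model(baseline).

    A feature inside coalition S keeps its value from x; a feature outside S
    takes its baseline value. Needs O(2^n) model evaluations.
    """
    n = len(x)

    def value(coalition):
        point = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(point)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def linear_model(p):
    # Hypothetical model: 2*x0 + 3*x1 - x2
    return 2 * p[0] + 3 * p[1] - p[2]


phis = shapley_values(linear_model, x=[1, 2, 3], baseline=[0, 0, 0])
print([round(v, 6) for v in phis])  # [2.0, 6.0, -3.0]
```

For a linear model each value equals the weight times (x_i - baseline_i), and the values always sum to model(x) - model(baseline), here 5. This additivity is what makes Shapley-based attributions easy to read as a breakdown of a single prediction.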

AWS AI Service Cards are structured resources for organizations that want to implement AI solutions responsibly. Each card describes a service’s functionality, key use cases, and the machine learning techniques that power it. Beyond technical detail, the cards highlight the ethical and practical considerations for responsible deployment, which helps organizations reduce the risk of bias and support fairness, privacy, and transparency in their AI applications. Teams can use the cards to select the AI services best suited to their projects while following best practices in responsible AI design. To learn more about aligning your AI projects with responsible AI principles, explore the AWS AI Service Cards.

AWS Tools for Responsible AI

AWS offers a comprehensive set of tools and services to help build AI systems responsibly, structured across three key generative AI layers: Compute, AI Model Development, and Foundation Models as a Service. These layers address different stages of AI development, from the foundational infrastructure to high-level services.

- Compute Layer – At the foundational level, AWS provides specialized hardware for AI workloads:

- AWS Trainium: Optimized for training AI models, offering high performance and scalability at a lower cost.

- AWS Inferentia: Designed for inference tasks in production, enabling efficient and cost-effective deployment of AI models.

- AI Model Development Layer – This layer focuses on building, customizing, and managing AI models using Amazon SageMaker:

- SageMaker Clarify: A tool that helps detect potential bias during data preparation, after model training, and in production, so that teams can mitigate it. It also explains model predictions by ranking the features that most influence them. For example, SageMaker Clarify can analyze a dataset such as the Adult Census Income dataset and reveal which features (e.g., “capital gain”) have the greatest impact on predictions. This insight supports fairness and interpretability in decision-making.

- SageMaker JumpStart: Accelerates AI model development with pretrained models, pre-built solutions, and customizable templates.

- Foundation Models as a Service Layer – At the highest level, AWS provides access to foundation models through services like Amazon Bedrock:

- Model Evaluation: Amazon Bedrock supports automatic model evaluation using built-in datasets or your own custom datasets, as well as human evaluation, in which reviewers rate model responses on a test dataset you upload.

- Guardrails for Generative AI: Amazon Bedrock Guardrails help enforce responsible AI usage. For instance, you can define denied topics (e.g., investment advice) and apply content filters that suppress harmful content such as hate speech or violence. Configurable filter strengths help keep AI outputs aligned with ethical guidelines and organizational policies.
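SageMaker Clarify’s pre-training bias report includes metrics such as Class Imbalance (CI) and Difference in Proportions of Labels (DPL). The sketch below reimplements those two formulas in plain Python so the arithmetic is visible. CI = (n_a - n_d) / (n_a + n_d) compares the sizes of the advantaged facet a and the disadvantaged facet d, and DPL = q_a - q_d compares their shares of positive labels. This is not Clarify’s code (in practice you would run a Clarify processing job through the SageMaker Python SDK’s `clarify` module), and the facet values and labels are made up.

```python
def class_imbalance(facets, disadvantaged):
    """CI = (n_a - n_d) / (n_a + n_d); ranges from -1 to 1, and 0 means balanced.

    Every facet value other than `disadvantaged` is treated as the advantaged facet.
    """
    n_d = sum(1 for f in facets if f == disadvantaged)
    n_a = len(facets) - n_d
    return (n_a - n_d) / (n_a + n_d)


def difference_in_label_proportions(facets, labels, disadvantaged, positive=1):
    """DPL = q_a - q_d, where q is the share of positive labels within a facet."""
    pos_a = n_a = pos_d = n_d = 0
    for facet, label in zip(facets, labels):
        if facet == disadvantaged:
            n_d += 1
            pos_d += int(label == positive)
        else:
            n_a += 1
            pos_a += int(label == positive)
    return pos_a / n_a - pos_d / n_d


# Hypothetical training data: a sensitive attribute and a binary label.
facets = ["male"] * 6 + ["female"] * 4
labels = [1, 1, 1, 0, 0, 0] + [1, 0, 0, 0]
print(class_imbalance(facets, "female"))  # 0.2
print(difference_in_label_proportions(facets, labels, "female"))  # 0.25
```

A DPL of 0.25 means the positive label occurs 25 percentage points more often for the advantaged facet in the training data, a gap worth investigating before a model learns it.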
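Denied topics and content filters in Amazon Bedrock Guardrails are set when a guardrail is created. The sketch below builds a request for the `create_guardrail` operation of the boto3 `bedrock` client, with an investment-advice denied topic and hate and violence filters; the filter strengths (`NONE`, `LOW`, `MEDIUM`, `HIGH`) act as the configurable thresholds. The field names reflect our understanding of the API and should be checked against the current boto3 reference. The guardrail name, topic definition, example prompt, and blocked-message text are all hypothetical.

```python
def build_guardrail_request(name):
    """Build keyword arguments for a Bedrock CreateGuardrail request (field names assumed)."""
    return {
        "name": name,
        "description": "Blocks investment advice and harmful content.",
        "topicPolicyConfig": {
            "topicsConfig": [
                {
                    # Hypothetical denied topic, echoing the investment-advice example.
                    "name": "InvestmentAdvice",
                    "definition": "Recommendations about investing money or choosing financial products.",
                    "examples": ["Which stocks should I buy this year?"],
                    "type": "DENY",
                }
            ]
        },
        "contentPolicyConfig": {
            "filtersConfig": [
                # Strengths are set separately for user inputs and model outputs.
                {"type": "HATE", "inputStrength": "HIGH", "outputStrength": "HIGH"},
                {"type": "VIOLENCE", "inputStrength": "HIGH", "outputStrength": "MEDIUM"},
            ]
        },
        "blockedInputMessaging": "Sorry, I can't help with that request.",
        "blockedOutputsMessaging": "Sorry, I can't provide that response.",
    }


def create_guardrail(name):
    # Imported here so the request builder can be used and tested without AWS access.
    import boto3

    client = boto3.client("bedrock")
    return client.create_guardrail(**build_guardrail_request(name))


request = build_guardrail_request("support-bot-guardrail")
```

Once a guardrail exists, its identifier and version can be referenced when invoking a model, so the same policy applies to every request rather than relying on each prompt to be well behaved.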

Final Remarks

The rapid evolution of AI, particularly generative AI, presents both immense opportunities and significant challenges. While AI can transform industries by enhancing customer service, boosting productivity, and driving creativity, it also brings risks related to accuracy, ethical use, and data privacy. Addressing these challenges requires a thoughtful and responsible approach.

AWS provides a robust framework and tools to help ensure that AI systems are developed, deployed, and operated responsibly. By adhering to the core principles of fairness, explainability, privacy and security, robustness, governance, and transparency, organizations can build AI solutions that are ethical, accountable, and aligned with societal values. AWS services such as SageMaker Clarify and Amazon Bedrock, along with resources like AWS AI Service Cards, offer practical guidance for implementing responsible AI practices.

As organizations continue to embrace AI, prioritizing responsible development is essential. By using the tools and frameworks discussed in this post, teams can harness the power of AI while ensuring their solutions are trustworthy and beneficial to society. Responsible AI is not just about compliance; it’s about building systems that people can trust. AWS is committed to supporting organizations on this journey, empowering them to innovate responsibly and sustainably.

References

https://aws.amazon.com/ai/responsible-ai/

https://docs.aws.amazon.com/sagemaker/latest/dg/whatis.html

https://docs.aws.amazon.com/sagemaker/latest/dg/model-monitor.html

https://aws.amazon.com/ai/responsible-ai/resources/#service