Last updated on July 17, 2024

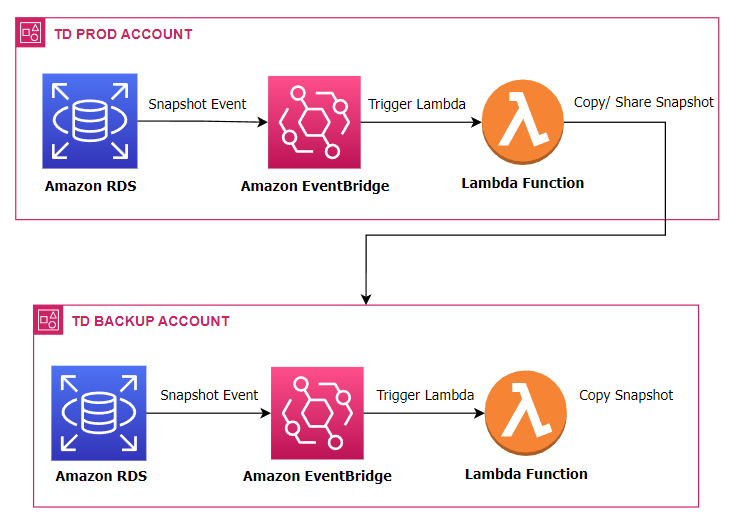

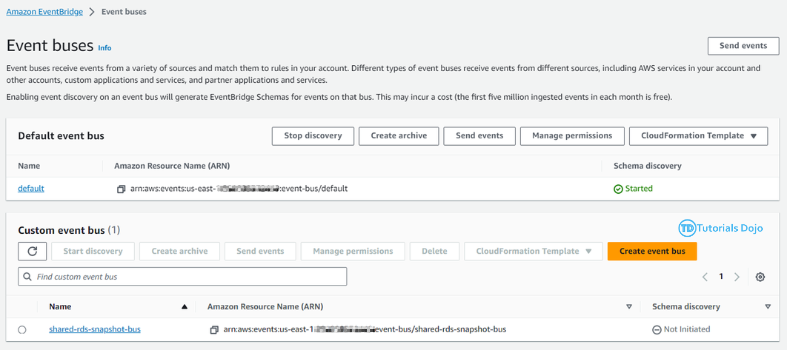

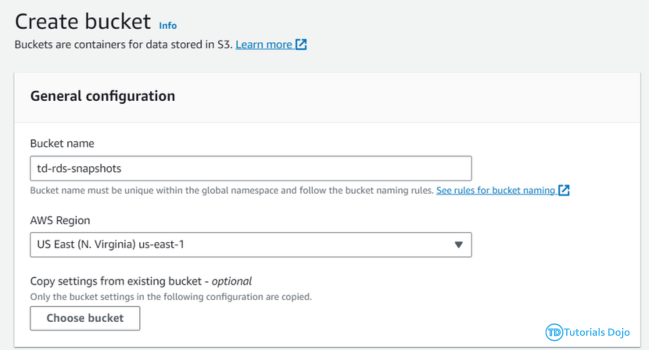

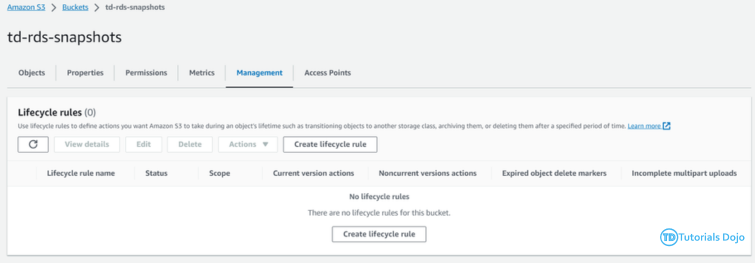

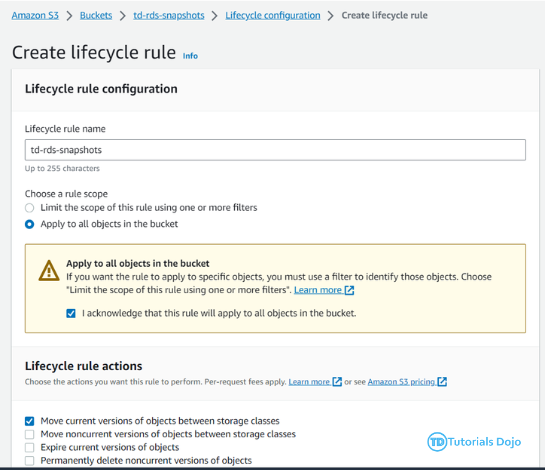

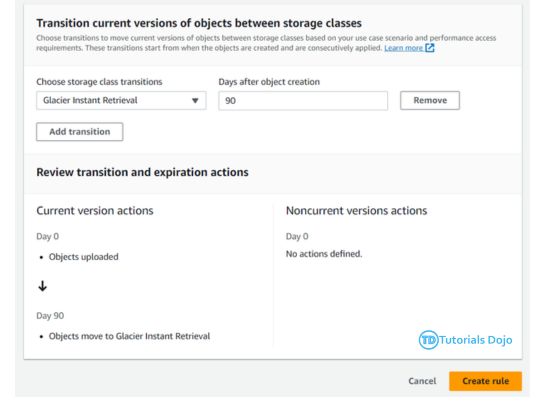

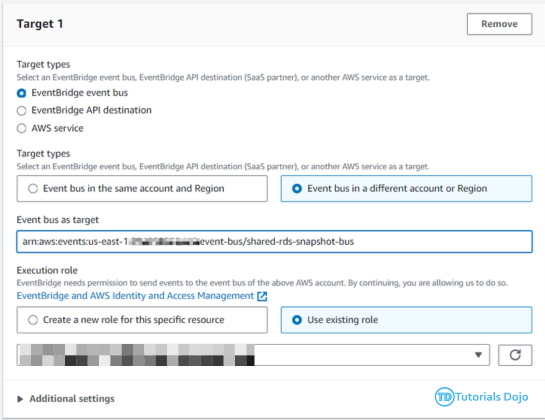

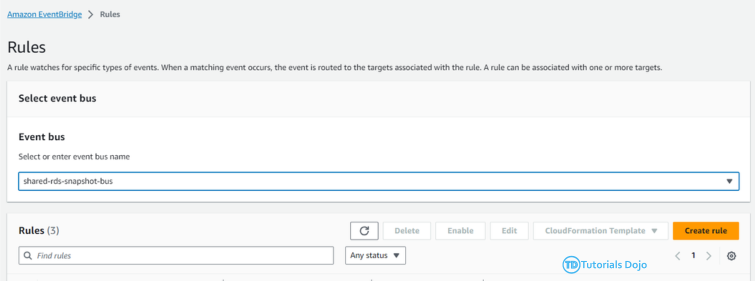

The Automated Daily RDS Export ensures that daily snapshots of Amazon RDS instances are created and made shareable. RDS takes automated snapshots every day, but automated snapshots cannot be shared with another account directly. To address this, we copy each automated snapshot to a manual snapshot and share the copy with a designated backup account. The process is automated with AWS Lambda functions and Amazon EventBridge: EventBridge rules react to the daily RDS snapshot events and invoke Lambda functions that copy and share the snapshots. As a result, a shareable snapshot is created every day without manual intervention.

Automating this has several benefits for managing database backups. It reduces the risk of human error and ensures that backups are made consistently. It saves time and lets teams focus on other work. Shareable snapshots make it easier to recover data quickly after a disaster. Storing backups in a separate account also improves security, reducing the risk of data loss or corruption if the production account is affected.

Step 1: On the backup account, create a Lambda function that copies the newly shared RDS snapshot to a different region.

Function name: td-shared-rds-snapshot (replace with your desired name)

Runtime: Python 3.9

Make sure to replace the following:

Step 2: Attach this policy to the Lambda function's execution role. Name it td-shared-rds-snapshot (replace with your desired function name).

Step 3: Create an event bus.

Note: According to the AWS documentation, an RDS event for a snapshot being shared with your account is currently unavailable. Therefore, we use a cross-account event bus in EventBridge instead. An EventBridge rule in the source account forwards snapshot copy events to the backup account's event bus, and a rule on that bus invokes the Lambda function in the backup account.

Step 4: Under the created event bus (td-shared-rds-snapshot-bus), create an Amazon EventBridge (CloudWatch Events) rule that triggers the Lambda function.

Name: td-shared-rds-snapshot (replace with your desired name)

Description: Copy the shared RDS Snapshot to a different region.

Step 5: Copy the ARN of the created event bus. You will need it later in the source (PROD) account.

Backup Account:

For cost management, it is recommended to keep only one snapshot at the end of each month, which leaves 12 snapshots per year. Once a year, these 12 RDS snapshots are exported to Amazon S3 and moved to S3 Glacier with a lifecycle rule.

Step 1: Create a Lambda function that keeps only one RDS snapshot at the end of each month.

Function name: td-keep-one-rds-snapshot-each-month

Runtime: Python 3.9

Step 2: Add this policy to the Lambda function. Name it td-move-rds-snapshots-to-s3-glacier (replace with your desired name).

Step 3: Create a CloudWatch Events (EventBridge) rule that runs this function every month.

Step 4: Create an AWS KMS key, which is needed in Step 7. Exporting RDS snapshots to Amazon S3 requires a KMS key even if the snapshots are not encrypted. Make sure the KMS key is in the same region as the RDS snapshots. KMS console: https://us-east-1.console.aws.amazon.com/kms/home?region=us-east-1#/kms/keys

Step 5: Create an Amazon S3 bucket.

Note: Make sure the S3 bucket is in the same region as the RDS snapshots.

Step 6: Once the bucket is created, go to Management → Create lifecycle rule. Follow these configurations:

Once done, click the Create rule button.

Step 7: Create a Lambda function that moves the 12 RDS snapshots to Amazon S3 Glacier every year.

Function name: td-move-rds-snapshots-to-s3-glacier-yearly (replace with your desired name)

Runtime: Python 3.9

Note: Make sure to replace the following:

Step 8: Add these policies to the Lambda function. Create another policy and name it td-pass-role (replace with your desired name).

Step 9: Create a CloudWatch Events (EventBridge) rule that runs this function every year.

PROD Account:

Step 1: Create a Lambda function that copies the automated (system) snapshots so that the copies can be shared.

Function name: td-copy-system-rds-snapshot (replace with your desired name)

Runtime: Python 3.9

Make sure to replace the following:

Step 2: Add these policies to the Lambda function.

Step 3: Use Amazon EventBridge (CloudWatch Events) to trigger this function.

Name: td-copy-system-rds-snapshot (replace with your desired name)

Description: Create a copy of the automatically generated RDS snapshot.

Step 4: Create a Lambda function that shares the new RDS snapshot with the backup account.

Step 5: Add these policies to the Lambda function.

Step 6: Use Amazon EventBridge (CloudWatch Events) to trigger this function.

Name: td-share-rds-snapshot (replace with your desired name)

Description: Share the RDS Snapshot with the Backup Account

Step 7: Add the custom event bus created in the Backup Account (the ARN copied in Step 5 of the Backup Account setup) as a target of this rule:

In summary, the Automated Daily RDS Export is an efficient way to manage database backups. Automated RDS snapshots cannot be shared directly, so each one is copied and the copy is shared with a backup account. Amazon EventBridge triggers the AWS Lambda functions that do this work every day. Automating the process reduces mistakes, keeps backups consistent, and frees teams for other tasks. Shareable snapshots make it easier to recover data quickly after a disaster, and storing backups in a separate account improves security. Overall, this solution provides a reliable and efficient way to manage database backups, enhancing both security and data recovery.

Implementation Steps

Backup Account

```python
import time

import boto3


def lambda_handler(event, context):
    try:
        # Wait for 10 seconds so the RDS snapshot share has time to take effect.
        # Set the Lambda timeout above 10 seconds (the default timeout is 3 seconds).
        time.sleep(10)

        # Source region (where the snapshot is shared with you) and the account that shares it
        source_region = 'us-east-2'  # Replace with the actual source region
        source_account_id = 'YOUR_SOURCE_ACCOUNT_ID'  # Replace with the source (PROD) AWS account ID

        # Target region (where you want to copy the snapshot)
        target_region = 'us-east-1'  # Replace with the desired target region

        # Initialize the RDS clients for the source and target regions
        rds_source_region = boto3.client('rds', region_name=source_region)
        rds_target_region = boto3.client('rds', region_name=target_region)

        # List the snapshots shared with this account in the source region.
        # MaxRecords is limited to 100 per call, so use a paginator to cover all of them.
        snapshots = []
        paginator = rds_source_region.get_paginator('describe_db_snapshots')
        for page in paginator.paginate(SnapshotType='shared', IncludeShared=True, IncludePublic=False):
            snapshots.extend(page['DBSnapshots'])

        # Keep only available snapshots shared by the source account
        snapshots = [
            s for s in snapshots
            if s['Status'] == 'available' and f':{source_account_id}:' in s['DBSnapshotArn']
        ]

        if not snapshots:
            return {
                'statusCode': 404,
                'body': 'No shared snapshots found for the specified source region and account.'
            }

        # Sort the snapshots by creation time (most recent first)
        snapshots.sort(key=lambda x: x['SnapshotCreateTime'], reverse=True)

        # A shared snapshot must be referenced by its ARN when it is copied
        source_snapshot_arn = snapshots[0]['DBSnapshotArn']
        source_snapshot_identifier = snapshots[0]['DBSnapshotIdentifier']

        # Build the target identifier (colons are not allowed in snapshot identifiers)
        target_snapshot_identifier = 'copy-' + source_snapshot_identifier.replace(':', '-')

        # Copy the shared snapshot from the source region (us-east-2) to the target region (us-east-1)
        copy_response = rds_target_region.copy_db_snapshot(
            SourceDBSnapshotIdentifier=source_snapshot_arn,
            TargetDBSnapshotIdentifier=target_snapshot_identifier,
            SourceRegion=source_region
        )

        # Get the identifier of the newly created snapshot from the copy response
        new_snapshot_identifier = copy_response['DBSnapshot']['DBSnapshotIdentifier']

        return {
            'statusCode': 200,
            'body': f'Shared snapshot "{source_snapshot_identifier}" is now copying to {target_region} region as "{new_snapshot_identifier}".'
        }

    except Exception as e:
        return {
            'statusCode': 500,
            'body': f'Error: {str(e)}'
        }
```

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "rds:DescribeDBSnapshots",
        "rds:CopyDBSnapshot",
        "rds:ModifyDBSnapshotAttribute"
      ],
      "Resource": "*"
    }
  ]
}
```

PROD Account: “”

Backup Account: “”

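```json
{
  "Version": "2012-10-17",
  "Statement": [{
    "Sid": "sid1",
    "Effect": "Allow",
    "Principal": {
      "AWS": "arn:aws:iam::54645683453:root"
    },
    "Action": "events:PutEvents",
    "Resource": "arn:aws:events:us-east-1:54645683453:event-bus/td-shared-rds-snapshot-bus"
  }]
}
```

Replace td-shared-rds-snapshot-bus with your event bus name. In Principal, use the PROD (source) account ID. In Resource, use the region and Backup Account ID that own the event bus.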
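You can also script Step 3 instead of using the console. The sketch below builds the same kind of resource policy. It then optionally applies the policy with boto3, using the EventBridge client's `create_event_bus` and `put_permission` calls. The helper names (`build_bus_policy`, `create_bus_with_policy`), the statement ID, and the account IDs are hypothetical placeholders. It assumes you run `create_bus_with_policy` with credentials for the Backup Account.

```python
import json


def build_bus_policy(source_account_id, bus_arn):
    """Resource policy letting the source (PROD) account put events on the bus."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "AllowSourceAccountPutEvents",  # hypothetical statement ID
            "Effect": "Allow",
            "Principal": {"AWS": f"arn:aws:iam::{source_account_id}:root"},
            "Action": "events:PutEvents",
            "Resource": bus_arn,
        }],
    }


def create_bus_with_policy(bus_name, source_account_id, region="us-east-1"):
    """Create the custom event bus and attach the policy (run in the Backup Account)."""
    import boto3  # imported here so build_bus_policy can be used without boto3

    events = boto3.client("events", region_name=region)
    bus_arn = events.create_event_bus(Name=bus_name)["EventBusArn"]
    events.put_permission(
        EventBusName=bus_name,
        Policy=json.dumps(build_bus_policy(source_account_id, bus_arn)),
    )
    return bus_arn
```

For example, `create_bus_with_policy("td-shared-rds-snapshot-bus", "<PROD account ID>")` returns the bus ARN that Step 5 asks you to copy.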

```json
{
  "source": ["aws.rds"],
  "detail-type": ["RDS DB Snapshot Event"],
  "detail": {
    "SourceType": ["SNAPSHOT"],
    "EventID": ["RDS-EVENT-0197"]
  }
}
```
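Step 4 can also be done programmatically. The following is a minimal sketch that assumes the bus and rule names used in this article and a placeholder Lambda function ARN. The helper name `create_rule_with_lambda_target` and the target ID are hypothetical. The function creates the rule on the custom bus with the event pattern above and adds the Lambda function as a target. It also grants EventBridge permission to invoke the function, which the console normally does for you.

```python
import json

# Same pattern as the rule above: RDS snapshot events with ID RDS-EVENT-0197
EVENT_PATTERN = {
    "source": ["aws.rds"],
    "detail-type": ["RDS DB Snapshot Event"],
    "detail": {"SourceType": ["SNAPSHOT"], "EventID": ["RDS-EVENT-0197"]},
}


def create_rule_with_lambda_target(rule_name, bus_name, function_arn, region="us-east-1"):
    import boto3  # imported lazily; requires AWS credentials for the Backup Account

    events = boto3.client("events", region_name=region)
    lambda_client = boto3.client("lambda", region_name=region)

    # Create (or update) the rule on the custom event bus
    rule_arn = events.put_rule(
        Name=rule_name,
        EventBusName=bus_name,
        EventPattern=json.dumps(EVENT_PATTERN),
        State="ENABLED",
        Description="Copy the shared RDS Snapshot to a different region.",
    )["RuleArn"]

    # Point the rule at the copy Lambda function
    events.put_targets(
        Rule=rule_name,
        EventBusName=bus_name,
        Targets=[{"Id": "copy-shared-snapshot", "Arn": function_arn}],
    )

    # Allow only this rule to invoke the function
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    return rule_arn
```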

Deletion of RDS Snapshots (Backup Account):

```python
from datetime import datetime, timedelta, timezone

import boto3


def lambda_handler(event, context):
    # Region where the snapshots to clean up are stored
    target_region = 'us-east-2'  # Replace with your region

    # Initialize the RDS client for that region
    # (delete_db_snapshot has no region argument; the client's region is used)
    rds = boto3.client('rds', region_name=target_region)

    # Maximum number of snapshots to keep for each month
    max_snapshots_to_keep = 1

    # Calculate the last day of the previous month (snapshot times are in UTC)
    current_date = datetime.now(timezone.utc)
    last_day_of_previous_month = current_date.replace(day=1) - timedelta(days=1)
    previous_month = last_day_of_previous_month.strftime('%Y-%m')  # e.g., 2023-08

    # List all manual snapshots in the account (paginated; at most 100 per call).
    # Note: this covers every DB instance in the region, not a single instance.
    snapshots = []
    paginator = rds.get_paginator('describe_db_snapshots')
    for page in paginator.paginate(SnapshotType='manual'):
        snapshots.extend(page['DBSnapshots'])

    # Identify the available snapshots created during the previous month
    previous_month_snapshots = [
        snapshot for snapshot in snapshots
        if snapshot['Status'] == 'available'
        and snapshot['SnapshotCreateTime'].strftime('%Y-%m') == previous_month
    ]

    # Sort by creation time (most recent first) so the end-of-month snapshot comes first
    previous_month_snapshots.sort(key=lambda x: x['SnapshotCreateTime'], reverse=True)

    # Keep the most recent snapshot and delete the rest of that month's snapshots
    snapshots_to_keep = previous_month_snapshots[:max_snapshots_to_keep]
    snapshots_to_delete = previous_month_snapshots[max_snapshots_to_keep:]

    for snapshot in snapshots_to_delete:
        rds.delete_db_snapshot(DBSnapshotIdentifier=snapshot['DBSnapshotIdentifier'])

    return {
        'statusCode': 200,
        'body': f'Kept {len(snapshots_to_keep)} snapshots from {previous_month} and deleted {len(snapshots_to_delete)} snapshots in region {target_region}.'
    }
```
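This step deletes snapshots, so it can help to check the retention rule from Step 1 offline before deploying. That rule keeps only the newest snapshot created in the previous month and deletes the rest. The sketch below is a hypothetical pure-Python helper, `split_previous_month`, that applies that rule. It takes plain dictionaries shaped like the `DBSnapshots` entries returned by `describe_db_snapshots`. The sample snapshot names are made up.

```python
from datetime import datetime, timedelta, timezone


def split_previous_month(snapshots, now, keep=1):
    """Return (kept_ids, deleted_ids) for snapshots created in the month before `now`."""
    prev = now.replace(day=1) - timedelta(days=1)  # last day of the previous month
    in_prev_month = sorted(
        (s for s in snapshots
         if s["SnapshotCreateTime"].year == prev.year
         and s["SnapshotCreateTime"].month == prev.month),
        key=lambda s: s["SnapshotCreateTime"],
        reverse=True,  # newest first
    )
    ids = [s["DBSnapshotIdentifier"] for s in in_prev_month]
    return ids[:keep], ids[keep:]


# Example: daily copies from June 2024, evaluated on July 1, 2024
sample = [
    {"DBSnapshotIdentifier": f"copy-db-2024-06-{day:02d}",
     "SnapshotCreateTime": datetime(2024, 6, day, 5, 0, tzinfo=timezone.utc)}
    for day in range(1, 31)
]
kept, deleted = split_previous_month(sample, datetime(2024, 7, 1, tzinfo=timezone.utc))
print(kept)          # ['copy-db-2024-06-30']
print(len(deleted))  # 29
```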

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "rds:DescribeDBSnapshots",
        "rds:DeleteDBSnapshot"
      ],
      "Resource": "*"
    }
  ]
}
```
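The monthly schedule in Step 3, and the yearly one in Step 9 further below, can be expressed as EventBridge cron expressions. EventBridge cron has six fields (minutes, hours, day-of-month, month, day-of-week, year) and is evaluated in UTC. The sketch assumes the monthly job runs at 00:00 UTC on the first day of each month and the yearly job at 00:00 UTC on January 1. These times are assumptions, so adjust them to your needs. `schedule_function` is a hypothetical helper name.

```python
MONTHLY_SCHEDULE = "cron(0 0 1 * ? *)"  # 00:00 UTC on day 1 of every month (assumed time)
YEARLY_SCHEDULE = "cron(0 0 1 1 ? *)"   # 00:00 UTC on January 1 (assumed time)


def schedule_function(rule_name, schedule_expression, function_arn, region="us-east-2"):
    """Create a scheduled rule on the default bus that invokes a Lambda function."""
    import boto3  # imported lazily; requires AWS credentials

    events = boto3.client("events", region_name=region)
    lambda_client = boto3.client("lambda", region_name=region)

    rule_arn = events.put_rule(
        Name=rule_name,
        ScheduleExpression=schedule_expression,
        State="ENABLED",
    )["RuleArn"]
    events.put_targets(Rule=rule_name, Targets=[{"Id": "1", "Arn": function_arn}])

    # Allow the scheduled rule to invoke the function
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    return rule_arn
```

For example: `schedule_function("td-keep-one-rds-snapshot-each-month", MONTHLY_SCHEDULE, "<function ARN>")`.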

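```python
import boto3
from botocore.exceptions import ClientError


def lambda_handler(event, context):
    # Initialize the RDS client in the region where the snapshots are stored
    rds = boto3.client('rds', region_name='us-east-2')  # Replace with your region

    s3_bucket = 'td-rds-snapshots'  # Replace with your S3 bucket name
    iam_role_arn = ''  # Replace with your IAM role ARN
    kms_key_id = ''  # Replace with your KMS key ID or ARN (created in Step 4)

    try:
        # List all manual snapshots (paginated; at most 100 per call)
        snapshots = []
        paginator = rds.get_paginator('describe_db_snapshots')
        for page in paginator.paginate(SnapshotType='manual'):
            snapshots.extend(page['DBSnapshots'])

        started, failed = [], []

        # Export each snapshot to Amazon S3
        for snapshot in snapshots:
            export_task_id = snapshot['DBSnapshotIdentifier']
            s3_prefix = f'rds-snapshots/{export_task_id}'  # Replace with your desired S3 prefix

            try:
                rds.start_export_task(
                    ExportTaskIdentifier=export_task_id,
                    SourceArn=snapshot['DBSnapshotArn'],
                    S3BucketName=s3_bucket,
                    IamRoleArn=iam_role_arn,
                    KmsKeyId=kms_key_id,
                    S3Prefix=s3_prefix
                )
                started.append(export_task_id)
            except ClientError as e:
                # One failure (for example, an export task with the same ID from a
                # previous yearly run) should not stop the remaining exports
                failed.append(f'{export_task_id}: {e}')

        return {
            'statusCode': 200,
            'body': f'Started export tasks for {len(started)} snapshots. Failed: {len(failed)} {failed}'
        }

    except Exception as e:
        return {
            'statusCode': 500,
            'body': f'Error: {str(e)}'
        }
```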
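The export function leaves `iam_role_arn` blank. That role is the one RDS assumes to write the export to Amazon S3. Based on the RDS documentation for exporting snapshots to S3, the role must trust the `export.rds.amazonaws.com` service principal. It also needs a permissions policy that grants access to the target bucket, which is not shown here. The sketch below builds the trust policy and creates the role with boto3. The helper names and the role name `td-rds-s3-export-role` are hypothetical.

```python
import json


def export_role_trust_policy():
    """Trust policy allowing the RDS snapshot export service to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "export.rds.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def create_export_role(role_name="td-rds-s3-export-role"):  # hypothetical role name
    import boto3  # imported lazily; requires IAM permissions to create roles

    iam = boto3.client("iam")
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(export_role_trust_policy()),
        Description="Role assumed by RDS to export snapshots to Amazon S3",
    )
    # The returned ARN is the value to use for iam_role_arn in the export function
    return role["Role"]["Arn"]
```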

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "s3:ListAccessPointsForObjectLambda",
        "s3:PutObject",
        "s3:GetObject",
        "rds:DescribeDBSnapshots",
        "rds:DescribeExportTasks",
        "rds:StartExportTask",
        "s3:ListBucket",
        "s3:DeleteObject",
        "s3:GetBucketLocation"
      ],
      "Resource": "*"
    }
  ]
}
```

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "iam:PassRole",
      "Resource": "*"
    }
  ]
}
```
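Steps 4 to 6 (KMS key, S3 bucket, lifecycle rule) can be scripted as well. The exact lifecycle settings from the console are not reproduced in this article. The sketch therefore assumes a rule that transitions every object under the `rds-snapshots/` prefix, the prefix used by the export function, to S3 Glacier Flexible Retrieval. That is the `GLACIER` storage class, applied after a configurable number of days (1 by default, an assumption). The helper names are hypothetical, and the bucket name and region are the placeholders used in this article.

```python
def glacier_lifecycle_configuration(prefix="rds-snapshots/", days=1):
    """Lifecycle rule moving exported snapshot files to S3 Glacier (assumed settings)."""
    return {
        "Rules": [{
            "ID": "move-rds-snapshot-exports-to-glacier",
            "Filter": {"Prefix": prefix},
            "Status": "Enabled",
            "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
        }]
    }


def create_key_and_bucket(bucket="td-rds-snapshots", region="us-east-2"):
    import boto3  # imported lazily; requires AWS credentials

    # Step 4: KMS key in the same region as the snapshots
    kms = boto3.client("kms", region_name=region)
    key_arn = kms.create_key(Description="RDS snapshot export to S3")["KeyMetadata"]["Arn"]

    # Step 5: bucket in the same region (us-east-1 must not pass a LocationConstraint)
    s3 = boto3.client("s3", region_name=region)
    if region == "us-east-1":
        s3.create_bucket(Bucket=bucket)
    else:
        s3.create_bucket(Bucket=bucket, CreateBucketConfiguration={"LocationConstraint": region})

    # Step 6: lifecycle rule
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=glacier_lifecycle_configuration()
    )
    return key_arn
```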

Copy the Automated RDS Snapshot to the same region

```python
import boto3


def lambda_handler(event, context):
    # Region of the RDS instance; the copy is created in the same region
    region = 'us-east-2'  # Replace with your desired region

    # Initialize the RDS client
    rds = boto3.client('rds', region_name=region)

    # Specify the RDS instance identifier
    rds_instance_identifier = 'prod-tutorialsdojo-portal-db-v2'  # Replace with your RDS instance ID

    try:
        # List automated snapshots for the specified RDS instance (paginated; at most 100 per call)
        snapshots = []
        paginator = rds.get_paginator('describe_db_snapshots')
        for page in paginator.paginate(
            DBInstanceIdentifier=rds_instance_identifier,
            SnapshotType='automated',
            IncludeShared=False,
            IncludePublic=False
        ):
            snapshots.extend(page['DBSnapshots'])

        # Only completed (available) snapshots can be copied
        snapshots = [s for s in snapshots if s['Status'] == 'available']

        if not snapshots:
            return {
                'statusCode': 404,
                'body': 'No completed automated snapshots found for the specified RDS instance.'
            }

        # Sort the snapshots by creation time (most recent first)
        snapshots.sort(key=lambda x: x['SnapshotCreateTime'], reverse=True)

        # Get the most recently created snapshot identifier
        source_snapshot_identifier = snapshots[0]['DBSnapshotIdentifier']

        # Automated snapshot IDs start with "rds:"; colons are not allowed in
        # manual snapshot IDs, so replace them and add a prefix
        target_snapshot_identifier = 'copy-' + source_snapshot_identifier.replace(':', '-')

        # Copy the most recent automated snapshot within the same region
        # (SourceRegion is only needed for cross-region copies)
        copy_response = rds.copy_db_snapshot(
            SourceDBSnapshotIdentifier=source_snapshot_identifier,
            TargetDBSnapshotIdentifier=target_snapshot_identifier
        )

        # Get the identifier of the newly created snapshot from the copy response
        new_snapshot_identifier = copy_response['DBSnapshot']['DBSnapshotIdentifier']

        return {
            'statusCode': 200,
            'body': f'Newly created snapshot "{new_snapshot_identifier}" is now copying.'
        }

    except Exception as e:
        return {
            'statusCode': 500,
            'body': f'Error: {str(e)}'
        }
```
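The copy function builds the manual snapshot ID by adding a `copy-` prefix and replacing colons with hyphens. RDS snapshot identifiers must follow these rules:

- They must start with a letter.
- They may contain only letters, digits, and hyphens.
- They must not end with a hyphen or contain two consecutive hyphens.
- They can be at most 255 characters long.

The hypothetical helper below applies the same naming and checks the result against those rules. This can be useful if your instance names differ from the example.

```python
import re

# First character a letter, then up to 254 letters, digits, or hyphens (255 total)
_VALID_ID = re.compile(r"[A-Za-z][A-Za-z0-9-]{0,254}")


def make_copy_identifier(source_identifier):
    """Build 'copy-<source>' with colons replaced, then validate RDS naming rules."""
    target = "copy-" + source_identifier.replace(":", "-")
    if not _VALID_ID.fullmatch(target) or target.endswith("-") or "--" in target:
        raise ValueError(f"Invalid snapshot identifier: {target!r}")
    return target


print(make_copy_identifier("rds:prod-tutorialsdojo-portal-db-v2-2024-07-17-05-10"))
```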

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "rds:DescribeDBSnapshots",
        "rds:CopyDBSnapshot"
      ],
      "Resource": "*"
    }
  ]
}
```

```json
{
  "source": ["aws.rds"],
  "detail-type": ["RDS DB Snapshot Event"],
  "detail": {
    "Message": ["Automated snapshot created"]
  }
}
```

Share the RDS Snapshot to the Backup Account

```python
import boto3


def lambda_handler(event, context):
    # Initialize the RDS client
    rds = boto3.client('rds')

    # AWS account ID of the Backup Account that receives the snapshot
    target_aws_account_id = 'YOUR_TARGET_ACCOUNT_ID'  # Replace with the Backup Account AWS account ID

    # Specify the RDS instance identifier
    rds_instance_identifier = 'YOUR_RDS_INSTANCE_IDENTIFIER'  # Replace with your RDS instance ID

    try:
        # List manual snapshots for the specified RDS instance (paginated; at most 100 per call)
        snapshots = []
        paginator = rds.get_paginator('describe_db_snapshots')
        for page in paginator.paginate(
            DBInstanceIdentifier=rds_instance_identifier,
            SnapshotType='manual',
            IncludeShared=False,
            IncludePublic=False
        ):
            snapshots.extend(page['DBSnapshots'])

        # Only completed (available) snapshots can be shared
        snapshots = [s for s in snapshots if s['Status'] == 'available']

        if not snapshots:
            return {
                'statusCode': 404,
                'body': 'No completed manual snapshots found for the specified RDS instance.'
            }

        # Sort the snapshots by creation time (most recent first)
        snapshots.sort(key=lambda x: x['SnapshotCreateTime'], reverse=True)

        # Get the most recently created snapshot identifier
        source_snapshot_identifier = snapshots[0]['DBSnapshotIdentifier']

        # Share the snapshot with the Backup Account by adding it to the "restore" attribute
        rds.modify_db_snapshot_attribute(
            DBSnapshotIdentifier=source_snapshot_identifier,
            AttributeName='restore',
            ValuesToAdd=[target_aws_account_id]
        )

        return {
            'statusCode': 200,
            'body': f'Manual snapshot "{source_snapshot_identifier}" is now shared with Backup Account.'
        }

    except Exception as e:
        return {
            'statusCode': 500,
            'body': f'Error: {str(e)}'
        }
```
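To confirm that a snapshot was actually shared, `describe_db_snapshot_attributes` returns the account IDs listed under the `restore` attribute. This call needs the `rds:DescribeDBSnapshotAttributes` permission, which is not in the policy for this function. The sketch keeps the check in a pure function so it can be tested against a sample response. The helper names, snapshot ID, and account IDs are placeholders.

```python
def is_shared_with(attributes_response, account_id):
    """True if account_id is listed in the snapshot's 'restore' attribute."""
    result = attributes_response["DBSnapshotAttributesResult"]
    for attribute in result["DBSnapshotAttributes"]:
        if attribute["AttributeName"] == "restore":
            return account_id in attribute.get("AttributeValues", [])
    return False


def check_share(snapshot_identifier, account_id, region="us-east-2"):
    import boto3  # imported lazily; requires AWS credentials for the PROD account

    rds = boto3.client("rds", region_name=region)
    response = rds.describe_db_snapshot_attributes(DBSnapshotIdentifier=snapshot_identifier)
    return is_shared_with(response, account_id)
```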

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "rds:DescribeDBSnapshots",
        "rds:CopyDBSnapshot",
        "rds:ModifyDBSnapshotAttribute"
      ],
      "Resource": "*"
    }
  ]
}
```

```json
{
  "source": ["aws.rds"],
  "detail-type": ["RDS DB Snapshot Event"],
  "detail": {
    "SourceType": ["SNAPSHOT"],
    "EventID": ["RDS-EVENT-0197"]
  }
}
```
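Step 7 adds the Backup Account's event bus as a target of the td-share-rds-snapshot rule in the PROD account, so that snapshot copy events are forwarded. A scripted equivalent might look like the sketch below. The helper names and target ID are hypothetical, and the ARN is a placeholder for the one copied in Step 5. The sketch sets no `RoleArn`, assuming the bus policy grants `events:PutEvents` to the PROD account ID directly. AWS requires a role in some setups, for example when access is granted through an organization.

```python
def backup_bus_target(backup_bus_arn, target_id="backup-account-event-bus"):
    """Target entry pointing a rule at the Backup Account's event bus."""
    if not backup_bus_arn.startswith("arn:aws:events:") or ":event-bus/" not in backup_bus_arn:
        raise ValueError(f"Not an event bus ARN: {backup_bus_arn!r}")
    return {"Id": target_id, "Arn": backup_bus_arn}


def forward_to_backup_bus(rule_name, backup_bus_arn, region="us-east-2"):
    import boto3  # imported lazily; requires AWS credentials for the PROD account

    events = boto3.client("events", region_name=region)
    response = events.put_targets(Rule=rule_name, Targets=[backup_bus_target(backup_bus_arn)])
    if response["FailedEntryCount"]:
        raise RuntimeError(f"Failed to add target: {response['FailedEntries']}")
    return response
```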

Conclusion