A foundation model is a large-scale, pre-trained artificial intelligence model that serves as a general-purpose system for various downstream tasks. These models, such as GPT for natural language processing or CLIP for vision-language understanding, are trained on extensive datasets and can be fine-tuned or adapted to specific applications. Foundation models are characterized by their ability to generalize across tasks, making them highly versatile and impactful in AI-driven solutions.

Evaluating these models’ performance is essential to understand their reliability, applicability, and potential business value. However, these models’ vast scale and complexity pose unique challenges for evaluation, requiring a multifaceted approach. This article explores various methods and metrics used to assess the performance of foundation models and their alignment with business objectives.

Approaches to Evaluate Foundation Model Performance

Human Evaluation

Human evaluation involves real users or experts interacting with the foundation model to provide qualitative feedback. This method is particularly valuable for assessing nuanced and subjective aspects of model performance that are difficult to quantify.

- Key Aspects Assessed:

- User Experience: Measures how intuitive, satisfying, and seamless the interaction feels from the user’s perspective.

- Contextual Appropriateness: Evaluates whether the model’s responses are relevant to the context, sensitive to nuances, and aligned with human communication expectations.

- Creativity and Flexibility: Analyzes the model’s ability to handle unexpected queries or complex scenarios that require innovative problem-solving.

Human evaluation is used during iterative development to refine and fine-tune models based on real-world user feedback. For instance, businesses often deploy human evaluation after launching models in production, allowing them to identify areas for improvement through live user interactions. This approach complements quantitative evaluations by highlighting subjective user-centric dimensions.

Benchmark Datasets

Benchmark datasets provide a systematic and quantitative method for evaluating foundation models. These datasets consist of predefined tasks, inputs, and associated metrics that measure performance objectively and replicable.

- Metrics Measured:

- Accuracy: Determines how the model performs specific tasks correctly according to predefined standards (e.g., matching expected outputs).

- Speed and Efficiency: Evaluate the model’s response time and impact on operational workflows.

- Scalability: Tests the model’s ability to maintain performance under increased workloads or as the scale of data or users grows.

- Automation in Benchmarking: Modern approaches, such as using a large language model (LLM) as a judge, can automate the grading process. This involves:

- Comparing model outputs to benchmark answers using an external LLM.

- Scoring the model based on metrics like accuracy, relevance, and comprehensiveness.

Benchmark datasets are essential during the initial testing to verify technical performance and enable comparisons across models. They help ensure that the model meets baseline specifications before human evaluation begins.

Combined Approach

A combined approach leverages the strengths of both human evaluation and benchmark datasets to provide a holistic view of performance:

- Benchmark datasets offer quantitative insights into the model’s technical capabilities, such as accuracy and efficiency.

- Human evaluation contributes a qualitative perspective, ensuring the model is effective, engaging, and aligned with user expectations.

For example, benchmark datasets can be used in business use cases to evaluate a model before deployment (e.g., assessing a chatbot’s accuracy). Once deployed, human evaluators can provide feedback based on real-world interactions, enabling the model to adapt and improve over time.

By combining these two methods, organizations can ensure their models are technically proficient, relevant, and impactful in practical applications.

Metrics for Assessing Foundation Model Performance

Evaluating foundation models, particularly for text generation and transformation tasks, requires robust metrics that assess their outputs’ technical and semantic quality. Below are three widely used metrics—ROUGE, BLEU, and BERTScore—that provide valuable insights into the performance of these models.

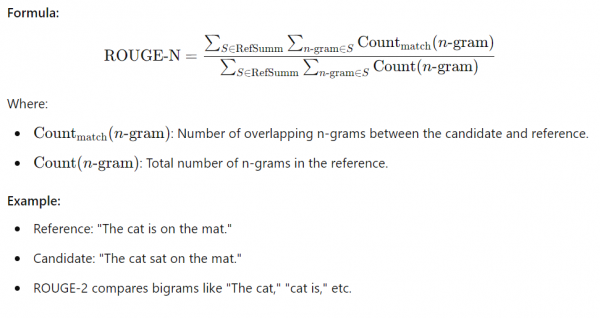

ROUGE (Recall-Oriented Understudy for Gisting Evaluation)

ROUGE is a set of metrics primarily used for evaluating tasks like text summarization and machine translation. ROUGE measures the overlap between generated text and reference text, making it effective for evaluating recall (i.e., how much important information is retained).

-

Key Variants:

- ROUGE-N: Measures the overlap of n-grams between the generated and reference text.

For example:- ROUGE-1: Unigram overlap, useful for capturing key individual words.

- ROUGE-2: Bigram overlap helps understand phrase-level fluency.

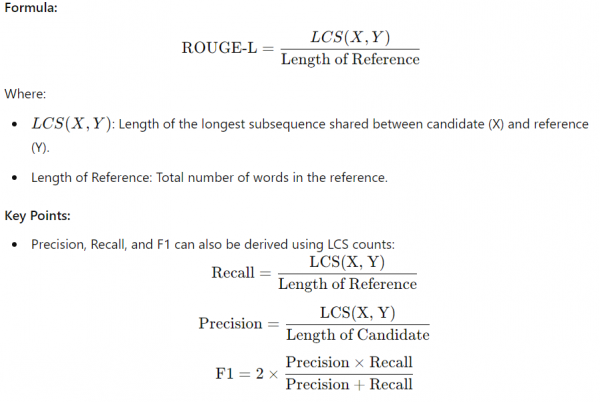

- ROUGE-L: Uses the longest common subsequence (LCS) to evaluate the coherence and logical flow of the generated content. It is particularly useful for assessing narrative consistency.

- ROUGE-N: Measures the overlap of n-grams between the generated and reference text.

-

Advantages:

- Simple and interpretable.

- Correlates well with human judgment for recall-oriented tasks.

- Particularly suited for summarization tasks.

-

Example Use Case: In a business scenario, ROUGE scores can be applied to evaluate AI-generated product descriptions by comparing them to expert-written descriptions. For instance, a ROUGE score of 0.85 might indicate a high overlap with reference descriptions, ensuring that the generated text effectively covers all critical product features.

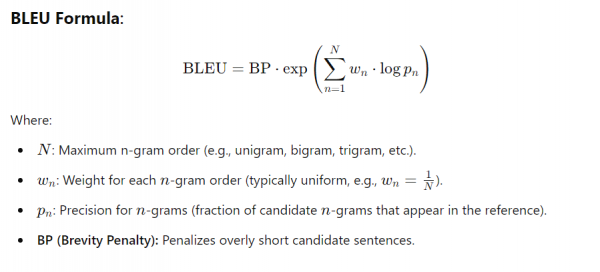

BLEU: Precision-Focused Evaluation

BLEU (Bilingual Evaluation Understudy) is widely used to evaluate machine-translated text by comparing it to high-quality human translations. As a precision-oriented metric, BLEU emphasizes the proportion of generated N-grams (sequences of words) that appear in the reference text while penalizing overly short outputs using a brevity penalty. BLEU is particularly effective when exact matches to reference outputs are crucial.

- Key Features:

- Precision Metric: Focuses on how many N-grams in the machine-generated text match those in the reference.

- N-gram Analysis: Evaluates combinations such as unigrams, bigrams, trigrams, and quadrigrams to assess fluency and coherence.

- Brevity Penalty: Discourages short outputs that might inflate precision scores.

- Advantages:

- Easy to compute and interpret.

- Provides reliable assessments in structured tasks like machine translation.

- Limitations:

- Insensitive to synonyms and paraphrasing.

- It may overlook the grammaticality and fluency of generated text.

- Example Use Case in Business: For customer service chatbots, BLEU can measure the accuracy of responses to common queries by comparing them to pre-approved reference answers. A BLEU score of 0.80 might indicate high alignment, but additional qualitative reviews may be necessary to address nuances.

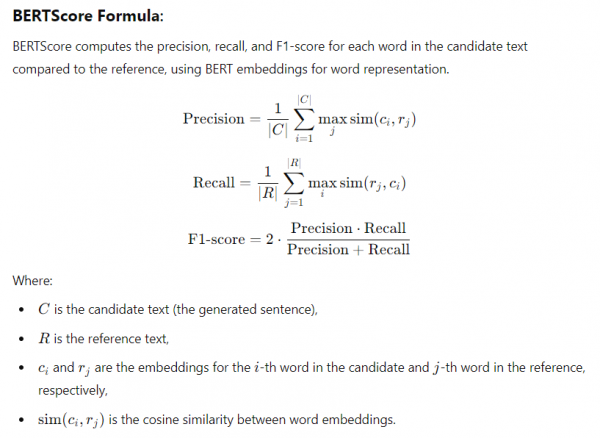

BERTScore: Semantic Similarity Assessment

BERTScore leverages pre-trained embeddings from models like BERT to compute the cosine similarity between contextual embeddings of words in the generated and reference texts. Unlike BLEU, which relies on exact word matches, BERTScore evaluates the semantic alignment of the two texts, making it robust to paraphrasing and nuanced language differences.

- Key Features:

- Semantic Evaluation: Measures the meaning generated text conveys rather than lexical overlap.

- Contextual Embeddings: Utilizes rich embeddings to capture relationships between words and context.

- Nuance Sensitivity: Handles synonymy and minor paraphrasing gracefully.

- Advantages:

- Suitable for tasks where preserving meaning is more important than matching words.

- Complements traditional metrics by providing deeper insight into semantic quality.

- Limitations:

- Computationally intensive compared to BLEU or ROUGE.

- It may require domain-specific fine-tuning of embeddings for optimal results.

- Example Use Case in Business: For e-commerce platforms generating personalized product recommendations, BERTScore can evaluate the semantic relevance of recommendations by comparing generated descriptions with ideal ones. A high BERTS score ensures the recommendations align with user preferences and contextual requirements.

Aligning Model Performance with Business Objectives

Foundation models, due to their general-purpose design and versatility, are increasingly being used to address complex business challenges, ranging from enhancing customer engagement to optimizing operational efficiency. However, ensuring these models deliver measurable value requires a robust alignment between their technical performance and overarching business objectives. This section explores how to evaluate foundation models in the context of business outcomes.

Understanding Business Objectives

The first step in aligning model performance with business goals is clearly defining the objectives the model is expected to achieve. These objectives often fall into the following categories:

- Productivity: Streamlining workflows, reducing manual effort, or increasing the speed of processes (e.g., automating report generation).

- User Engagement: Improving customer interaction through personalized and contextually relevant experiences (e.g., AI-powered chatbots).

- Revenue Impact: Driving sales or increasing average order values through optimized recommendations or compelling content (e.g., AI-generated product descriptions).

- Operational Efficiency: Enhancing scalability and reducing costs through intelligent automation.

Defining these goals helps establish a framework for evaluating the model’s success in practical, business-oriented terms.

Use Cases

Aligning foundation models with business goals can yield significant improvements across various industries. Below are examples of practical applications:

- Customer Service Chatbots:

- Objective: Reduce average handling time while increasing customer satisfaction.

- Approach: Use metrics like BLEU to evaluate chatbot accuracy and customer feedback scores for subjective evaluation.

- E-commerce Product Recommendations:

- Objective: Increase average order value and conversion rates.

- Approach: Combine BERTScore (to ensure semantic relevance of recommendations) with business metrics such as revenue uplift or click-through rates.

- Automated Content Generation:

- Objective: Create engaging and high-quality marketing materials.

- Approach: Use ROUGE to evaluate the coverage and quality of generated content, complemented by engagement metrics like user clicks and shares.

Challenges in Aligning Models with Business Goals

While the potential of foundation models is vast, aligning them with business outcomes is not without challenges:

- Complexity in Mapping Metrics: Translating technical performance metrics into actionable business insights requires a deep understanding of the model and the business domain.

- Scalability and Adaptability: As business needs evolve, the model must remain relevant, scalable, and continuous.

- Bias and Fairness: Addressing biases in model outputs is critical, especially in industries where fairness and inclusivity are paramount (e.g., recruitment, finance).

Conclusion

Foundation models represent a transformative leap in artificial intelligence, offering unparalleled versatility and generalization across various tasks. However, their true potential is unlocked only when their technical capabilities are aligned with real-world business objectives. This article outlined key methods for evaluating foundation models, including quantitative metrics like ROUGE, BLEU, and BERTScore and qualitative human evaluations. By combining these approaches, organizations can assess technical performance and real-world impact.

References:

- https://explore.skillbuilder.aws/learn/course/19613/play/127966/optimizing-foundation-models

- https://aws.amazon.com/what-is/foundation-models/

- https://docs.kolena.com/metrics/bertscore/

- https://haticeozbolat17.medium.com/text-summarization-how-to-calculate-bertscore-771a51022964

- https://www.studysmarter.co.uk/explanations/engineering/artificial-intelligence-engineering/bleu-score/

- https://towardsdatascience.com/foundations-of-nlp-explained-bleu-score-and-wer-metrics-1a5ba06d812b

- https://www.freecodecamp.org/news/what-is-rouge-and-how-it-works-for-evaluation-of-summaries-e059fb8ac840/

- https://docs.kolena.com/metrics/rouge-n/