In web hosting and server management, HTTP 500 errors can be a difficult obstacle. They often arise from file descriptor limits, which cap the number of files and sockets a server process can hold open and so restrict its capacity to handle incoming requests. By understanding how to raise these limits, you can reduce HTTP 500 errors and keep your web applications running smoothly.

Raising file descriptor limits helps fix HTTP 500 errors by enabling servers to handle more connections at once. This improves server reliability and efficiency, particularly during high traffic, leading to fewer errors and quicker responses. It also reduces downtime, keeping your services available to your users. Adjusting these limits is a simple yet effective way to improve your server’s overall performance.

Before making any adjustments, check the current file descriptor limits. These checks show the existing configuration and serve as a baseline for later changes.

An easy way to check the file descriptor limits is to run the one-line command listed under Initial Checks in Git Bash. To do the same check step by step:

– Run `ps aux | grep nginx`.
– Identify the Nginx worker process ID from the output. Be sure to select the process ID of the “worker process” and not the “master process.”
– Run `cat /proc/<process id>/limits` with the process ID from the previous step, for example `cat /proc/9570/limits`.
– Look for the “Max open files” entry to determine the current file descriptor limit.
Alternatively, for a more direct approach:

– Check the hard limit with `ulimit -Hn`.
– Check the soft limit with `ulimit -Sn`.

To increase the file descriptor limits:

– Open the /etc/sysctl.conf configuration file with `sudo vi /etc/sysctl.conf`.
– Paste this line at the bottom: `fs.file-max = 70000`.
– Open the nginx.conf file with `vi /etc/nginx/nginx.conf`.
– Insert `worker_rlimit_nofile 30000;` below the `pid /run/nginx.pid;` line.
– Restart NGINX to apply the changes: `sudo service nginx restart`.

Once the adjustments are in place, verify that they have taken effect by checking the file descriptor limits again with the same command used for the initial check.

More than just fixing HTTP 500 errors, raising file descriptor limits makes your web server more reliable and efficient overall. With the steps outlined in this blog, you are equipped to manage your server setup proactively. This leads to smoother experiences for your users and more dependable service delivery. Most importantly, addressing file descriptor limits not only resolves immediate issues but also lays a solid foundation for the future success of your online presence.

Initial Checks

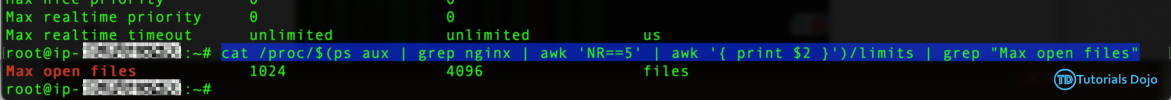

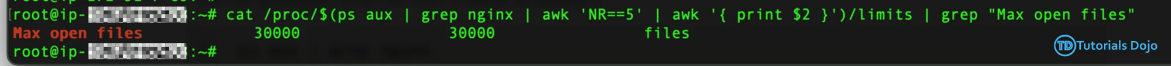

cat /proc/$(ps aux | grep nginx | awk 'NR==5' | awk '{ print $2 }')/limits | grep "Max open files"

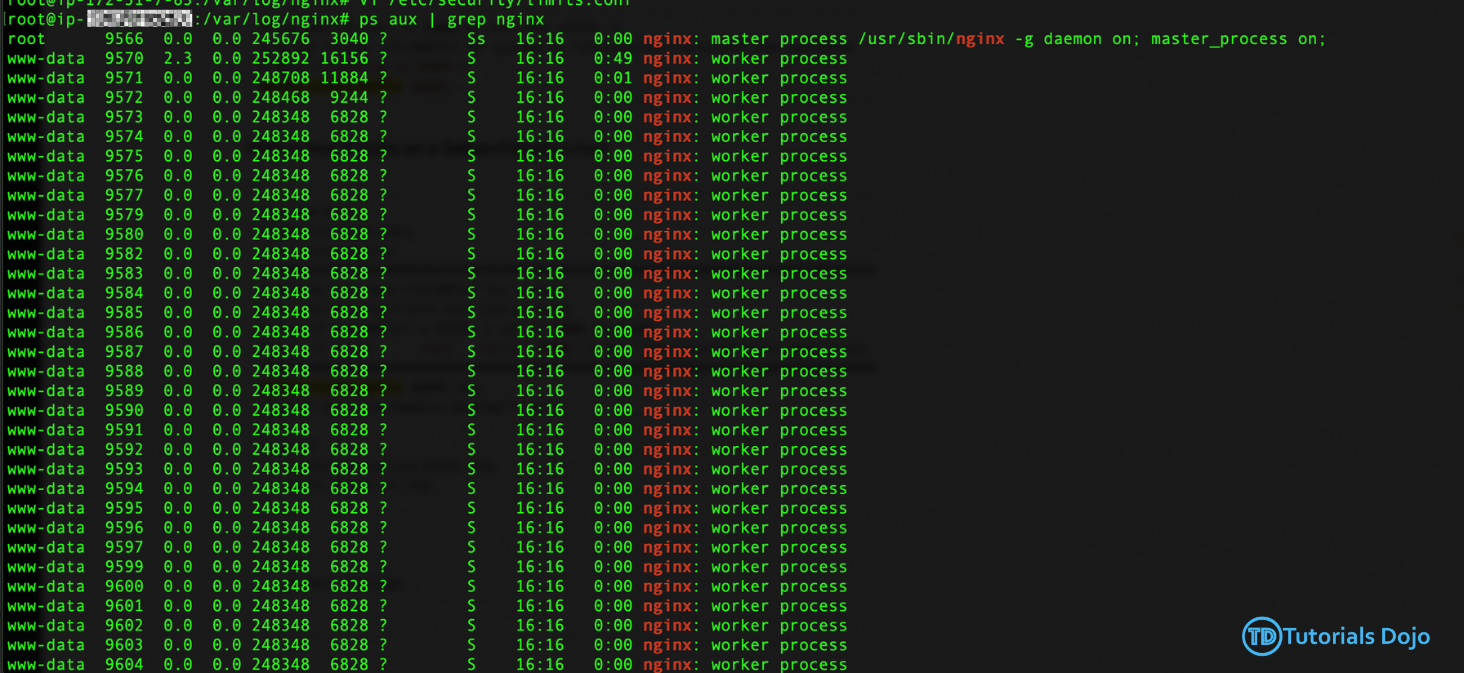

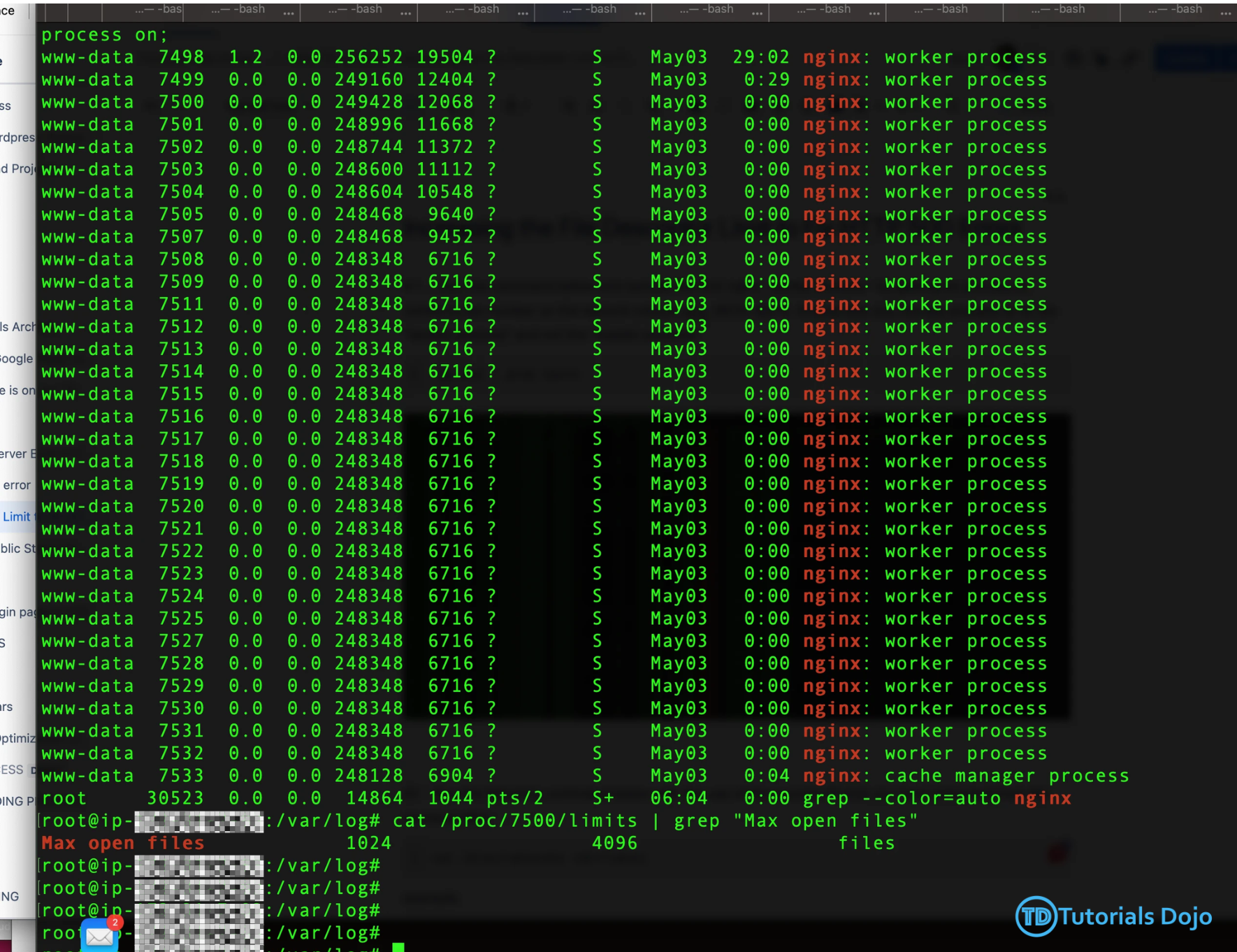

Determine Nginx Worker Process ID:

ps aux | grep nginx
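The output of `ps aux | grep nginx` typically includes the master process and the `grep` command itself, so the worker has to be picked out by eye. As a minimal sketch, the filter below prints only worker PIDs. The function name `nginx_worker_pids` is a hypothetical helper, and it assumes workers appear with the usual `nginx: worker process` title.

```shell
# nginx_worker_pids (hypothetical helper, not part of Nginx)
# Read `ps aux`-style lines on stdin and print the PID (column 2) of every
# Nginx worker process. The [w] bracket keeps this awk command from matching
# its own entry when it is fed live `ps` output.
nginx_worker_pids() {
  awk '/nginx: [w]orker process/ { print $2 }'
}
```

It could be used as `ps aux | nginx_worker_pids`, which prints one PID per line and nothing if no worker is running.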

Check File Descriptor Limits:

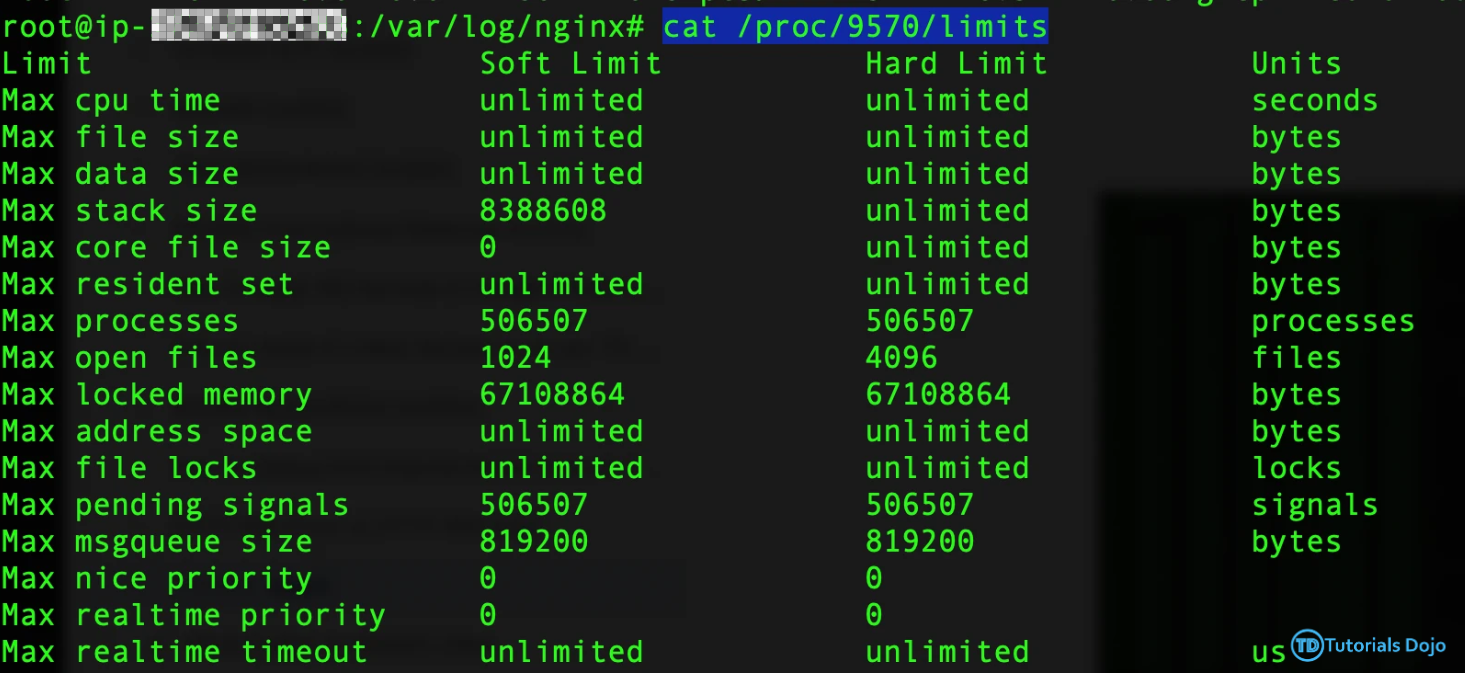

– Run the command: `cat /proc/<process id>/limits`

cat /proc/9570/limits

– Use the command: `cat /proc/<process id>/limits | grep "Max open files"`

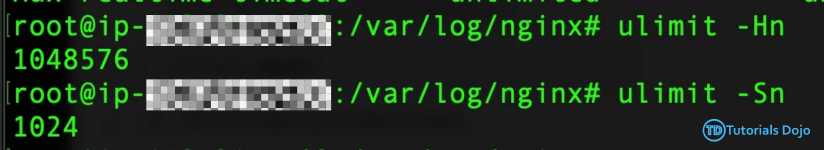
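The per-process check can also be wrapped in a small helper that labels the two values on the “Max open files” line. This is a sketch only: `max_open_files` is a hypothetical name, and it assumes a Linux host where `/proc/<process id>/limits` lists the soft limit before the hard limit.

```shell
# max_open_files PID (hypothetical helper)
# Print the soft and hard "Max open files" limits of a running process.
max_open_files() {
  # Line layout in /proc/<pid>/limits: "Max open files  <soft>  <hard>  files"
  awk '/^Max open files/ { print "soft=" $4, "hard=" $5 }' "/proc/$1/limits"
}
```

With the example process ID from above, the call would be `max_open_files 9570`.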

Verify Hard and Soft Limits:

ulimit -Hn

ulimit -Sn
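Both values can be printed on one labeled line, as in the sketch below (`show_nofile_limits` is a hypothetical name). Keep in mind that `ulimit` reports the limits of the current shell session, which may differ from those of the running Nginx workers, so the `/proc` check remains the more reliable source for Nginx itself.

```shell
# show_nofile_limits (hypothetical helper)
# Print the soft and hard open-file limits of the current shell.
show_nofile_limits() {
  printf 'soft=%s hard=%s\n' "$(ulimit -Sn)" "$(ulimit -Hn)"
}

show_nofile_limits
```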

Increasing File Descriptor Limits Implementation

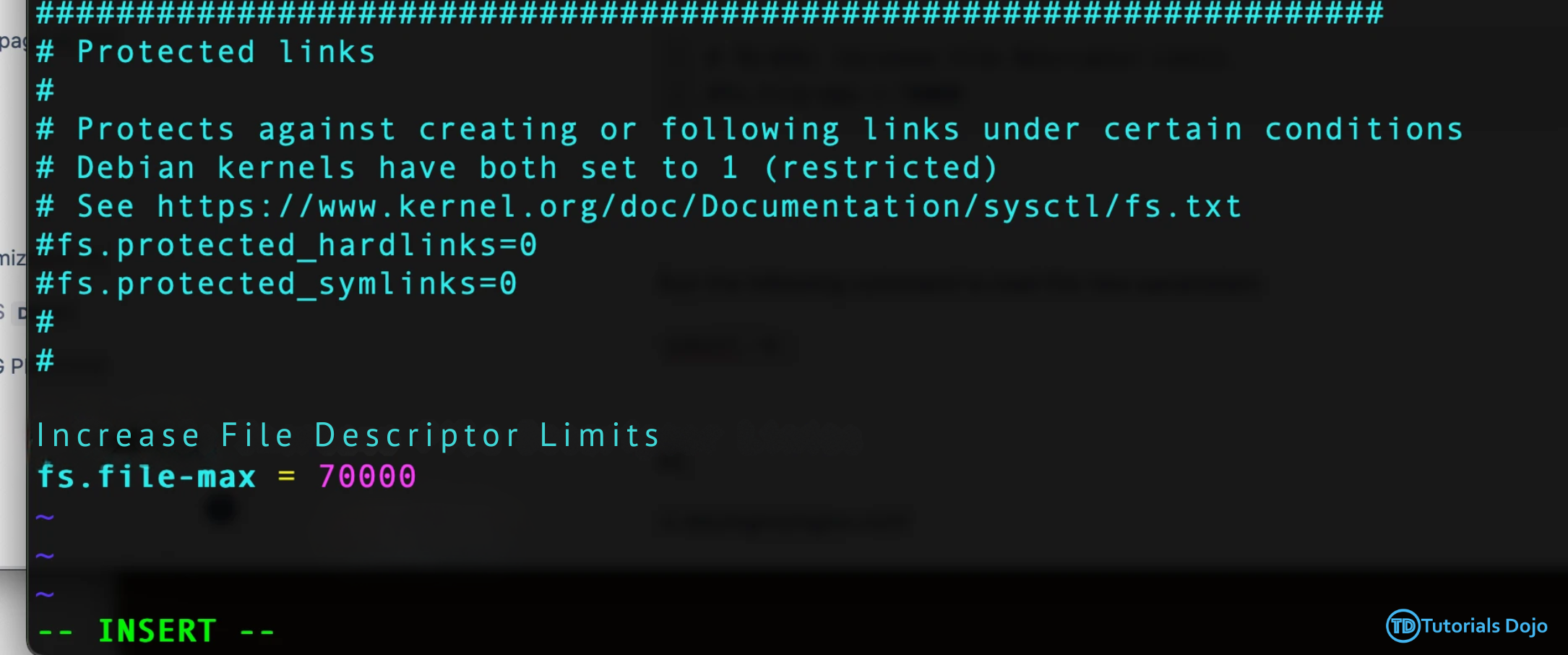

Update System Configuration:

Run `sudo vi /etc/sysctl.conf`

fs.file-max = 70000

– Execute this code to apply the new parameters: `sudo sysctl -p`
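If you prefer not to edit the file by hand, the same change can be scripted so that running it twice does not add a duplicate line. The sketch below is one possible approach, not the method used in this post: `set_sysctl_value` is a hypothetical helper, and it assumes GNU `sed` (for `-i`) and write access to the target file.

```shell
# set_sysctl_value FILE KEY VALUE (hypothetical helper)
# Replace an existing "KEY = ..." line in FILE, or append one if none exists.
set_sysctl_value() {
  file=$1
  key=$2
  value=$3
  # Escape dots so the key is matched literally in the regular expressions.
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -Eq "^[[:space:]]*${key_re}[[:space:]]*=" "$file"; then
    # An assignment already exists: rewrite it in place.
    sed -i -E "s|^[[:space:]]*${key_re}[[:space:]]*=.*|${key} = ${value}|" "$file"
  else
    # No assignment yet: append one at the bottom of the file.
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}
```

For the value used in this post, the call would be `set_sysctl_value /etc/sysctl.conf fs.file-max 70000`, run as root, followed by `sysctl -p` to load it.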

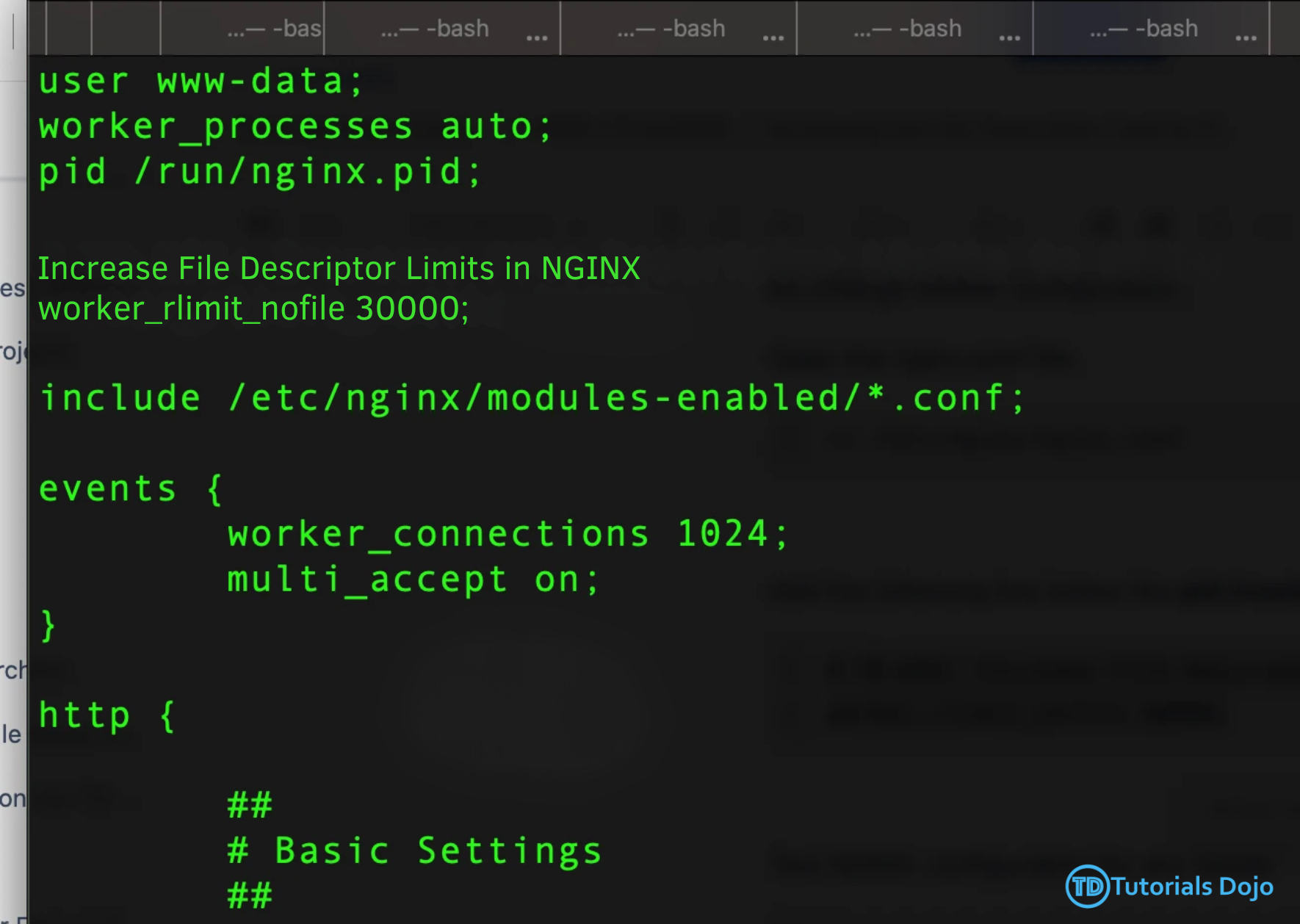

Adjust NGINX Configuration:

vi /etc/nginx/nginx.conf

worker_rlimit_nofile 30000;

– Validate NGINX configuration syntax using: `nginx -t`
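Besides the syntax check, it can help to confirm that the directive was actually saved with the intended value before restarting. A minimal sketch, assuming the directive sits on its own line in the file; `rlimit_from_conf` is a hypothetical helper name.

```shell
# rlimit_from_conf FILE (hypothetical helper)
# Print the value of worker_rlimit_nofile from an Nginx configuration file.
# Commented-out lines are skipped because their first field is not the
# directive name. Prints nothing if the directive is absent.
rlimit_from_conf() {
  awk '$1 == "worker_rlimit_nofile" { sub(/;$/, "", $2); print $2 }' "$1"
}
```

Running `rlimit_from_conf /etc/nginx/nginx.conf` should print `30000` after the edit described above.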

Restart NGINX:

sudo service nginx restart

Increasing File Descriptor Limits Verification

cat /proc/$(ps aux | grep nginx | awk 'NR==5' | awk '{ print $2 }')/limits | grep "Max open files"
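The one-liner above reads the fifth line of the `ps` output, which may or may not be a worker process on a given host, so it is worth confirming the process ID it resolves to. As a sketch of a pass/fail check, the hypothetical helper below reads a limits file on stdin and succeeds only when the soft “Max open files” value is at least the expected number.

```shell
# soft_limit_at_least EXPECTED (hypothetical helper)
# Read the contents of /proc/<pid>/limits on stdin. Exit 0 if the soft
# "Max open files" limit is "unlimited" or >= EXPECTED, otherwise exit 1.
soft_limit_at_least() {
  awk -v want="$1" '
    /^Max open files/ {
      found = 1
      ok = ($4 == "unlimited" || $4 + 0 >= want + 0)
    }
    END {
      if (found && ok) exit 0
      exit 1
    }
  '
}
```

For example, `soft_limit_at_least 30000 < /proc/<process id>/limits && echo "limit applied"` prints the message only when the worker picked up the new value.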

Conclusion

Solving HTTP 500 Errors by Increasing File Descriptor Limits
