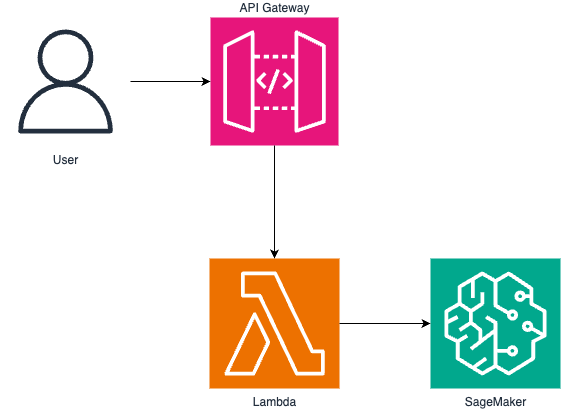

Deploying a Serverless Inference Endpoint with Amazon SageMaker

John Patrick Laurel, 2023-12-27

Introduction

In this post, we take a deep dive into serverless machine learning (ML) inference with Amazon SageMaker: deploying ML models without provisioning or managing the servers that run them.

What is Serverless Inference?

Serverless inference is a cloud execution model in which the cloud provider dynamically allocates and scales the compute resources behind your model. Because the underlying infrastructure is abstracted away, developers and data scientists can focus on their model and application logic instead of on capacity planning. This approach offers several benefits:

- Cost-Effectiveness: You pay [...]
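To make the idea concrete, here is a minimal sketch of how a serverless endpoint can be configured with the low-level boto3 SageMaker client. It assumes a model has already been created in SageMaker; the names `my-model`, `demo-config`, and the helper functions `build_endpoint_config` and `deploy` are hypothetical, and the memory and concurrency values are only illustrative. Instead of an instance type and count, the production variant carries a `ServerlessConfig`, and SageMaker provisions compute on demand. The request is built as a plain dictionary so it can be inspected without AWS credentials; only `deploy()` actually calls AWS.

```python
"""Sketch: create a SageMaker serverless endpoint with boto3 (names are hypothetical)."""

# Memory sizes accepted by SageMaker Serverless Inference, in MB (1 GB steps).
ALLOWED_MEMORY_MB = (1024, 2048, 3072, 4096, 5120, 6144)


def build_endpoint_config(config_name: str, model_name: str,
                          memory_mb: int = 2048, max_concurrency: int = 5) -> dict:
    """Return keyword arguments for sagemaker_client.create_endpoint_config(...)."""
    if memory_mb not in ALLOWED_MEMORY_MB:
        raise ValueError(f"memory_mb must be one of {ALLOWED_MEMORY_MB}")
    if not 1 <= max_concurrency <= 200:
        raise ValueError("max_concurrency must be between 1 and 200")
    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            {
                "VariantName": "AllTraffic",
                "ModelName": model_name,
                # No InstanceType/InitialInstanceCount: ServerlessConfig tells
                # SageMaker to allocate and scale compute for us.
                "ServerlessConfig": {
                    "MemorySizeInMB": memory_mb,
                    "MaxConcurrency": max_concurrency,
                },
            }
        ],
    }


def deploy(endpoint_name: str, model_name: str) -> None:
    """Create the endpoint config and endpoint (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so build_endpoint_config works without boto3

    sm = boto3.client("sagemaker")
    config_name = f"{endpoint_name}-config"
    sm.create_endpoint_config(**build_endpoint_config(config_name, model_name))
    sm.create_endpoint(EndpointName=endpoint_name, EndpointConfigName=config_name)
    # Block until the endpoint reaches the InService state.
    sm.get_waiter("endpoint_in_service").wait(EndpointName=endpoint_name)


if __name__ == "__main__":
    cfg = build_endpoint_config("demo-config", "my-model")
    print(cfg["ProductionVariants"][0]["ServerlessConfig"])
    # prints {'MemorySizeInMB': 2048, 'MaxConcurrency': 5}
```

Keeping the request construction separate from the AWS calls is a design choice for the sketch: it lets you validate the memory and concurrency settings locally before anything is created in your account.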