Last updated on February 12, 2025

The AWS Certified Solutions Architect Professional Exam SAP-C02 Overview

A few years ago, before you could take the AWS Certified Solutions Architect Professional exam (or SA Pro for short), you first had to pass the associate-level exam of this track. This ensured that you had sufficient knowledge and understanding of architecting in AWS before tackling the more difficult certification. In October 2018, AWS removed this requirement, so there are no longer any prerequisites for the Professional-level exams. You are now free to pursue this certification directly if you wish.

This certification is truly a leveled-up version of the AWS Solutions Architect Associate certification. It tests your ability to create well-architected solutions in AWS, but on a grander scale and with more difficult requirements. Because of this, we recommend that you go through our exam preparation guides for the AWS Certified Solutions Architect Associate and even the AWS Certified Cloud Practitioner if you have not done so yet. They contain review materials that will be crucial for passing the exam.

AWS Certified Solutions Architect Professional SAP-C02 Study Materials

The FREE AWS Exam Readiness course, official AWS sample questions, whitepapers, FAQs, AWS documentation, re:Invent videos, forums, labs, AWS cheat sheets, AWS practice exams, and personal experience are what you will need to pass the exam. Since the SA Pro is one of the most difficult AWS certification exams out there, you have to prepare with every study material you can get your hands on. To learn more about your exam, go through the official AWS SAP-C02 Exam Guide, which discusses the domains you will be tested on.

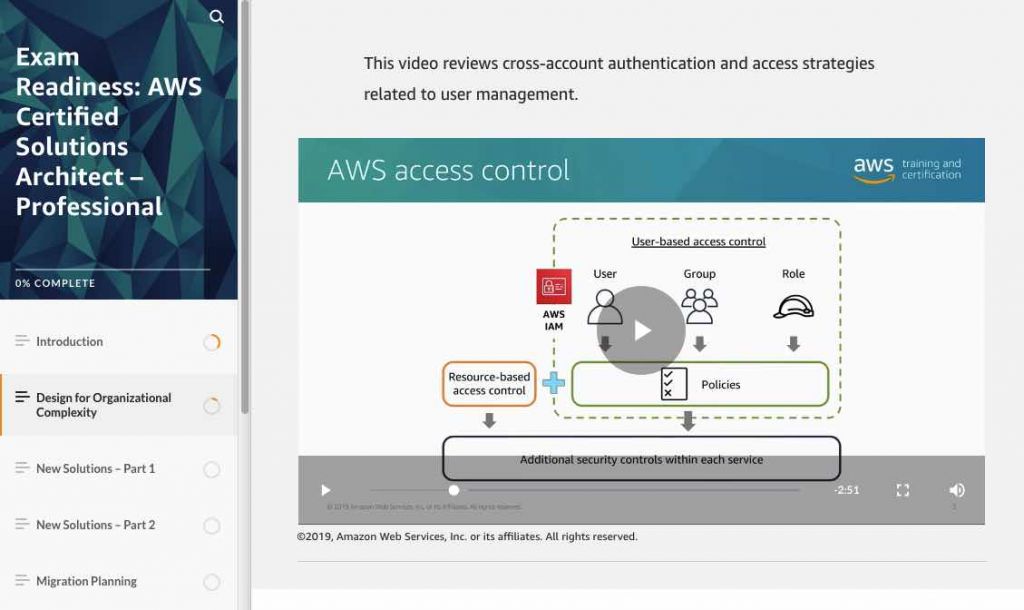

AWS has a digital course called Exam Readiness: AWS Certified Solutions Architect – Professional, a short video lecture that discusses what to expect on the exam. It should give you a sufficient overview of the different concepts and practices you'll need to know. Each topic in the course also ends with a short quiz to help you lock in the important information.

For whitepapers, aside from the ones listed in our Solutions Architect Associate and Cloud Practitioner exam guides, you should also study the following:

- Securing Data at Rest with Encryption

- Web Application Hosting in the AWS Cloud

- Migrating AWS Resources to a New Region

- Practicing Continuous Integration and Continuous Delivery on AWS: Accelerating Software Delivery with DevOps

- Microservices on AWS

- AWS Security Best Practices

- AWS Well-Architected Framework

- Security Pillar – AWS Well-Architected Framework

- Using Amazon Web Services for Disaster Recovery

- AWS Architecture Center architecture whitepapers

The instructor-led classroom course “Advanced Architecting on AWS” should also provide additional information on how to implement the concepts and best practices you have learned from whitepapers and other documentation. Be sure to check it out.

Your AWS exam could also include a lot of migration scenarios. Visit this AWS documentation to learn about the different ways of performing cloud migration.

Also check out this article: Top 5 FREE AWS Review Materials.

AWS Services to Focus On For the AWS Certified Solutions Architect Professional SAP-C02 Exam

Generally, as a soon-to-be AWS Certified SA Pro, you should have a thorough understanding of every service and feature in AWS. But for the purpose of this review, give more attention to the following services since they are common topics in the SA Pro exams:

- AWS Organizations

  - Know how to create organizational units (OUs) and service control policies (SCPs), and how to configure any additional settings in AWS Organizations.

  - There might be scenarios where the management account (formerly called the master account) needs access to member accounts. Your options can include setting up OUs and SCPs, delegating an IAM role, or providing cross-account access.

  - Differentiate SCPs from IAM policies. SCPs only set the maximum permissions available to member accounts; they never grant permissions by themselves.

  - You should also know how to integrate AWS Organizations with other services such as CloudFormation, Service Catalog, and IAM to manage resources and user access.

  - Lastly, read how consolidated billing in your organization can save on costs, and what the benefits of enabling all features are.

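- AWS Application Migration Service

  - Study the different ways to migrate on-premises servers to the AWS Cloud.

  - Also study how you can perform the migration in a secure and reliable manner.

  - Be aware of what types of servers AWS Application Migration Service (MGN) can migrate and what the output of the migration process is. MGN replicates physical, virtual, or cloud-based source servers and launches them as EC2 instances. It replaces the older AWS Server Migration Service (SMS), which migrated VMs and produced AMIs.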

- AWS Database Migration Service + Schema Conversion Tool

  - Aside from server and application migration, you should also know how to move on-premises databases to AWS, not just to RDS but to other services such as Aurora and Redshift.

  - Review which source and target database engines SCT can convert schemas between.

- AWS Serverless Application Model

  - AWS SAM has its own template syntax. Study the syntax and how AWS SAM is used to deploy serverless applications through code.

  - Know the relationship between SAM and CloudFormation. Hint: SAM is an extension of CloudFormation, and SAM templates are transformed into CloudFormation templates during deployment, so the two work together.

- AWS Systems Manager (formerly Amazon EC2 Systems Manager)

  - Study the different features under Systems Manager and how each feature can automate EC2-related processes. Patch Manager and Maintenance Windows are often used together to perform automated patching. Together they allow for easier setup and better control over patch baselines than running a cron job on an EC2 instance or scheduling with CloudWatch Events.

  - It is also important to know how you can troubleshoot EC2 issues using Systems Manager.

  - Parameter Store lets you securely store configuration data and secrets as strings (optionally encrypted with KMS as SecureString parameters), which can be retrieved anywhere in your environment. You can use it instead of AWS Secrets Manager if you don't need automatic secret rotation.

- AWS CI/CD – Study the different CI/CD tools in AWS, such as CodePipeline, CodeBuild, and CodeDeploy, from their functions and features to their implementation. It would be very helpful to build your own CI/CD pipeline with these services as well.

- AWS Service Catalog

  - This service is also part of the automation toolkit in AWS. Study how you can create and manage portfolios of approved products in Service Catalog, and how you can integrate them with other services such as AWS Organizations.

  - You can enforce tagging with Service Catalog (for example, through TagOptions), so users can only launch products that carry the tags you defined.

  - Know when Service Catalog is a better option for resource control than AWS CloudFormation. A good example is when you want to create a customized portfolio for each type of user in an organization and selectively grant access to the appropriate portfolio.

- AWS Direct Connect (DX)

  - You should have a deep understanding of this service. Questions commonly cover Direct Connect Gateway, public and private VIFs, and LAGs.

  - Direct Connect is commonly used to connect on-premises networks to AWS, but it can also connect VPCs in different AWS Regions to a central data center. For these kinds of scenarios, note the benefits of Direct Connect such as dedicated bandwidth, network security, and multi-Region and multi-VPC connection support.

  - Direct Connect is often paired with a failover connection, such as a secondary DX line or an IPsec VPN. The correct answer depends on specific requirements like cost, speed, and ease of management.

  - Another combination that can be used to link many VPCs to your on-premises network is Transit Gateway + DX.

- AWS CloudFormation – Your AWS exam might include a lot of scenarios that involve CloudFormation, so take note of the following:

  - You can use CloudFormation to enforce tagging by requiring users to launch resources only through CloudFormation stacks that apply your required tags.

  - CloudFormation can manage resources across different AWS accounts and Regions in an organization using StackSets.

  - CloudFormation is often compared to AWS Service Catalog and AWS SAM. To approach this in the exam, know which features CloudFormation supports that Service Catalog or SAM cannot provide in a similar fashion.

- Amazon VPC (in depth)

  - Know the ins and outs of NAT gateways and NAT instances, such as supported IP protocols and which types of packets are dropped when a connection is cut.

  - Study AWS Transit Gateway and how it can be used together with Direct Connect.

  - Remember longest prefix match routing.

  - Compare VPC peering with other options such as Site-to-Site VPN. Know which components are involved: customer gateway, virtual private gateway, etc.

- Amazon ECS

  - Differentiate the task role from the task execution role.

  - Compare the EC2 launch type (container instances you manage) with the serverless Fargate launch type.

  - Study how to link ECS and ECR with CI/CD tools to automate deployment of updates to your Docker containers.

- Elastic Load Balancer (in depth)

  - Differentiate the protocols supported by each type of load balancer for listeners and target groups: HTTP, HTTPS, TCP, UDP, and TLS.

  - Know how you can configure load balancers to forward the client IP to target instances (for example, the X-Forwarded-For header or Proxy Protocol).

  - Know how you can secure your ELB traffic with SSL/TLS and AWS WAF. SSL/TLS can be offloaded to either the ELB or AWS CloudHSM.

- Elastic Beanstalk

  - Study the different deployment options for Elastic Beanstalk.

  - Know the steps in performing a blue/green deployment.

  - Know how you can use traffic-splitting deployments to perform canary testing.

  - Compare Elastic Beanstalk's deployment options with CodeDeploy.

- WAF and Shield

  - Know which network layers WAF and Shield operate at.

  - Differentiate the security capabilities of WAF and Shield Advanced, especially with regard to DDoS protection. A good way to decide which one to use is to look at the services that need protection and whether cost is a factor. You may also visit this AWS documentation for additional details.

- Amazon WorkSpaces vs Amazon AppStream 2.0

  - WorkSpaces is best for virtual desktop environments. You can use it to provision Windows or Linux desktops in just a few minutes and quickly scale to thousands of desktops for workers across the globe.

  - AppStream 2.0 is best for streaming standalone desktop applications. You centrally manage your desktop applications on AppStream 2.0 and securely deliver them to any computer.

- Amazon WorkDocs – Know which features make WorkDocs unique compared to using S3 and EFS. Choose this service if you need secure document storage where you can collaborate with others in real time and manage access to the documents.

- ElastiCache vs DAX vs Aurora Read Replicas

  - Know your caching options, especially when it comes to databases.

  - If a caching feature is already integrated with the database (for example, DAX for DynamoDB), it is usually better to use that integrated feature for less overhead.

- Snowball Edge vs Direct Connect vs S3 Transfer Acceleration – These three services are heavily used for data migration. Read the exam scenario carefully to determine which service fits best. Factors in choosing the correct answer are cost, the time allotted for the migration, and how much data needs to be transferred.

- Using Resource Tags with IAM – Study how you can use resource tags to manage access via IAM policies.
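As a study aid for the SCP-versus-IAM point above, the Python sketch below models, in a deliberately simplified way, how the two combine for a principal in a member account: an explicit deny anywhere wins, every SCP level (root, OU, account) must allow the action, and an identity-based IAM policy must also allow it. It ignores resources, conditions, resource-based policies, permission boundaries, and session policies, so treat it as an assumption-laden model rather than the real IAM evaluation logic.

```python
from fnmatch import fnmatchcase

# A policy is modeled as a list of statements such as
# {"Effect": "Allow", "Action": ["ec2:*"]}. Resources and conditions are ignored.
Policy = list[dict]


def _matches(statement: dict, action: str) -> bool:
    """True if the statement's Action (string or list) matches the action."""
    patterns = statement["Action"]
    if isinstance(patterns, str):
        patterns = [patterns]
    # IAM action names are case-insensitive and support * and ? wildcards.
    return any(fnmatchcase(action.lower(), p.lower()) for p in patterns)


def _decision(policy: Policy, action: str) -> str:
    """Return 'explicit_deny', 'allow', or 'implicit_deny' for one policy."""
    if any(s["Effect"] == "Deny" and _matches(s, action) for s in policy):
        return "explicit_deny"
    if any(s["Effect"] == "Allow" and _matches(s, action) for s in policy):
        return "allow"
    return "implicit_deny"


def is_allowed(action: str, scp_levels: list[Policy], iam_policies: list[Policy]) -> bool:
    """Simplified effective-permission check for a member-account principal.

    scp_levels: the combined SCPs attached at each level (root, OU, account).
    iam_policies: identity-based policies attached to the principal.
    """
    if any(_decision(p, action) == "explicit_deny" for p in scp_levels + iam_policies):
        return False
    # Every level of the hierarchy must allow the action...
    scps_allow = all(_decision(p, action) == "allow" for p in scp_levels)
    # ...and an IAM policy must actually grant it; SCPs never grant access.
    iam_allows = any(_decision(p, action) == "allow" for p in iam_policies)
    return scps_allow and iam_allows
```

For example, with FullAWSAccess at the root and an OU-level SCP that also denies ec2:PurchaseReservedInstancesOffering, an administrator whose IAM policy allows "*" can still launch instances but cannot buy RIs, while a principal with no IAM policy can do nothing even though the SCPs allow everything.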

We also recommend checking out Tutorials Dojo’s AWS Cheat Sheets, which provide a summarized but highly informative set of notes and tips for reviewing these services. These cheat sheets are presented mostly as bullet points, which will help you retain the knowledge much better than reading lengthy FAQs.

We expect that you already have vast knowledge of the AWS services that a Solutions Architect commonly uses, such as those listed in our SA Associate review guide. It is also not enough to just know each service and its features. You should also understand how to integrate these services with one another to build large-scale infrastructures and applications. That is why hands-on experience managing and operating systems on AWS is generally recommended.

Common Exam Scenarios For SAP-C02

You have objects in an S3 bucket that have different retrieval frequencies. To optimize cost and retrieval times, what change should you make?

Use the S3 Intelligent-Tiering storage class. It automatically moves objects between access tiers (Frequent Access, Infrequent Access, and Archive Instant Access, plus optional archive tiers) when access patterns change, which makes it ideal for data with unknown or changing access patterns. What makes it relatively cost-effective is that S3 Intelligent-Tiering has no retrieval fees, unlike the S3 Standard-IA storage class; instead, it charges a small per-object monitoring and automation fee.

SAP-C02 Exam Domain 1: Design Solutions for Organizational Complexity

You are managing multiple accounts and you need to ensure that each account can only use the resources it is supposed to. What is a simple and reusable way of doing so?

AWS Organizations is a given here. It simplifies much of the account management and control you need for this scenario. For resource control, you may use AWS CloudFormation StackSets to define a specific stack and limit your developers to the created resources. You may also use AWS Service Catalog if you want to define specific product configurations or CloudFormation stacks and give your developers the freedom to deploy them. For permission control, a combination of IAM policies and SCPs should suffice.

You are creating a CloudFormation stack and uploading it to AWS Service Catalog so you can share it with other AWS accounts in your organization. How can your end users access the product/portfolio while still being granted least privilege?

Your end users require appropriate IAM permissions to access AWS Service Catalog and launch a CloudFormation stack. The AWSServiceCatalogEndUser

How can you give users in a different account access to resources in your account?

Use cross-account IAM roles and attach the permissions necessary to access your resources. Have the users in the other account assume this IAM role.
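A minimal sketch of the two policy documents involved, built as Python dicts. The trust policy goes on the role in your account; the second policy goes on the users in the other account so they may call sts:AssumeRole. The account IDs and the SharedResourceAccess role name are hypothetical placeholders, and the role's own permissions policy (what it may do with your resources) is not shown.

```python
import json


def cross_account_trust_policy(trusted_account_id: str) -> dict:
    """Trust policy for a role in YOUR account that principals in
    trusted_account_id are allowed to assume."""
    if not (len(trusted_account_id) == 12 and trusted_account_id.isdigit()):
        raise ValueError("AWS account IDs are 12-digit strings")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # ":root" delegates to the whole account; its admins then decide
                # which users may assume the role via their own IAM policies.
                "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def assume_role_permission(role_arn: str) -> dict:
    """Identity-based policy attached to the users in the OTHER account."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "sts:AssumeRole", "Resource": role_arn}
        ],
    }


if __name__ == "__main__":
    # Hypothetical IDs: 444455556666 owns the resources, 111122223333 is the other account.
    print(json.dumps(cross_account_trust_policy("111122223333"), indent=2))
    print(json.dumps(assume_role_permission(
        "arn:aws:iam::444455556666:role/SharedResourceAccess"), indent=2))
```

The users then obtain temporary credentials by calling AssumeRole, for example with boto3's `sts.assume_role(RoleArn=..., RoleSessionName=...)`.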

How do you share or link two networks together (VPCs, VPNs, routes, etc.)? What if your traffic has restrictions, e.g. it cannot traverse the public Internet?

Sharing or linking networks is a common theme in very large organizations, and doing it centrally helps ensure that your networks adhere to best practices at all times. For VPCs, you can use VPC sharing, VPC peering, or AWS Transit Gateway. For VPN connectivity, you can use Site-to-Site VPN for cross-Region or cross-account connections. For strict network compliance, you can access some of your AWS resources privately through shared VPC endpoints, so your traffic does not need to traverse the public Internet. More information on that can be found in this article.

You have multiple accounts under AWS Organizations. Previously, each account could purchase its own RIs, but now they have to request them from one central account for procurement. What steps should be taken to satisfy this requirement in the most secure way?

Ensure that all AWS accounts are part of an AWS Organizations structure operating in all features mode. Then create an SCP that contains a deny rule for the ec2:PurchaseReservedInstancesOffering and ec2:ModifyReservedInstances actions. Attach the SCP to each organizational unit (OU) of the AWS Organizations structure.
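A sketch of that SCP and of creating and attaching it with boto3's Organizations client, assuming the code runs in the management account. The policy name, description, and OU IDs are hypothetical. Note that SCPs never restrict the management account itself, so if the central procurement account is a member account, keep it in an OU that this SCP is not attached to.

```python
import json

# The SCP described in the solution: deny RI purchases and modifications.
DENY_RI_SCP = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyReservedInstancePurchases",
            "Effect": "Deny",
            "Action": [
                "ec2:PurchaseReservedInstancesOffering",
                "ec2:ModifyReservedInstances",
            ],
            "Resource": "*",
        }
    ],
}


def create_and_attach(org_client, target_ids):
    """Create the SCP and attach it to each OU ID in target_ids.

    org_client is expected to be boto3.client("organizations") running in
    the management account.
    """
    response = org_client.create_policy(
        Content=json.dumps(DENY_RI_SCP),
        Description="RIs are purchased centrally by the procurement account",
        Name="DenyReservedInstancePurchases",
        Type="SERVICE_CONTROL_POLICY",
    )
    policy_id = response["Policy"]["PolicySummary"]["Id"]
    for target_id in target_ids:
        org_client.attach_policy(PolicyId=policy_id, TargetId=target_id)
    return policy_id
```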

Can you connect multiple VPCs that belong to different AWS accounts and have overlapping CIDRs? If so, how can you manage your route tables so that traffic is routed to the correct VPC?

Yes, with a limit: a single VPC peering connection cannot join two VPCs whose CIDRs overlap, but one VPC can be peered with several VPCs whose CIDRs overlap with each other. What matters is understanding how routing works in AWS. AWS uses longest prefix matching to decide where traffic is delivered, so to make sure your traffic is routed properly, make your routes as specific as possible.
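To see longest prefix matching in action, here is a small Python model using the standard ipaddress module. The route table is a hypothetical one for VPC A (172.16.0.0/16), peered with VPCs B and C that both use 10.0.0.0/16; the pcx- values are placeholders, not real peering connection IDs.

```python
import ipaddress


def select_route(route_table: dict[str, str], destination: str):
    """Return the target of the most specific route that contains destination,
    or None if no route matches (the packet would be dropped)."""
    address = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table.items()
        if address in ipaddress.ip_network(cidr)
    ]
    if not candidates:
        return None
    # Longest prefix match: the largest prefix length is the most specific route.
    _, target = max(candidates, key=lambda c: c[0].prefixlen)
    return target


# Hypothetical route table for VPC A, peered with two overlapping VPCs.
vpc_a_routes = {
    "172.16.0.0/16": "local",
    "10.0.0.0/16": "pcx-cccc (VPC C)",
    "10.0.0.0/24": "pcx-bbbb (VPC B)",
}

print(select_route(vpc_a_routes, "10.0.0.25"))  # pcx-bbbb (VPC B)
print(select_route(vpc_a_routes, "10.0.7.10"))  # pcx-cccc (VPC C)
```

Traffic to 10.0.0.25 matches both the /16 and the /24 routes, so the more specific /24 wins and it goes to VPC B; anything else in 10.0.0.0/16 goes to VPC C.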

Members of a department need access to your AWS Management Console. Without creating an IAM user for each member, how can you provide long-term access?

You can use your on-premises SAML 2.0-compliant identity provider (IdP) to grant your members federated access to the AWS Management Console through the AWS sign-in SAML endpoint. This provides long-term access to the console as long as they can authenticate with the IdP.

Is it possible for one account to monitor all API actions executed by each member account in an AWS Organization? If so, how does it work?

You can configure AWS CloudTrail in the management account to create an organization trail that logs all events for all AWS accounts in the organization. When you create an organization trail, a trail with the name you give it is created in every AWS account that belongs to your organization. Users with CloudTrail permissions in member accounts can see this trail, but they do not have sufficient permissions to delete it, turn logging on or off, change which types of events are logged, or otherwise alter it in any way. When you create an organization trail in the console, or when you enable CloudTrail as a trusted service in AWS Organizations, a service-linked role named AWSServiceRoleForCloudTrail is created to perform logging tasks in your organization's member accounts; CloudTrail requires this role to log events for an organization. Log files created for an account before it was removed from the organization remain in the Amazon S3 bucket where the trail stores its log files.
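A minimal sketch of creating such an organization trail with boto3's CloudTrail client, assuming it runs in the management account of an organization with all features enabled and that the S3 bucket policy already allows CloudTrail to write the organization's logs. The trail and bucket names are hypothetical.

```python
def create_organization_trail(cloudtrail, trail_name: str, bucket_name: str) -> str:
    """Create a multi-Region organization trail and start logging.

    cloudtrail is expected to be boto3.client("cloudtrail") in the
    management account.
    """
    response = cloudtrail.create_trail(
        Name=trail_name,
        S3BucketName=bucket_name,
        IsMultiRegionTrail=True,
        IsOrganizationTrail=True,  # creates the trail in every member account
    )
    # Trails created through the API do not log until StartLogging is called.
    cloudtrail.start_logging(Name=trail_name)
    return response["TrailARN"]
```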

You have 50 accounts in your AWS Organization and need a central, internal DNS solution to help reduce network complexity. Each account has its own VPC that relies on the private DNS solution to resolve different AWS resources (servers, databases, AD domains, etc.). What is the least complex network architecture you can create?

Create a shared services VPC in your central account, and connect the other VPCs to it using VPC peering or AWS Transit Gateway. Set up a private hosted zone in Amazon Route 53 for your shared services VPC and add the necessary domains/subdomains. Associate the rest of the VPCs with this private hosted zone.
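Because the member VPCs live in other accounts, associating them with the central private hosted zone is a two-account handshake: the zone owner authorizes the association, the VPC owner creates it, and the authorization can then be deleted. A sketch with boto3 Route 53 clients, one per account (how you obtain them, for example through an assumed role, is left out); the hosted zone ID, VPC ID, and Region are hypothetical. You would run it once per member VPC.

```python
def associate_vpc_cross_account(zone_owner_route53, vpc_owner_route53,
                                hosted_zone_id: str, vpc_id: str,
                                vpc_region: str) -> None:
    """Associate a member account's VPC with the central private hosted zone."""
    vpc = {"VPCRegion": vpc_region, "VPCId": vpc_id}
    # 1. Central account (owns the private hosted zone) authorizes the VPC.
    zone_owner_route53.create_vpc_association_authorization(
        HostedZoneId=hosted_zone_id, VPC=vpc)
    # 2. Member account (owns the VPC) creates the association.
    vpc_owner_route53.associate_vpc_with_hosted_zone(
        HostedZoneId=hosted_zone_id, VPC=vpc)
    # 3. Remove the no-longer-needed authorization; the association remains.
    zone_owner_route53.delete_vpc_association_authorization(
        HostedZoneId=hosted_zone_id, VPC=vpc)
```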

How can you easily deploy a basic infrastructure to different AWS Regions while allowing your developers to optimize (but not delete) the launched infrastructure?

Use CloudFormation StackSets to deploy your infrastructure to different Regions, deploying the stack set from an administrator account. Create an IAM role that developers can assume so they can optimize the infrastructure. Make sure that the IAM role has a policy that denies deletion of CloudFormation-launched resources.

You have multiple VPCs in your organization using the same Direct Connect line to connect back to your corporate data center. This setup does not account for line failure, which would greatly affect the business if something happened to the network. How do you make the network more highly available? What if the VPCs span multiple Regions?

Set up a Site-to-Site VPN between the VPCs and your data center, terminate the VPN tunnels at a virtual private gateway, and use BGP routing so traffic can fail over automatically. Alternatively, if you require consistent network performance, provision a second Direct Connect line at another location, at the expense of additional cost. If the VPCs span multiple Regions, you can use a Direct Connect gateway.

SAP-C02 Exam Domain 2: Design for New Solutions

You have production instances running in the same account as your dev environment. Your developers occasionally stop or terminate production instances by mistake. How can you prevent this from happening?

Leverage resource tagging and create an IAM policy with an explicit deny that prevents developers from stopping or terminating instances tagged as production.
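A sketch of such a policy as a Python dict, assuming production instances carry a hypothetical Environment=production tag. The second statement is an extra hardening assumption beyond the solution above: it stops developers from removing or overwriting the tag on production instances and then terminating them.

```python
import json


def deny_production_changes(tag_key: str = "Environment",
                            tag_value: str = "production") -> dict:
    """Developer policy: explicit deny beats any allow, so these statements
    hold even if other policies grant broad EC2 access."""
    condition = {"StringEquals": {f"aws:ResourceTag/{tag_key}": tag_value}}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyStopTerminateProduction",
                "Effect": "Deny",
                "Action": ["ec2:StopInstances", "ec2:TerminateInstances"],
                "Resource": "arn:aws:ec2:*:*:instance/*",
                "Condition": condition,
            },
            {
                # Hardening assumption: block tag edits on production instances.
                "Sid": "DenyProductionTagChanges",
                "Effect": "Deny",
                "Action": ["ec2:CreateTags", "ec2:DeleteTags"],
                "Resource": "arn:aws:ec2:*:*:instance/*",
                "Condition": condition,
            },
        ],
    }


print(json.dumps(deny_production_changes(), indent=2))
```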

If you have documents that need to be collaborated on, and you also need strict access control over who can view and edit them, which service should you use?

AWS has a suite of services similar to Microsoft Office or G Suite, and one of them is Amazon WorkDocs, a fully managed, secure content creation, storage, and collaboration service.

How can you quickly scale your applications in AWS while keeping costs low?

While EC2 instances are a perfectly fine compute option, they tend to be pricey if they are not right-sized or if capacity consumption fluctuates. If you can, re-architect your applications to use containers or serverless compute options such as ECS, Fargate, Lambda, and API Gateway.

You would like to automate your application deployments and use blue/green deployment to properly test your updates. Code updates are submitted to an S3 bucket you own. You want a consistent environment where you can test your changes. Which services will help you fulfill this scenario?

Create a deployment pipeline using CodePipeline. Use AWS Lambda to invoke the stages in your pipeline. Use AWS CodeBuild to compile your code before sending it to an AWS Elastic Beanstalk blue environment. Have AWS CodeBuild test the update in the blue environment. Once testing succeeds, trigger AWS Lambda to swap the URLs between your blue and green Elastic Beanstalk environments. More information here.
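A sketch of the URL-swap Lambda function, assuming it runs as a CodePipeline Lambda invoke action. The environment names arrive through the action's UserParameters as JSON with hypothetical blue_env and green_env keys. The function swaps the CNAMEs with Elastic Beanstalk's SwapEnvironmentCNAMEs API and reports the result back to CodePipeline, which waits for that callback. Clients can be injected for testing.

```python
import json


def handler(event, context, eb=None, codepipeline=None):
    """Lambda invoke action: swap blue/green Elastic Beanstalk CNAMEs."""
    if eb is None or codepipeline is None:
        import boto3  # bundled with the AWS Lambda Python runtime

        eb = eb or boto3.client("elasticbeanstalk")
        codepipeline = codepipeline or boto3.client("codepipeline")

    job = event["CodePipeline.job"]
    job_id = job["id"]
    try:
        # Hypothetical UserParameters, e.g. {"blue_env": "...", "green_env": "..."}
        params = json.loads(
            job["data"]["actionConfiguration"]["configuration"]["UserParameters"])
        eb.swap_environment_cnames(
            SourceEnvironmentName=params["blue_env"],
            DestinationEnvironmentName=params["green_env"],
        )
    except Exception as exc:  # report any failure so the stage fails visibly
        codepipeline.put_job_failure_result(
            jobId=job_id,
            failureDetails={"type": "JobFailed", "message": str(exc)},
        )
        return "failed"
    # CodePipeline waits for this callback before moving past the action.
    codepipeline.put_job_success_result(jobId=job_id)
    return "swapped"
```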

Your company only allows the use of pre-approved AMIs for all your teams. However, users should not be prevented from launching unapproved AMIs, since that might break some of their automation. How can you monitor all launched EC2 instances to make sure they comply with your approved AMI list, and be informed when someone uses a noncompliant AMI?

Use AWS Config to monitor AMI compliance across all AWS accounts, and configure Amazon SNS to notify you when an EC2 instance is launched from an unapproved AMI. You can also use Amazon EventBridge to match each RunInstances event and trigger a Lambda function that cross-checks the AMI used against your approved list and sends a notification through SNS if it is unapproved. This gives you more information, such as who launched the instance.
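A sketch of the EventBridge + Lambda variant. The rule pattern matches RunInstances calls recorded by CloudTrail; the function compares the requested AMI IDs with an approved list and publishes an SNS alert naming the caller. The approved AMI ID and the ALERT_TOPIC_ARN environment variable are hypothetical, and launches that specify only a launch template (no imageId in the request) are not checked by this sketch.

```python
import json
import os

# EventBridge rule pattern: RunInstances API calls recorded by CloudTrail.
RUN_INSTANCES_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {"eventSource": ["ec2.amazonaws.com"], "eventName": ["RunInstances"]},
}

# Hypothetical approved list; in practice it could live in Parameter Store.
APPROVED_AMIS = {"ami-0123456789abcdef0"}


def unapproved_amis(event: dict, approved: set) -> list:
    """Return the AMI IDs in a RunInstances event that are not approved."""
    params = event.get("detail", {}).get("requestParameters") or {}
    items = params.get("instancesSet", {}).get("items", [])
    # Launch-template-only requests carry no imageId and are skipped here.
    image_ids = {item["imageId"] for item in items if "imageId" in item}
    return sorted(image_ids - approved)


def handler(event, context, sns=None):
    bad = unapproved_amis(event, APPROVED_AMIS)
    if bad:
        if sns is None:
            import boto3

            sns = boto3.client("sns")
        who = event.get("detail", {}).get("userIdentity", {}).get("arn", "unknown")
        sns.publish(
            TopicArn=os.environ["ALERT_TOPIC_ARN"],  # hypothetical env variable
            Subject="Unapproved AMI launched",
            Message=json.dumps({"launched_by": who, "unapproved_amis": bad}),
        )
    return bad
```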

How can you build a fully automated call center in AWS?

Utilize Amazon Connect, Amazon Lex, Amazon Polly, and AWS Lambda.

You have a large number of video files that are processed locally by your custom AI application for facial detection and recognition. These video files are kept in a tape library for long-term storage. Video metadata and timestamps of detected faces are stored in MongoDB. You decided to use AWS to enhance your operations, but the migration should cause minimal disruption to the existing process. What should your setup be?

Use AWS Storage Gateway Tape Gateway to store your video files in Amazon S3. Start importing the video files to your tape gateway after you've configured the appliance. Create a Lambda function that extracts the videos from Tape Gateway and forwards them to Amazon Rekognition. Use Amazon Rekognition for facial detection and timestamping. Once finished, have Rekognition trigger a Lambda function that stores the resulting information in Amazon DynamoDB.

Is it possible in AWS to enlist the help of other people to complete tasks that only humans can do?

Yes. You can submit tasks to Amazon Mechanical Turk and have people complete them in exchange for a fee.

You have a requirement to enforce HTTPS for all your connections, but you would like to offload SSL/TLS to a separate server to reduce the impact on application performance. Unfortunately, the Region you are using does not support AWS Certificate Manager (ACM). What can be your alternative?

You cannot use ACM from another Region for this purpose, since ACM is a regional service. Generate your own certificate and upload it to AWS IAM, then associate the imported certificate with an Elastic Load Balancer. More information here.

SAP-C02 Exam Domain 3: Accelerate Workload Migration and Modernization

You are using a database engine on premises that is not currently supported by RDS. If you want to bring your database to AWS, how do you migrate it?

AWS has two tools to help you migrate database workloads to the cloud: AWS Database Migration Service (DMS) and the AWS Schema Conversion Tool (SCT). First, collect information on your source database and have SCT convert your database schema and database code. You can check the supported source engines here. Once the conversion is finished, launch an RDS database, apply the converted schema, and use DMS to migrate your data safely.

You have thousands of applications running on premises that need to be migrated to AWS. However, they are tightly intertwined with each other and may cause issues if the dependencies are not mapped properly. How should you proceed?

Use AWS Application Discovery Service to collect server utilization data and perform dependency mapping. Then send the results to AWS Migration Hub, where you can initiate the migration of the discovered servers.

You have to migrate a large amount of data (TBs) to an S3 bucket over the weekend and you are not sure how to proceed. You have a 500 Mbps Direct Connect line from your corporate data center to AWS. You also have a 1 Gbps Internet connection. What should your mode of migration be?

You might consider using AWS Snow Family hardware for the migration, but the time constraint does not allow the hardware to be shipped in time, and your Direct Connect line is only 500 Mbps. You should instead enable S3 Transfer Acceleration and dedicate all your available Internet bandwidth to the data transfer.
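The arithmetic behind this choice, as a small Python helper. It assumes a hypothetical 10 TB dataset, decimal terabytes, and that about 80% of the nominal bandwidth is usable; adjust those to your scenario.

```python
def transfer_hours(terabytes: float, link_mbps: float, efficiency: float = 0.8) -> float:
    """Hours needed to move `terabytes` (decimal TB) over a link of `link_mbps`,
    assuming only `efficiency` of the nominal bandwidth is actually usable."""
    bits = terabytes * 1e12 * 8
    usable_bits_per_second = link_mbps * 1e6 * efficiency
    return bits / usable_bits_per_second / 3600


# Hypothetical 10 TB job; a weekend gives you roughly 48 to 60 hours.
for name, mbps in [("Direct Connect 500 Mbps", 500), ("Internet 1 Gbps", 1000)]:
    print(f"{name}: {transfer_hours(10, mbps):.1f} h")
```

Under these assumptions the 500 Mbps line needs about 55.6 hours, longer than a two-day weekend, while the 1 Gbps connection needs about 27.8 hours.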

You have a custom-built application that you'd like to migrate to AWS. Currently, you don't have enough manpower or money to rewrite the application to be cloud-optimized, but you would still like to optimize whatever you can. What should your migration strategy be?

Rehosting is out of the question, since no optimizations are done in a lift-and-shift scenario. Re-architecting is also out of the question, since you do not have the budget and manpower for it. You cannot retire or repurchase, since this is a custom production application. So your only option is to re-platform it, for example to take advantage of scaling and load balancing.

How can you use AWS as a cost-effective solution for offsite backups of mission-critical objects that have short RTO and RPO requirements?

For hybrid cloud architectures, you may use AWS Storage Gateway to continuously store file backups in Amazon S3. Since you have short RTO and RPO requirements, the best gateway type is File Gateway. File Gateway lets you mount Amazon S3 storage on your server as a file share, so you can quickly retrieve the files you need. Volume Gateway does not work here, since you would have to restore entire volumes before you can retrieve your files. Enable versioning on your S3 bucket to keep old copies of an object, and create S3 lifecycle policies to lower costs even further.

You have hundreds of EC2 Linux servers concurrently accessing files on your local NAS. The communication is kept private by AWS Direct Connect and an IPsec VPN. You notice that the NAS cannot sufficiently serve your EC2 instances, leading to huge slowdowns. You consider migrating to an AWS storage service as an alternative. Which service should you use, and how do you perform the migration?

Since you have hundreds of EC2 servers, the best storage for concurrent access would be Amazon EFS. To migrate your data to EFS, you can use AWS DataSync. Create a VPC endpoint for DataSync so that the data migration is performed quickly and privately over your Direct Connect connection.

If you have a piece of software (e.g. a CRM) that you want to bring to the cloud, and you have an allocated budget but not enough manpower to re-architect it, what is your next best option to make sure the software can still take advantage of the cloud?

Check AWS Marketplace for a similar product that you can use instead. This is the repurchasing strategy.

SAP-C02 Exam Domain 4: Continuous Improvement for Existing Solutions

You have a running EMR cluster that has erratic utilization, and task processing takes longer as time goes on. What can you do to keep costs to a minimum?

Add task nodes, using instance fleets with the master node On-Demand and a mix of On-Demand and Spot Instances for the core and task nodes. Purchase a Reserved Instance for the master node.

A company has multiple AWS accounts in an AWS Organization with all features enabled. How do you track AWS costs in Organizations and get alerted when costs from a business unit exceed a specific budget threshold?

Use Cost Explorer to monitor the spending of each account. Create a budget in AWS Budgets for each OU by grouping its linked accounts, then configure an SNS notification to alert you when the budget threshold is exceeded.

You have a serverless stack running for your mobile application (Lambda, API Gateway, DynamoDB). Your Lambda costs are getting expensive due to long wait times caused by high network latency when communicating with the SQL database in your on-premises environment. Only a VPN connects your VPC to your on-premises network. What steps can you take to reduce your costs?

If possible, migrate your database to AWS for lower latency. If this is not an option, consider purchasing a Direct Connect line with your VPN on top of it for a secure and fast network. Consider caching frequently retrieved results in API Gateway. Continuously monitor your Lambda execution time and reduce it gradually to an acceptable duration.

You have a set of EC2 instances behind a load balancer in an Auto Scaling group, and they connect to your RDS database. The VPC containing the instances uses NAT gateways to retrieve patches periodically. Everything is accessible only within the corporate network. What are some ways to lower your cost?

If your EC2 instances run production workloads, purchase Reserved Instances. If they do not, schedule the Auto Scaling group to scale in when the instances are not in use and scale out when you are about to use them. Consider a caching layer for your database reads if the same queries appear often. Consider using NAT instances instead, or better yet, remove the NAT gateways if you only use them for patching; you can easily create a new NAT instance or NAT gateway when you need one again.

You need to generate continuous database and server backups in your primary Region and have them available in your disaster recovery (DR) Region as well. Backups need to be available immediately in the primary Region, while the DR Region allows more leniency as long as backups can be restored within a few hours. Each backup is kept for only a month before it is deleted. A dedicated team conducts game days every week in the primary Region to test the backups. You need to keep storage costs as low as possible.

Store the backups in Amazon S3 Standard and configure cross-Region replication to an S3 bucket in the DR Region. Create a lifecycle policy in the DR Region to move the backups to S3 Glacier. S3 Standard-IA is not applicable, since objects must stay in S3 Standard for at least 30 days before a lifecycle rule can transition them to Standard-IA.

Determine the most cost-effective infrastructure:

You may use Amazon Kinesis Data Firehose to continuously stream the data into Amazon S3. Then configure AWS Batch with Spot pricing for your worker nodes. Use Amazon CloudWatch Events (now Amazon EventBridge) to schedule your jobs at night. More information here.

If you are concerned about the charges incurred by external users who frequently access your S3 objects, what change can you introduce to shift those charges to the users?

Ensure that the external users have their own AWS accounts. Enable Requester Pays on the S3 buckets, and create a bucket policy that grants these users read/write access to the buckets.
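Here is a minimal sketch of the two pieces, expressed as the data you would send rather than live calls. The bucket name and the external account IDs are hypothetical; the first dict matches boto3's `put_bucket_request_payment` arguments, and the second is a standard S3 bucket policy document that grants object read/write access.

```python
import json

# Hypothetical bucket name and external account IDs.
BUCKET = "example-shared-datasets"
EXTERNAL_ACCOUNTS = ["444455556666", "777788889999"]

# Arguments for boto3's s3.put_bucket_request_payment(): the requester, not
# the bucket owner, pays for requests and data transfer.
request_payment = {"Bucket": BUCKET, "RequestPaymentConfiguration": {"Payer": "Requester"}}

# Bucket policy granting the external accounts read/write access to objects.
bucket_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ExternalAccountsReadWrite",
            "Effect": "Allow",
            "Principal": {"AWS": [f"arn:aws:iam::{acct}:root" for acct in EXTERNAL_ACCOUNTS]},
            "Action": ["s3:GetObject", "s3:PutObject"],
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
        }
    ],
}

print(json.dumps(request_payment))
print(json.dumps(bucket_policy, indent=2))
# Requesters must acknowledge the charges on every call, e.g. with boto3:
#   s3.get_object(Bucket=BUCKET, Key="data.csv", RequestPayer="requester")
```

Because Requester Pays needs an AWS account to bill, anonymous requests are not accepted, which is why the external users need their own accounts.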

You have a Direct Connect connection from an AWS partner data center to your on-premises data center. Web servers run on EC2, and they connect back to your on-premises databases/data warehouse. How can you increase the reliability of your connection?

There are multiple ways to increase the reliability of your network connection. You can order a second Direct Connect connection for redundancy, which AWS recommends for critical workloads. You may also create an IPsec VPN connection over the public Internet as a backup, but that requires additional configuration since you need to monitor the health of both network paths.
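To see why a redundant path helps so much, here is a small worked calculation. It assumes the two links fail independently, which is an idealization: two connections that share a location, device, or provider share failure modes, which is why AWS recommends spreading connections across locations for critical workloads. The 99.9% figure is hypothetical.

```python
def combined_availability(*link_availabilities):
    """Availability of redundant paths, assuming their failures are independent.

    The connection is down only when every path is down at the same time,
    so A_total = 1 - product(1 - A_i).
    """
    all_down = 1.0
    for a in link_availabilities:
        all_down *= 1.0 - a
    return 1.0 - all_down


single = 0.999  # hypothetical availability of one link
print(f"one link:  {combined_availability(single):.4%}")
print(f"two links: {combined_availability(single, single):.4%}")
```

Under these assumptions, expected downtime drops from about 8.8 hours per year with one link to roughly 30 seconds per year with two.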

You have a set of instances behind a Network Load Balancer and an Auto Scaling group. What changes should you make to protect your instances from DDoS attacks?

Since AWS WAF does not integrate with NLB directly, you can create a CloudFront distribution that uses your NLB as the origin and attach the WAF web ACL to the distribution. You can also enable AWS Shield Advanced to get its enhanced DDoS protection features.
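A minimal sketch of a web ACL for the CloudFront distribution is shown below as keyword arguments for boto3's `wafv2.create_web_acl`. The ACL name, the metric names, and the 2,000-request limit are assumptions; a rate-based rule is just one common DDoS mitigation you could attach, not the only option.

```python
import json


def cloudfront_web_acl(name, requests_per_window):
    """Kwargs for boto3's wafv2.create_web_acl() with one rate-based rule.

    Scope "CLOUDFRONT" is required for web ACLs attached to a CloudFront
    distribution, and such web ACLs must be created in us-east-1.
    The rule blocks any single client IP that exceeds the limit within
    the rate-based rule's evaluation window (five minutes by default).
    """
    def visibility(metric):
        return {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": metric,
        }

    return {
        "Name": name,
        "Scope": "CLOUDFRONT",
        "DefaultAction": {"Allow": {}},
        "Rules": [
            {
                "Name": "rate-limit-per-ip",
                "Priority": 0,
                "Statement": {
                    "RateBasedStatement": {
                        "Limit": requests_per_window,
                        "AggregateKeyType": "IP",
                    }
                },
                "Action": {"Block": {}},
                "VisibilityConfig": visibility("rateLimitPerIp"),
            }
        ],
        "VisibilityConfig": visibility(name),
    }


acl = cloudfront_web_acl("nlb-origin-protection", requests_per_window=2000)
print(json.dumps(acl, indent=2))
```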

You have a critical production workload (servers + databases) running in one region, and your RTO is 5 minutes while your RPO is 15 minutes. What is your most cost-efficient disaster recovery option?

If you choose warm standby, make sure that the DR infrastructure can automatically detect failure of the primary infrastructure (through health checks), automatically scale up/scale out (Auto Scaling + scripts), and perform an immediate failover (Route 53 failover routing) in response. If the warm standby option does not state that it can do so, you might not be able to meet your RTO/RPO, which means you must use a multi-site DR solution instead, even though it is more costly.
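The failover routing part of warm standby can be sketched as a Route 53 change batch with a PRIMARY record tied to a health check and a SECONDARY record pointing at the standby. The domain, IP addresses, and health check ID below are hypothetical, and the sketch does not cover creating the health check or scaling out the standby.

```python
import json

# Hypothetical domain, endpoint IPs (documentation ranges), and health check ID.
RECORD_NAME = "app.example.com."
PRIMARY_IP = "198.51.100.10"
STANDBY_IP = "203.0.113.20"
PRIMARY_HEALTH_CHECK_ID = "11111111-2222-3333-4444-555555555555"


def failover_record(role, ip, health_check_id=None):
    """One ResourceRecordSet for Route 53 failover routing (role = PRIMARY/SECONDARY)."""
    record = {
        "Name": RECORD_NAME,
        "Type": "A",
        "SetIdentifier": f"{role.lower()}-site",
        "Failover": role,
        "TTL": 60,  # a short TTL lets resolvers pick up the failover quickly
        "ResourceRecords": [{"Value": ip}],
    }
    if health_check_id:
        record["HealthCheckId"] = health_check_id
    return record


change_batch = {
    "Comment": "Fail over to the warm standby when the primary health check fails",
    "Changes": [
        {"Action": "UPSERT", "ResourceRecordSet": failover_record("PRIMARY", PRIMARY_IP, PRIMARY_HEALTH_CHECK_ID)},
        {"Action": "UPSERT", "ResourceRecordSet": failover_record("SECONDARY", STANDBY_IP)},
    ],
}
print(json.dumps(change_batch, indent=2))
# Could be applied with:
#   boto3.client("route53").change_resource_record_sets(
#       HostedZoneId="<your zone id>", ChangeBatch=change_batch)
```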

You use RDS to store data collected by hundreds of IoT robots. You know that these robots can produce up to tens of KB of data per minute. The number of robots is expected to keep increasing over the next few years, so database storage must be able to scale to handle the incoming data and the IOPS required to maintain performance. How can you re-architect your solution to better suit this upcoming growth?

Instead of using a transactional database, consider a data warehousing solution such as Amazon Redshift. That way, your data storage can scale much larger without a significant hit to database performance.
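A back-of-the-envelope estimate shows why storage growth drives this decision. The figures below are hypothetical values chosen within the scenario's "hundreds of robots" and "tens of KB per minute", interpreted as per robot, and the result uses decimal units.

```python
def storage_growth_gb(robots, kb_per_robot_per_min, days):
    """Raw data volume in GB (decimal units, 1 GB = 1,000,000 KB)."""
    kb_total = robots * kb_per_robot_per_min * 60 * 24 * days
    return kb_total / 1_000_000


# Hypothetical figures: 500 robots, 50 KB per robot per minute.
per_day = storage_growth_gb(500, 50, 1)
per_year = storage_growth_gb(500, 50, 365)
print(f"{per_day:.0f} GB/day, {per_year / 1000:.2f} TB/year")
# → 36 GB/day, 13.14 TB/year
```

Since the volume scales linearly with the number of robots, doubling the fleet doubles these numbers, and that is before indexes, replicas, or backups are counted.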

You have a stream of data coming into your AWS environment from multiple sensors around the world. You need real-time processing for this data, and you have to make sure that the records are processed in the order in which they arrived. What should your architecture be?

One might consider using SQS FIFO for this scenario, but since it also requires real-time processing capabilities, Amazon Kinesis Data Streams is a better solution. You can give related records the same partition key so that they are routed to, and processed in order from, the same Kinesis shard, thereby giving you FIFO-like ordering.
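The routing behind this is that Kinesis maps each partition key to a 128-bit integer using an MD5 hash, and each shard owns a contiguous range of those hash keys. The sketch below imitates that mapping under a simplifying assumption: the shards split the hash key space into equal ranges, whereas real shard ranges come from the stream itself (for example, via ListShards). The sensor IDs are hypothetical.

```python
import hashlib

MAX_HASH = 2**128  # Kinesis hash keys span 0 .. 2**128 - 1


def hash_key(partition_key):
    """Map a partition key to a 128-bit integer with MD5, as Kinesis does."""
    return int.from_bytes(hashlib.md5(partition_key.encode("utf-8")).digest(), "big")


def shard_for(partition_key, shard_count):
    """Index of the shard that owns the key, ASSUMING equal, contiguous
    hash key ranges per shard (a simplification of real shard layouts)."""
    return hash_key(partition_key) * shard_count // MAX_HASH


# Every record from the same sensor uses the sensor ID as its partition key,
# so all of its records land on one shard and keep their arrival order there.
for sensor in ["sensor-eu-01", "sensor-us-07", "sensor-eu-01"]:
    print(sensor, "-> shard", shard_for(sensor, shard_count=4))
```

Ordering is only guaranteed within a shard, so records with different partition keys may be processed in any relative order.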

You want to use your AWS Direct Connect connection to access S3 and DynamoDB endpoints while using your Internet provider for other types of traffic. How should you configure this?

Create a public virtual interface on your AWS Direct Connect connection. Advertise specific routes for your network to AWS so that S3 and DynamoDB traffic passes through your AWS Direct Connect connection.
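Over a public virtual interface, AWS advertises its public prefixes to you via BGP, and AWS also publishes its address ranges per service in the `ip-ranges.json` file. The sketch below filters a simplified excerpt in that file's shape to list the S3 and DynamoDB prefixes for one region, for example as input to a route filter on your edge router (an assumed use). The prefixes are documentation ranges used as placeholders, not real AWS ranges, and the real file contains more fields per entry.

```python
import json

# Simplified excerpt in the shape of AWS's ip-ranges.json. The prefixes are
# documentation ranges used as placeholders, not real AWS ranges.
SAMPLE_IP_RANGES = json.loads("""
{
  "prefixes": [
    {"ip_prefix": "192.0.2.0/24",    "region": "us-east-1", "service": "S3"},
    {"ip_prefix": "198.51.100.0/24", "region": "us-east-1", "service": "DYNAMODB"},
    {"ip_prefix": "203.0.113.0/24",  "region": "us-east-1", "service": "EC2"},
    {"ip_prefix": "192.0.2.128/25",  "region": "eu-west-1", "service": "S3"}
  ]
}
""")


def prefixes_for(ip_ranges, services, region):
    """Return the sorted, de-duplicated prefixes of the given services in one region."""
    return sorted(
        {
            p["ip_prefix"]
            for p in ip_ranges["prefixes"]
            if p["service"] in services and p["region"] == region
        }
    )


dx_prefixes = prefixes_for(SAMPLE_IP_RANGES, {"S3", "DYNAMODB"}, "us-east-1")
print(dx_prefixes)
# → ['192.0.2.0/24', '198.51.100.0/24']
```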

You have a web application that uses CloudFront to cache frequently accessed objects. However, parts of the application are reportedly slow in some countries. What cost-effective improvement can you make?

Use Lambda@Edge to run parts of the application closer to the users.
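As an illustration, here is a minimal Lambda@Edge viewer-request handler written for the Python runtime. It answers a hypothetical lightweight `/api/config` endpoint directly at the edge location and passes every other request through to the cache or origin unchanged. The path and the response body are assumptions; the event and response shapes follow the CloudFront event structure that Lambda@Edge receives.

```python
import json


def handler(event, context):
    """Lambda@Edge viewer-request handler.

    Requests for the hypothetical /api/config path are answered at the
    edge; all other requests are returned unchanged so CloudFront
    continues processing them.
    """
    request = event["Records"][0]["cf"]["request"]
    if request["uri"] == "/api/config":
        body = json.dumps({"feature_flags": {"new_checkout": True}})
        return {
            "status": "200",
            "statusDescription": "OK",
            "headers": {
                "content-type": [{"key": "Content-Type", "value": "application/json"}],
                "cache-control": [{"key": "Cache-Control", "value": "max-age=60"}],
            },
            "body": body,
        }
    return request


# Local usage with a minimal mock CloudFront event.
def mock_event(uri):
    return {"Records": [{"cf": {"request": {"uri": uri, "method": "GET", "headers": {}}}}]}


print(handler(mock_event("/api/config"), None)["status"])  # → 200
print(handler(mock_event("/index.html"), None)["uri"])     # → /index.html
```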

If you are running Amazon Redshift and you have tight RTO and RPO requirements, what improvement can you make so that your Amazon Redshift cluster is more highly available and durable in case of a regional disaster?

Amazon Redshift allows you to copy snapshots to other regions by enabling cross-region snapshot copy. Automated snapshots to S3 are created on active clusters about every 8 hours or after every 5 GB per node of data changes, whichever comes first. Depending on the snapshot schedule configured on the primary cluster, snapshots can either be time-based or triggered by data changes, and any new snapshots are then automatically copied to the secondary/DR region.
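The default automated snapshot trigger described above can be modeled as a simple predicate, paired with the keyword arguments for boto3's `redshift.enable_snapshot_copy`. The model is a simplification of the approximate default behavior, and the cluster name, destination region, and 7-day retention are hypothetical values.

```python
def automated_snapshot_due(hours_since_last, gb_changed_since_last, node_count):
    """Simplified model of the default Redshift automated snapshot trigger:
    about every 8 hours or after 5 GB per node of changed data,
    whichever comes first."""
    return hours_since_last >= 8 or gb_changed_since_last >= 5 * node_count


# Kwargs for boto3's redshift.enable_snapshot_copy(); all values are hypothetical.
snapshot_copy = {
    "ClusterIdentifier": "analytics-cluster",
    "DestinationRegion": "us-west-2",
    "RetentionPeriod": 7,  # days to keep copied automated snapshots in the DR region
}

print(automated_snapshot_due(3, 12, node_count=2))  # → True (12 GB >= 10 GB)
print(automated_snapshot_due(3, 4, node_count=2))   # → False
print(snapshot_copy)
```

The model also makes the RPO trade-off visible: on a quiet cluster, the worst-case gap between snapshots approaches the 8-hour timer, so a tight RPO may call for a custom snapshot schedule.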

You have multiple EC2 instances distributed across different AZs depending on their function, and each AZ has its own m5.large NAT instance. A set of EC2 instances in one AZ occasionally cannot reach an API external to AWS when there is a high volume of traffic. This is unacceptable for your organization. What is the most cost-effective solution to your problem?

Transition your NAT instances to NAT gateways, which scale their bandwidth automatically and provide higher network throughput. Resizing the NAT instance to a larger instance type is no longer cost-effective, since network performance increases only gradually as you move up instance sizes. Adding more NAT instances to a single AZ makes your environment too complex.

Most of your vendors’ applications use IPv4 to communicate with your private AWS resources. However, a newly acquired vendor will only support IPv6. You will create a new VPC dedicated to this vendor, and you need to make sure that all of your private EC2 instances can communicate using IPv6. What do you need to configure?

Associate an IPv6 CIDR block with the VPC and its subnets, and provide your EC2 instances with IPv6 addresses. Create security groups that allow IPv6 addresses for inbound and outbound traffic. Create an egress-only Internet gateway and add a ::/0 route to it in the private subnets' route table so that your private instances can reach the vendor.
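A minimal sketch of the addressing and routing pieces follows. It uses Python's `ipaddress` module to carve a /64 subnet from a hypothetical /56 VPC block (the size of an Amazon-provided IPv6 CIDR), then builds boto3-style keyword arguments for `ec2.create_route` and an `IpPermissions` entry for `ec2.authorize_security_group_egress`. All IDs and the vendor prefix are hypothetical, and the addresses come from the IPv6 documentation range.

```python
import ipaddress

# Hypothetical /56 block (documentation prefix) associated with the VPC.
VPC_IPV6_BLOCK = ipaddress.IPv6Network("2001:db8:1234:1a00::/56")
EIGW_ID = "eigw-0123456789abcdef0"  # hypothetical egress-only IGW ID

# Each subnet typically gets a /64 carved from the VPC's /56.
private_subnet = next(VPC_IPV6_BLOCK.subnets(new_prefix=64))

# Kwargs for boto3's ec2.create_route(): send all IPv6 traffic to the
# egress-only internet gateway (outbound-initiated connections only).
route = {
    "RouteTableId": "rtb-0123456789abcdef0",  # hypothetical
    "DestinationIpv6CidrBlock": "::/0",
    "EgressOnlyInternetGatewayId": EIGW_ID,
}

# IpPermissions entry for ec2.authorize_security_group_egress(): allow HTTPS
# to the vendor's IPv6 range (hypothetical placeholder prefix).
https_to_vendor = {
    "IpProtocol": "tcp",
    "FromPort": 443,
    "ToPort": 443,
    "Ipv6Ranges": [{"CidrIpv6": "2001:db8:ffff::/48", "Description": "vendor"}],
}

print(private_subnet)  # → 2001:db8:1234:1a00::/64
print(route)
print(https_to_vendor)
```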

Validate Your AWS Certified Solutions Architect Professional SAP-C02 Exam Readiness

After your review, you should take some practice tests to measure your preparedness for the real exam. AWS offers a free sample practice test, which you can find here. You can also opt to buy the longer AWS sample practice test at aws.training and use the discount coupon you received from any previously taken certification exam. Be aware, though, that the sample practice tests do not mimic the difficulty of the real SA Pro exam, so you should not rely solely on them to gauge your preparedness. Taking more practice tests is a better way to find out whether you are ready to pass the certification exam.

Fortunately, Tutorials Dojo also offers a great set of practice questions for you to take here. It is kept updated by the creators to ensure that the questions match what you’ll be expecting in the real exam. The practice tests will help fill in any important details that you might have missed or skipped in your review. You can also pair our practice exams with our AWS Certified Solutions Architect Professional Study Guide eBook to further help in your exam preparations.

Sample Practice Test Questions:

Question 1

A data analytics startup has been chosen to develop a data analytics system that will track all statistics in the Fédération Internationale de Football Association (FIFA) World Cup, which will also be used by other third-party analytics sites. The system will record, store, and provide statistical data reports about the top scorers, goal scores for each team, average goals, average passes, average yellow/red cards per match, and many other details. FIFA fans all over the world will frequently access the statistics reports every day, so the data should be durably stored, highly available, and highly scalable. In addition, the data analytics system will allow users to vote for the best male and female FIFA player as well as the best male and female coach. Due to the popularity of the FIFA World Cup event, it is projected that there will be over 10 million queries on game day, which could spike to 30 million queries over time.

Which of the following is the most cost-effective solution that will meet these requirements?

Option 1:

- Launch a MySQL database in Multi-AZ RDS deployments configuration with Read Replicas.

- Generate the FIFA reports by querying the Read Replica.

- Configure a daily job that performs a daily table cleanup.

Option 2:

- Launch a MySQL database in Multi-AZ RDS deployments configuration.

- Configure the application to generate reports from ElastiCache to improve the read performance of the system.

- Utilize the default expire parameter for items in ElastiCache.

Option 3:

- Generate the FIFA reports from MySQL database in Multi-AZ RDS deployments configuration with Read Replicas.

- Set up a batch job that puts reports in an S3 bucket.

- Launch a CloudFront distribution to cache the content with a TTL set to expire objects daily.

Option 4:

- Launch a Multi-AZ MySQL RDS instance.

- Query the RDS instance and store the results in a DynamoDB table.

- Generate reports from DynamoDB table.

- Delete the old DynamoDB tables every day.

Question 2

A company provides big data services to enterprise clients around the globe. One of the clients has 60 TB of raw data in their on-premises Oracle data warehouse. The data is to be migrated to Amazon Redshift. However, the database receives minor updates on a daily basis, while major updates are scheduled at the end of every month. The migration process must be completed within approximately 30 days, before the next major update on the Redshift database. The company can only allocate 50 Mbps of Internet bandwidth for this activity to avoid impacting business operations.

Which of the following actions will satisfy the migration requirements of the company while keeping the costs low?

- Create a new Oracle Database on Amazon RDS. Configure Site-to-Site VPN connection from the on-premises data center to the Amazon VPC. Configure replication from the on-premises database to Amazon RDS. Once replication is complete, create an AWS Schema Conversion Tool (SCT) project with AWS DMS task to migrate the Oracle database to Amazon Redshift. Monitor and verify if the data migration is complete before the cut-over.

- Create an AWS Snowball Edge job using the AWS Snowball console. Export all data from the Oracle data warehouse to the Snowball Edge device. Once the Snowball device is returned to Amazon and data is imported to an S3 bucket, create an Oracle RDS instance to import the data. Create an AWS Schema Conversion Tool (SCT) project with AWS DMS task to migrate the Oracle database to Amazon Redshift. Copy the missing daily updates from Oracle in the data center to the RDS for Oracle database over the Internet. Monitor and verify if the data migration is complete before the cut-over.

- Since you have a 30-day window for migration, configure VPN connectivity between AWS and the company’s data center by provisioning a 1 Gbps AWS Direct Connect connection. Launch an Oracle Real Application Clusters (RAC) database on an EC2 instance and set it up to fetch and synchronize the data from the on-premises Oracle database. Once replication is complete, create an AWS DMS task on an AWS SCT project to migrate the Oracle database to Amazon Redshift. Monitor and verify if the data migration is complete before the cut-over.

- Create an AWS Snowball import job to request a Snowball Edge device. Use the AWS Schema Conversion Tool (SCT) to process the on-premises data warehouse and load it to the Snowball Edge device. Install the extraction agent on a separate on-premises server and register it with AWS SCT. Once the Snowball Edge imports data to the S3 bucket, use AWS SCT to migrate the data to Amazon Redshift. Configure a local task and AWS DMS task to replicate the ongoing updates to the data warehouse. Monitor and verify that the data migration is complete.

Click here for more AWS Certified Solutions Architect Professional practice exam questions.

Additional Training Materials for the AWS Certified Solutions Architect Professional Exam

There are a few top-rated AWS Certified Solutions Architect Professional video courses that you can check out as well, which can help in your exam preparations. The list below is constantly updated based on feedback from our students on which course/s helped them the most during their exams.

- AWS Certified Solutions Architect Professional by Adrian Cantrill

- Courses from the AWS Skill Builder site, which include:

  - Standard Exam Prep Plan – only free resources; and

  - Enhanced Exam Prep Plan – includes additional content for AWS Skill Builder subscribers, such as AWS Builder Labs, game-based learning, Official Pretests, and more exam-style questions.

- Andrew Brown’s Free SAP-C02 Course on freeCodeCamp YouTube

  - Another great resource that we recommend for your AWS Certified Solutions Architect – Professional SAP-C02 exam preparation is Andrew Brown’s SAP-C02 course on the freeCodeCamp YouTube channel. Andrew Brown is the CEO of ExamPro and an AWS Community Hero. His informative 70-hour course is free to watch.

Based on feedback from our students, any one of these video courses, combined with our practice test course and our AWS Certified Solutions Architect Professional Study Guide eBook, was enough to pass this tough exam.

In general, what you should have learned from your review are the following:

- Features and use cases of the AWS services and how they integrate with each other

- AWS networking, security, billing and account management

- The AWS CLI, APIs and SDKs

- Automation, migration planning, and troubleshooting

- The best practices in designing solutions in the AWS Cloud

- Building CI/CD solutions using different platforms

- Resource management in a multi-account organization

- Multi-level security

All these factors are essentially the domains of your certification exam. It is because of this difficult hurdle that AWS Certified Solutions Architect Professionals are highly respected in the industry. They are capable of architecting ingenious solutions that solve customer problems in AWS. They also constantly improve themselves by learning the new services and features that AWS releases each year, so that they can provide the best solutions to their customers. Let this challenge be your motivation to dream high and strive further in your career as a Solutions Architect!

Final Notes About the AWS Certified Solutions Architect Professional SAP-C02 Exam

The SA Professional exam questions always ask for highly available, fault-tolerant, cost-effective, and secure solutions. Be sure to understand the choices provided to you, and verify that each one is technically accurate. Some choices are very misleading: they seem to be the most natural answer to the question but actually contain incorrect information, such as the incorrect use of a service. Always place accuracy above all else.

When unsure which options are correct in a multi-select question, try to eliminate the choices that you believe are false. This helps narrow down the feasible answers to that question, and the same approach works for multiple-choice questions. Be extra careful to select exactly the number of answers that the question asks for.

Since an SA Professional is responsible for creating large-scale architectures, be familiar with the different ways AWS services can be integrated with one another. Common combinations include:

- Lambda, API Gateway, SNS, and DynamoDB

- EC2, EBS/EFS/ElastiCache, Auto Scaling, ELB, and SQS

- S3, CloudFront, and WAF

- S3 and Kinesis

- On-premises servers with Direct Connect/VPN/VPC Endpoints/Transit Gateway

- Organizations, IAM Identity Center (formerly AWS SSO), IAM roles, Config, CloudFormation, and Service Catalog

- Mobile apps with Cognito, API Gateway, and DynamoDB

- CodeCommit, CodePipeline, CodeBuild, CodeDeploy

- ECR, ECS/Fargate and S3

- EMR + Spot Fleets/Combinations of different instance types for master node and task nodes

- Amazon Connect + Alexa + Amazon Lex

Lastly, be on the lookout for “key terms” that will help you identify the answer faster. Words such as millisecond latency, serverless, managed, highly available, most cost-effective, fault-tolerant, mobile, streaming, object storage, archival, polling, push notifications, etc. are commonly seen in the exam. Time management is very important when taking AWS certification exams, so be sure to monitor the time you spend on each question.