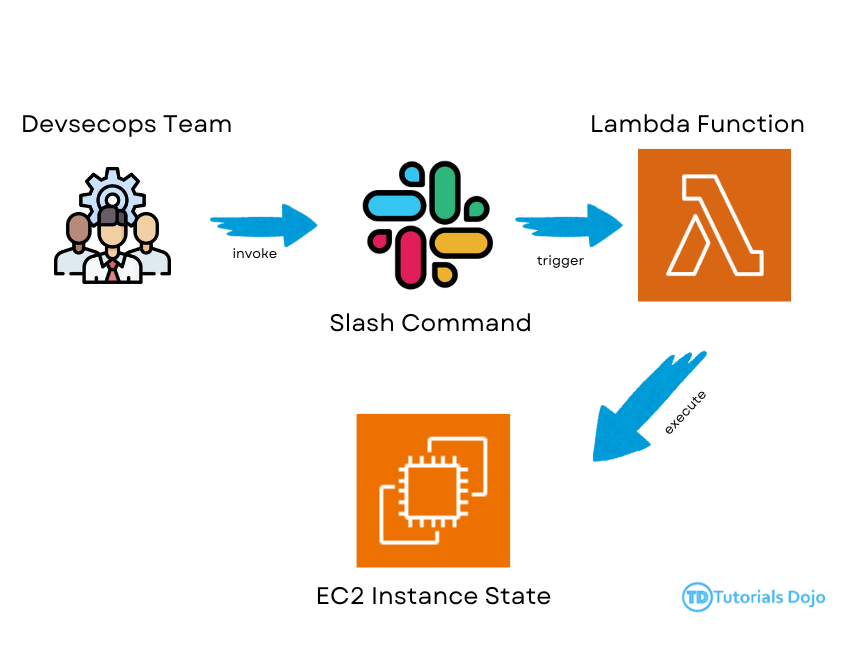

Utilizing Lambda Functions to Control Amazon EC2 Instances via Slack

Nikee Tomas, 2024-04-11

Managing and controlling resources is crucial in cloud computing. Amazon Web Services (AWS) offers many services, including Amazon Elastic Compute Cloud (EC2), which provides scalable computing capacity in the cloud. Alongside AWS services, communication and collaboration tools like Slack have become integral to modern workflows. This article explores how to integrate AWS Lambda functions with Slack so that users can reboot, stop, and start EC2 instances directly from Slack channels. Combining Lambda functions with AWS services and Slack's messaging platform lets a team carry out routine operational tasks from the same channels where it already collaborates, which streamlines operations and improves productivity.

Setting Up [...]
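The core idea described above is a Lambda function that turns a Slack message into an EC2 start, stop, or reboot call. Here is a minimal sketch under stated assumptions. It assumes a Slack slash command (for example a hypothetical `/ec2`) whose request URL points at the function through API Gateway or a Lambda function URL. It also assumes the command text follows a hypothetical `<action> <instance-id> ...` format. The helper names `parse_slash_command` and `handle_command` are illustrative. The boto3 calls `start_instances`, `stop_instances`, and `reboot_instances` are real EC2 client methods. The function's IAM role would need the matching `ec2:StartInstances`, `ec2:StopInstances`, and `ec2:RebootInstances` permissions. Verification of Slack's request signature is omitted for brevity but should be added before real use.

```python
import base64
import json
from urllib.parse import parse_qs

# Hypothetical sub-commands mapped to real boto3 EC2 client method names.
ACTIONS = {
    "start": "start_instances",
    "stop": "stop_instances",
    "reboot": "reboot_instances",
}


def parse_slash_command(event):
    """Return the `text` field of a Slack slash-command request.

    Slack posts slash commands as application/x-www-form-urlencoded data;
    API Gateway and Lambda function URLs may base64-encode that body.
    """
    body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")
    fields = parse_qs(body)
    return fields.get("text", [""])[0].strip()


def handle_command(text, ec2):
    """Request '<action> <instance-id> [...]' (assumed format); return a reply."""
    parts = text.split()
    if len(parts) < 2 or parts[0].lower() not in ACTIONS:
        return "Usage: start|stop|reboot <instance-id> [<instance-id> ...]"
    action, instance_ids = parts[0].lower(), parts[1:]
    # e.g. ec2.reboot_instances(InstanceIds=["i-0123..."])
    getattr(ec2, ACTIONS[action])(InstanceIds=instance_ids)
    return f"{action} requested for {', '.join(instance_ids)}"


def lambda_handler(event, context, ec2=None):
    """Lambda entry point; `ec2` can be injected for local testing."""
    if ec2 is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        ec2 = boto3.client("ec2")
    reply = handle_command(parse_slash_command(event), ec2)
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        # "in_channel" makes the reply visible to everyone in the channel.
        "body": json.dumps({"response_type": "in_channel", "text": reply}),
    }
```

With this sketch, typing `/ec2 reboot i-0123456789abcdef0` in a channel would ask EC2 to reboot that instance and post a confirmation back. The EC2 client is passed in as an optional argument so the parsing and dispatch logic can be exercised with a stub, without AWS credentials.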