Did you know? Big tech companies, fast-growing startups, and even solo developers already use Amazon Bedrock to power their AI apps, from chatbots to content generators. But there’s one habit they all share to keep things cost-efficient and fast: smart token management.

Whether you’re building an AI-powered app or experimenting with GenAI for the first time, understanding how tokens work is the key to saving money and boosting performance in Amazon Bedrock.

Let’s break it all down simply: no deep tech talk, just practical tips that work.

What Are Tokens in Amazon Bedrock?

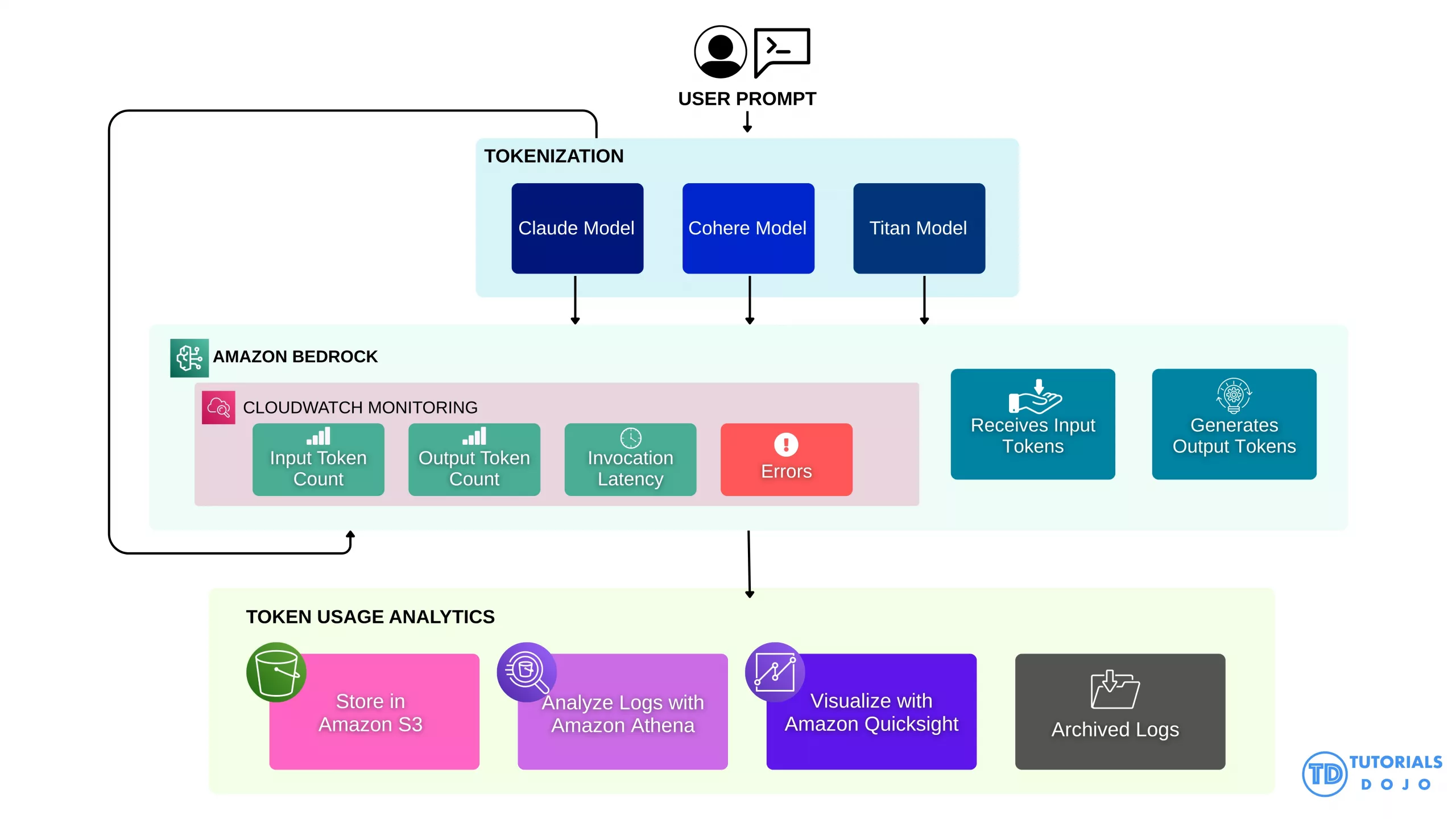

Tokens are the units of text that foundation models (FMs) process. A token can be a whole word, part of a word, or punctuation. When interacting with an Amazon Bedrock model, both the input prompt and the model’s output response are measured in tokens.
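To see input and output token counts for a single call, one option is to read the `usage` object that the Bedrock Converse API returns with each response. The sketch below assumes boto3 is installed and AWS credentials are configured; `summarize_usage` and `ask` are hypothetical helper names, and the model ID is whatever model you have access to.

```python
def summarize_usage(response: dict) -> dict:
    """Pull token counts out of a Converse-style response dict."""
    usage = response.get("usage", {})
    input_tokens = usage.get("inputTokens", 0)
    output_tokens = usage.get("outputTokens", 0)
    return {
        "input": input_tokens,
        "output": output_tokens,
        # Fall back to the sum if the response carries no total.
        "total": usage.get("totalTokens", input_tokens + output_tokens),
    }


def ask(prompt: str, model_id: str) -> dict:
    """Send one prompt through the Converse API and return its token usage."""
    import boto3  # imported lazily so summarize_usage works without AWS access

    client = boto3.client("bedrock-runtime")
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
    )
    return summarize_usage(response)
```

Logging the result of `summarize_usage` per request is a simple way to see which prompts are the expensive ones.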

Each model family in Bedrock uses its own tokenizer and supports a different context window size. This means the same sentence might count as a different number of tokens depending on the model used.

For example, one sentence might tokenize to a different count in each model family:

• Claude: 8 tokens

• Titan: 9 tokens

• Cohere: 7 tokens

These variations arise from each model’s tokenizer and vocabulary. Some tokenizers split words differently, include or exclude certain punctuation marks, or add special tokens for sentence boundaries, so the exact same input text can produce different token counts.

Token Management in Amazon Bedrock

Understanding Foundation Models and Token Behavior

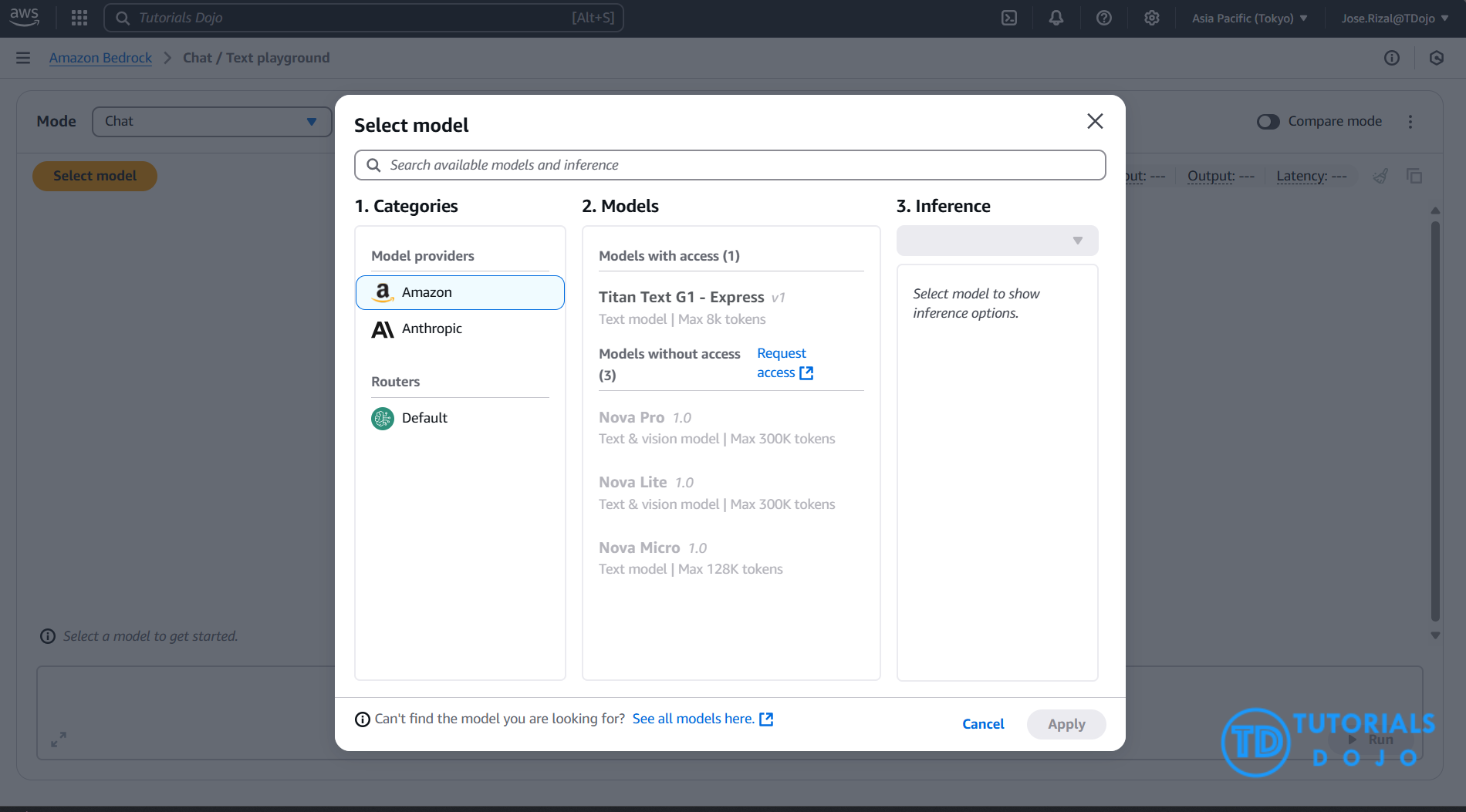

Amazon Bedrock offers access to several FMs from top AI providers. Each model handles tokens differently and is optimized for specific tasks:

| Model Family | Context Window | Use Case | Key Strength |
| --- | --- | --- | --- |
| Claude (Anthropic) | Up to 200,000 tokens | Long document processing | Efficient for reasoning |
| Titan (Amazon) | Up to 32,000 tokens | General text generation | Cost-effective |
| Cohere Command | Up to 128,000 tokens | Instruction-following | Structured outputs |
| Jurassic (AI21 Labs) | Approximately 8,000 to 32,000 tokens | Text generation | Natural-sounding results |
| Meta Llama | Varies | Open-source experimentation | Lightweight deployment |
| Mistral AI | Varies | Lightweight and fast use cases | High performance at low cost |
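As a rough illustration of how the context window figures matter, the sketch below checks whether a prompt plus the space reserved for the response stays within a model family's limit. It assumes the window covers input and output together, which is common but worth confirming for your model. The limits are the upper bounds from the table, and `fits_context` is a hypothetical helper.

```python
# Upper bounds copied from the table; real limits depend on the exact model version.
# Families listed with a range or "Varies" are left out on purpose.
CONTEXT_WINDOWS = {
    "claude": 200_000,
    "titan": 32_000,
    "cohere-command": 128_000,
}


def fits_context(family: str, prompt_tokens: int, max_output_tokens: int) -> bool:
    """Return True if the prompt plus reserved output stays within the window."""
    window = CONTEXT_WINDOWS[family]
    return prompt_tokens + max_output_tokens <= window
```

For example, `fits_context("titan", 30_000, 4_000)` returns `False`, a sign that the prompt needs trimming or a model with a larger window.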

How to Track Token Usage

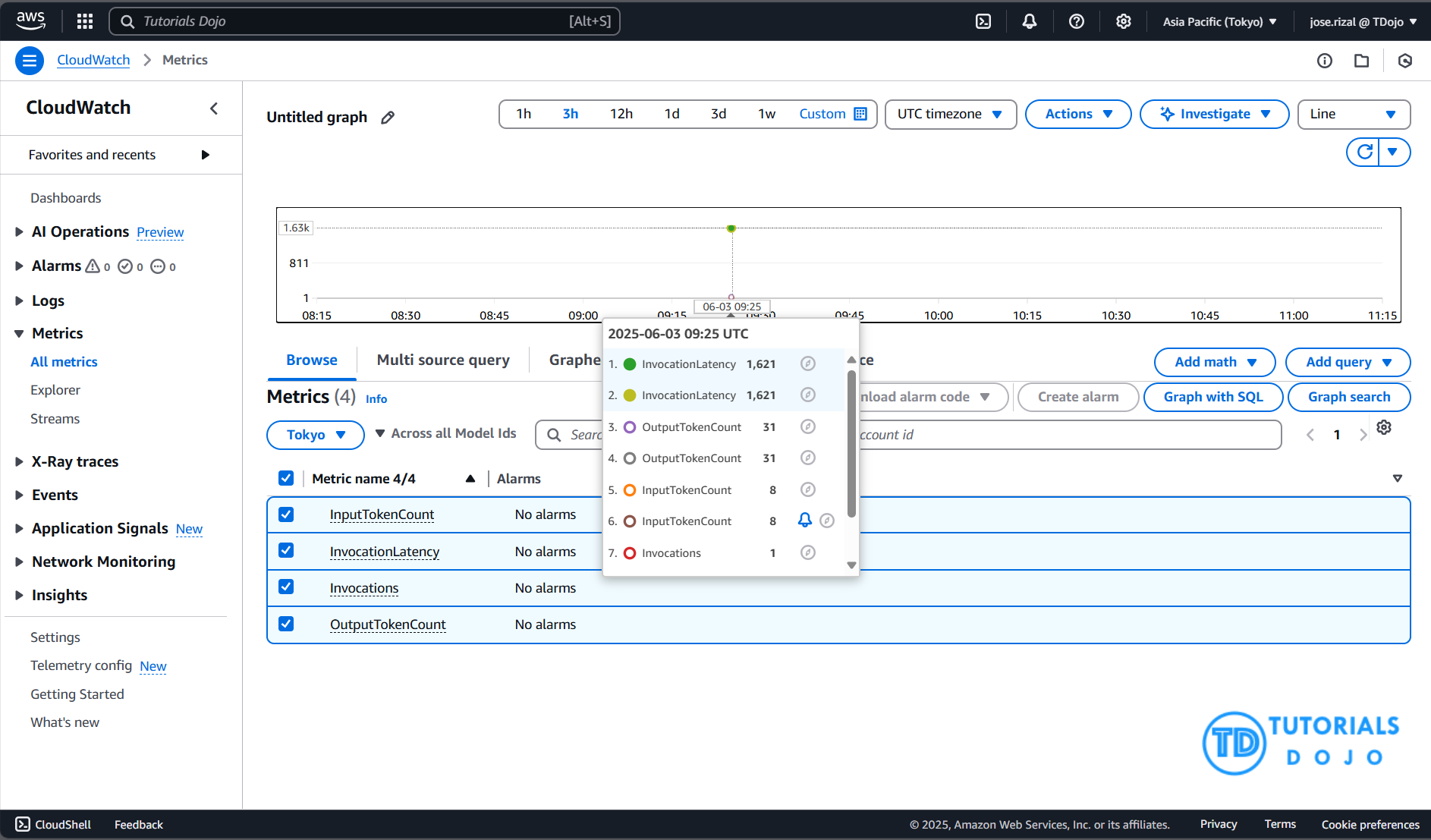

Amazon Bedrock publishes token usage metrics to Amazon CloudWatch, the AWS monitoring service, which makes tracking straightforward.

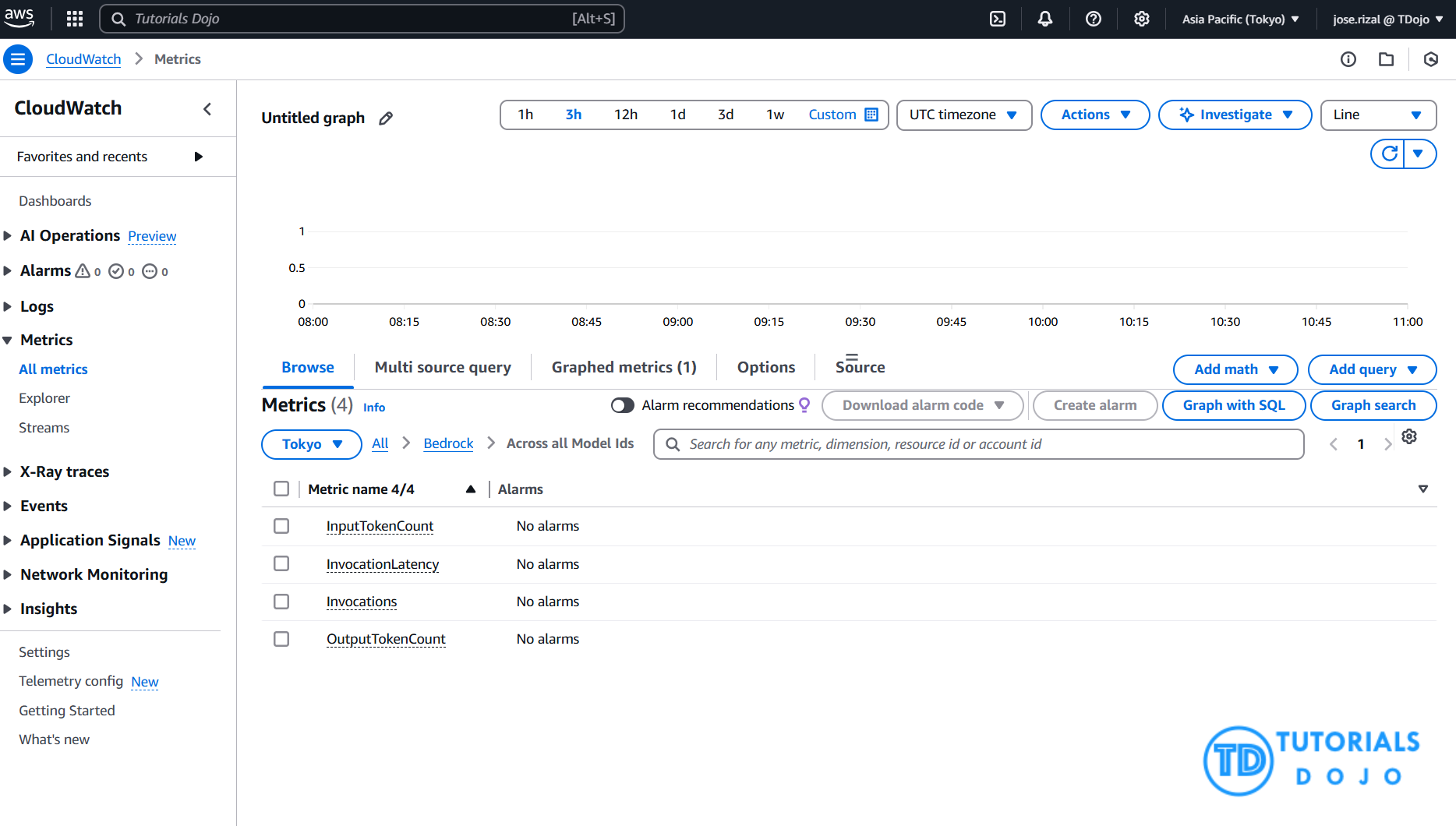

CloudWatch gives you metrics like:

- InputTokenCount – tokens you send to the model

- OutputTokenCount – tokens you get back

- InvocationLatency – how long the model takes to respond

- Invocations – the total number of times the model was called

- InvocationClientErrors and InvocationServerErrors – invocations that failed on the client or the server side

With these, you can monitor how many tokens you use and where.
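The same metrics can also be read programmatically. Here is a minimal sketch using boto3's CloudWatch client. It assumes the metrics live in the `AWS/Bedrock` namespace with a `ModelId` dimension, so check the current documentation if your account shows something different. `token_metric_query` and `total_tokens` are hypothetical helper names.

```python
from datetime import datetime, timedelta, timezone


def token_metric_query(metric_name: str, model_id: str, hours: int = 24) -> dict:
    """Build keyword arguments for CloudWatch get_metric_statistics."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/Bedrock",  # assumed namespace
        "MetricName": metric_name,
        "Dimensions": [{"Name": "ModelId", "Value": model_id}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 3600,  # one datapoint per hour
        "Statistics": ["Sum"],
    }


def total_tokens(metric_name: str, model_id: str, hours: int = 24) -> float:
    """Sum a token metric over the last `hours` hours."""
    import boto3  # imported lazily so the query builder works without AWS access

    cloudwatch = boto3.client("cloudwatch")
    result = cloudwatch.get_metric_statistics(
        **token_metric_query(metric_name, model_id, hours)
    )
    return sum(point["Sum"] for point in result["Datapoints"])
```

Calling `total_tokens("InputTokenCount", model_id)` and `total_tokens("OutputTokenCount", model_id)` gives a quick daily total per model.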

Step-by-Step to Track Amazon Bedrock Token Usage in CloudWatch

- Sign in to the AWS Management Console

- Go to https://console.aws.amazon.com/ and log in with your AWS credentials.

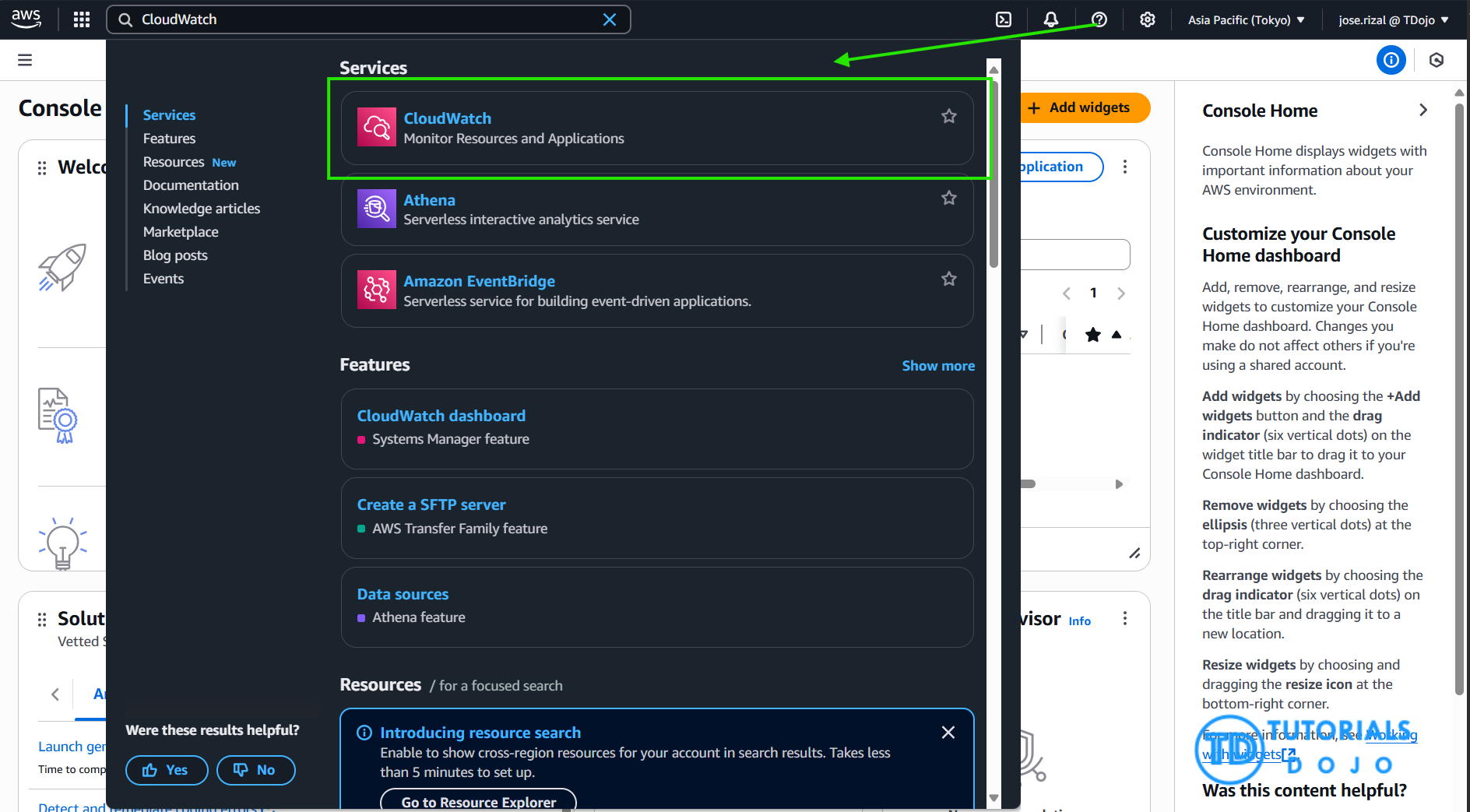

- Open Amazon CloudWatch

- In the AWS Console search bar, type CloudWatch and select CloudWatch from the results.

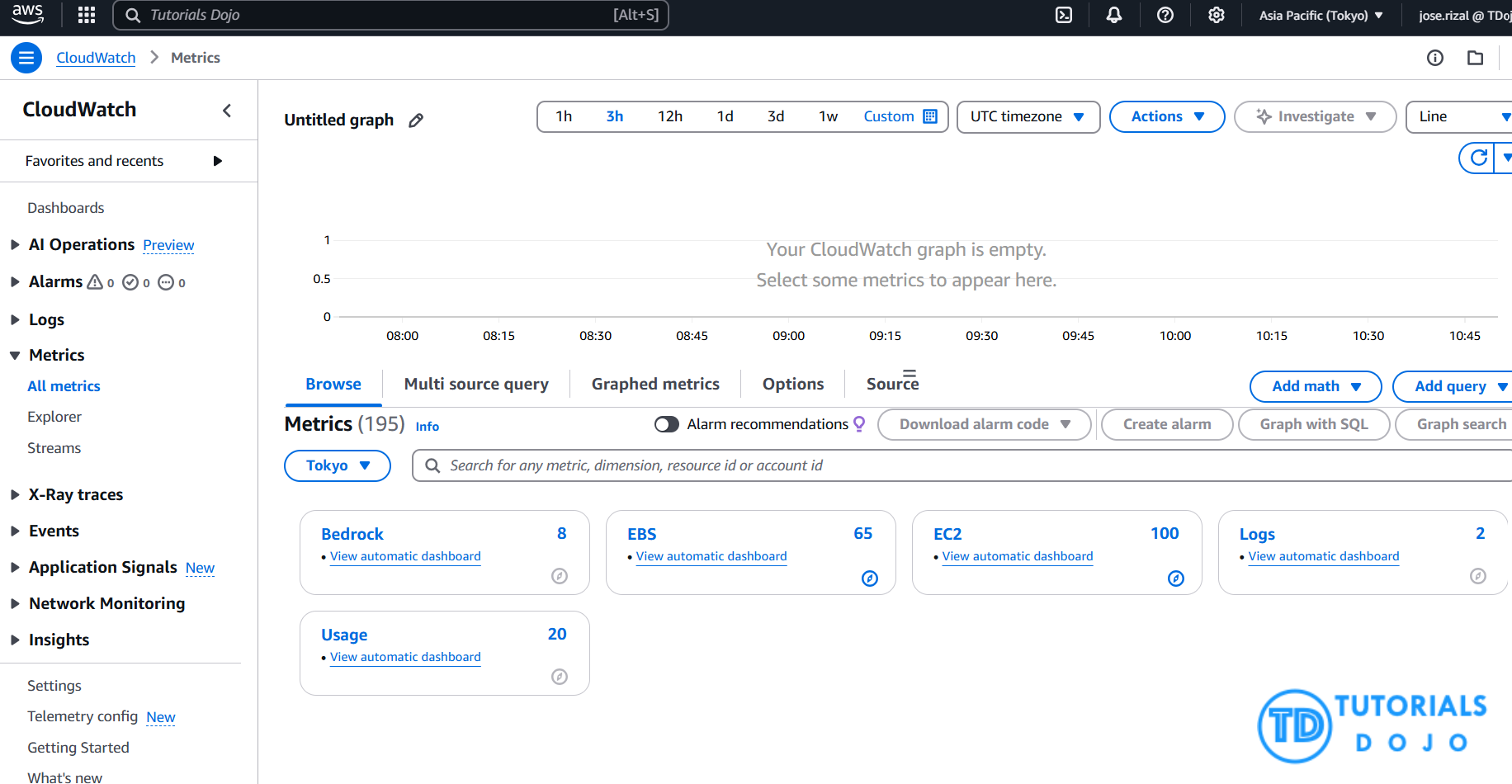

- Navigate to Metrics

- On the CloudWatch dashboard left sidebar, click Metrics.

- Locate Amazon Bedrock Metrics Namespace

- In the Metrics page, look for the namespace related to Amazon Bedrock. This is typically named something like:

- AWS/AmazonBedrock or

- AmazonBedrock (the exact namespace may vary, check the latest docs if unsure)

- Click on the namespace.

- In the Metrics page, look for the namespace related to Amazon Bedrock. This is typically named something like:

- Select Token Usage Metrics

- Within the Amazon Bedrock namespace, you will see available metrics such as:

- InputTokenCount — counts of tokens sent to the model

- OutputTokenCount — counts of tokens received from the model

- InvocationLatency — response times

- Errors — number of errors during invocations

- Click on the metric(s) you want to monitor.

- Within the Amazon Bedrock namespace, you will see available metrics such as:

You track Amazon Bedrock token usage by opening CloudWatch, navigating to the Amazon Bedrock metrics namespace, selecting the relevant token usage metrics (InputTokenCount and OutputTokenCount), and viewing their graphs or creating alarms for monitoring.
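For the alarm part, a minimal sketch with boto3's `put_metric_alarm` might look like the following. It assumes the `AWS/Bedrock` namespace and `ModelId` dimension. The alarm name, threshold, and SNS topic ARN are placeholders you would supply yourself, and the helper names are hypothetical.

```python
def token_alarm_params(
    alarm_name: str, model_id: str, hourly_threshold: int, sns_topic_arn: str
) -> dict:
    """Build keyword arguments for an hourly output-token alarm."""
    return {
        "AlarmName": alarm_name,
        "Namespace": "AWS/Bedrock",  # assumed namespace
        "MetricName": "OutputTokenCount",
        "Dimensions": [{"Name": "ModelId", "Value": model_id}],
        "Statistic": "Sum",
        "Period": 3600,  # evaluate one-hour totals
        "EvaluationPeriods": 1,
        "Threshold": float(hourly_threshold),
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # no traffic should not trigger the alarm
        "AlarmActions": [sns_topic_arn],
    }


def create_token_alarm(
    alarm_name: str, model_id: str, hourly_threshold: int, sns_topic_arn: str
) -> None:
    """Create or update the alarm in CloudWatch."""
    import boto3  # imported lazily so the parameter builder works without AWS access

    boto3.client("cloudwatch").put_metric_alarm(
        **token_alarm_params(alarm_name, model_id, hourly_threshold, sns_topic_arn)
    )
```

The alarm fires when output tokens for the chosen model exceed the threshold within one hour. Swapping `MetricName` to `InputTokenCount` gives the matching alarm for prompts.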

Best Practices to Save Tokens (and Money)

Here are some simple ways to manage tokens wisely:

- Keep prompts short and clear

  - Cut filler text. Every extra word in the prompt is billed as input tokens.

- Use prompt caching

  - Cache the prompt sections you reuse often so the model doesn’t reprocess them on every request.

- Set usage alerts in CloudWatch

  - Get notified before your usage gets out of hand.

- Review usage often

  - Use dashboards or logs to spot heavy usage or spikes.
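Two of these tips can be applied directly in the request. The sketch below builds a Converse API request that caps the response length with `maxTokens` and marks the shared instructions as a cacheable prefix with a `cachePoint` block. Treat the `cachePoint` shape as an assumption to verify against the current Bedrock documentation, since prompt caching is only available on some models. `build_converse_request` is a hypothetical helper.

```python
def build_converse_request(
    model_id: str,
    shared_instructions: str,
    question: str,
    max_output_tokens: int = 300,
    use_cache_point: bool = True,
) -> dict:
    """Build keyword arguments for bedrock-runtime converse()."""
    system = [{"text": shared_instructions.strip()}]
    if use_cache_point:
        # Assumption: content before this marker is treated as a reusable prefix.
        system.append({"cachePoint": {"type": "default"}})
    return {
        "modelId": model_id,
        "system": system,
        "messages": [{"role": "user", "content": [{"text": question.strip()}]}],
        # Caps output tokens so a single response cannot run up the bill.
        "inferenceConfig": {"maxTokens": max_output_tokens},
    }
```

The result can be passed as `boto3.client("bedrock-runtime").converse(**request)`. Keeping the stable instructions first and the changing question last is what makes the prefix reusable.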

Don’t Forget Security

Ensure only the right people can use your models and see token data by setting up IAM roles and permissions. It’s like giving keys only to trusted team members.
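As an illustration, a least-privilege IAM policy that lets a role invoke only one foundation model could be generated like this. The region and model ID are placeholders, and `invoke_only_policy` is a hypothetical helper. The output is a standard IAM policy document you would attach to a role or user.

```python
import json


def invoke_only_policy(region: str, model_id: str) -> dict:
    """Build an IAM policy that allows invoking a single Bedrock foundation model."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeOneModel",
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                # Foundation model ARNs have no account ID segment.
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/{model_id}",
            }
        ],
    }


def policy_json(region: str, model_id: str) -> str:
    """Render the policy as JSON, ready to paste into the IAM console."""
    return json.dumps(invoke_only_policy(region, model_id), indent=2)
```

Access to the token metrics themselves is controlled separately through CloudWatch permissions, so grant those only to the people who need the usage data.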

Conclusion

Managing Amazon Bedrock tokens is about understanding their use, tracking them, and optimizing your prompts. If you do this well, you can save money, speed up your app, and build more innovative AI tools.