Last updated on December 9, 2025

Here are 10 AWS Certified DevOps Engineer Professional DOP-C02 practice exam questions to help you gauge your readiness for the actual exam.

Question 1

An application is hosted in an Auto Scaling group of Amazon EC2 instances with public IP addresses in a public subnet. The instances are configured with a user data script that fetches and installs the required system dependencies of the application from the Internet upon launch. A change was recently introduced to prohibit any Internet access from these instances to improve security, but after its implementation, the instances can no longer retrieve the external dependencies. Upon investigation, all instances are running properly, but the hosted application does not start up completely because of the incomplete installation.

Which of the following is the MOST secure solution to solve this issue and also ensure that the instances do not have public Internet access?

- Download all of the external application dependencies from the public Internet and then store them in an S3 bucket. Set up a VPC endpoint for the S3 bucket and then assign an IAM instance profile to the instances in order to allow them to fetch the required dependencies from the bucket.

- Deploy the Amazon EC2 instances in a private subnet and associate Elastic IP addresses on each of them. Run a custom shell script to disassociate the Elastic IP addresses after the application has been successfully installed and is running properly.

- Use a NAT gateway to disallow any traffic to the VPC which originated from the public Internet. Deploy the Amazon EC2 instances to a private subnet then set the subnet’s route table to use the NAT gateway as its default route.

- Set up a brand new security group for the Amazon EC2 instances. Use a whitelist configuration to only allow outbound traffic to the site where all of the application dependencies are hosted. Delete the security group rule once the installation is complete. Use AWS Config to monitor the compliance.
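For readers unfamiliar with how a VPC endpoint for S3 can be scoped, the following is a minimal sketch of an endpoint policy document that only permits reads from a single bucket. The bucket name `example-app-dependencies` and the helper `build_endpoint_policy` are hypothetical, and a working setup would additionally need matching permissions on the instance role and a route table association for the endpoint.

```python
import json


def build_endpoint_policy(bucket_name: str) -> dict:
    """Return an S3 VPC endpoint policy that allows read-only access to one bucket.

    The bucket name is supplied by the caller; nothing here talks to AWS.
    """
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadDependenciesOnly",
                "Effect": "Allow",
                "Principal": "*",
                # ListBucket applies to the bucket ARN, GetObject to the objects in it.
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket name, used only for illustration.
    print(json.dumps(build_endpoint_policy("example-app-dependencies"), indent=2))
```

The resulting JSON could be attached as the policy document when the endpoint is created, so that traffic through the endpoint is limited to the dependency bucket.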

Question 2

A DevOps engineer has been tasked to implement a reliable solution to maintain all of their Windows and Linux servers, both in AWS and in their on-premises data center. There should be a system that allows them to easily update the operating systems of their servers and apply the core application patches with minimum management overhead. The patches must be consistent across all levels in order to meet the company’s security compliance.

Which of the following is the MOST suitable solution that you should implement?

- Configure and install AWS Systems Manager agent on all of the EC2 instances in your VPC as well as your physical servers on-premises. Use the Systems Manager Patch Manager service and specify the required Systems Manager Resource Groups for your hybrid architecture. Utilize a preconfigured patch baseline and then run scheduled patch updates during maintenance windows.

- Configure and install the AWS OpsWorks agent on all of your EC2 instances in your VPC and your on-premises servers. Set up an OpsWorks stack with separate layers for each OS then fetch a recipe from the Chef supermarket site (supermarket.chef.io) to automate the execution of the patch commands for each layer during maintenance windows.

- Develop a custom Python script to install the latest OS patches on the Linux servers. Set up a scheduled job to automatically run this script using the cron scheduler on Linux servers. Enable Windows Update in order to automatically patch Windows servers or set up a scheduled task using Windows Task Scheduler to periodically run the Python script.

- Store the login credentials of each Linux and Windows server in the AWS Systems Manager Parameter Store. Use Systems Manager Resource Groups to set up one group for your Linux servers and another one for your Windows servers. Remotely log in, run, and deploy the patch updates to all of your servers using the credentials stored in the Systems Manager Parameter Store and through the use of the Systems Manager Run Command.

Question 3

A fast-growing company has multiple AWS accounts which are consolidated using AWS Organizations and they expect to add new accounts soon. As the DevOps engineer, you were instructed to design a centralized logging solution to deliver all of their VPC Flow Logs and CloudWatch Logs across all of their sub-accounts to their dedicated Audit account for compliance purposes. The logs should also be properly indexed in order to perform search, retrieval, and analysis.

Which of the following is the MOST suitable solution that you should implement to meet the above requirements?

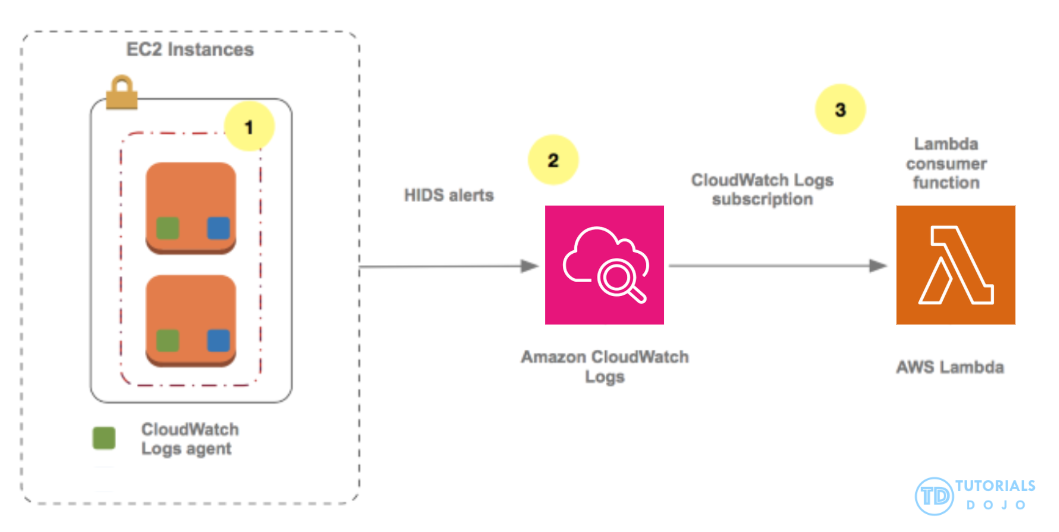

- In the Audit account, launch a new Lambda function which will send all VPC Flow Logs and CloudWatch Logs to an Amazon OpenSearch cluster. Use a CloudWatch subscription filter in the sub-accounts to stream all of the logs to the Lambda function in the Audit account.

- In the Audit account, create a new stream in Kinesis Data Streams and a Lambda function that acts as an event handler to send all of the logs to the Amazon OpenSearch cluster. Create a CloudWatch subscription filter and use Kinesis Data Streams to stream all of the VPC Flow Logs and CloudWatch Logs from the sub-accounts to the Kinesis data stream in the Audit account.

- In the Audit account, create an Amazon SQS queue that will push all logs to an Amazon OpenSearch cluster. Use a CloudWatch subscription filter to stream both VPC Flow Logs and CloudWatch Logs from their sub-accounts to the SQS queue in the Audit account.

- In the Audit account, launch a new Lambda function which will push all of the required logs to a self-hosted OpenSearch cluster in a large EC2 instance. Integrate the Lambda function to a CloudWatch subscription filter to collect all of the logs from the sub-accounts and stream them to the Lambda function deployed in the Audit account.
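Several of the options above rely on a CloudWatch Logs subscription filter feeding a consumer. As a rough sketch of what such a consumer has to do first, the code below decodes the payload a subscription filter delivers to a Lambda function (base64-encoded, gzip-compressed JSON under `awslogs.data`) and flattens it into per-line documents. The output field names (`account`, `log_group`, and so on) and the function names are illustrative assumptions, and the actual indexing call is left out.

```python
import base64
import gzip
import json


def decode_subscription_event(event: dict) -> dict:
    """Decode the base64 + gzip payload that CloudWatch Logs sends to Lambda."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(compressed))


def to_documents(payload: dict) -> list:
    """Flatten log events into one document per log line (field names are illustrative)."""
    return [
        {
            "account": payload["owner"],
            "log_group": payload["logGroup"],
            "log_stream": payload["logStream"],
            "timestamp": log_event["timestamp"],
            "message": log_event["message"],
        }
        for log_event in payload["logEvents"]
    ]


if __name__ == "__main__":
    # Build a fake event locally; the account ID and names are made up.
    sample = {
        "messageType": "DATA_MESSAGE",
        "owner": "111122223333",
        "logGroup": "example-flow-logs",
        "logStream": "eni-0123456789abcdef0-all",
        "subscriptionFilters": ["example-filter"],
        "logEvents": [{"id": "1", "timestamp": 1700000000000, "message": "ACCEPT OK"}],
    }
    data = base64.b64encode(gzip.compress(json.dumps(sample).encode())).decode()
    print(to_documents(decode_subscription_event({"awslogs": {"data": data}})))
```

Keeping the source account and log group on every document is what makes the logs searchable per account once they land in the index.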

Question 4

A startup prioritizes a serverless approach, using AWS Lambda for new workloads to analyze performance and identify bottlenecks. The startup aims to transition to self-managed services on top of Amazon EC2 later if it is more cost-effective. To do this, a solution for granular monitoring of every component of the call graph, including services and internal functions, for all requests, is required. In addition, the startup wants engineers and other stakeholders to be notified of performance irregularities as soon as such irregularities arise.

Which option will meet these requirements?

-

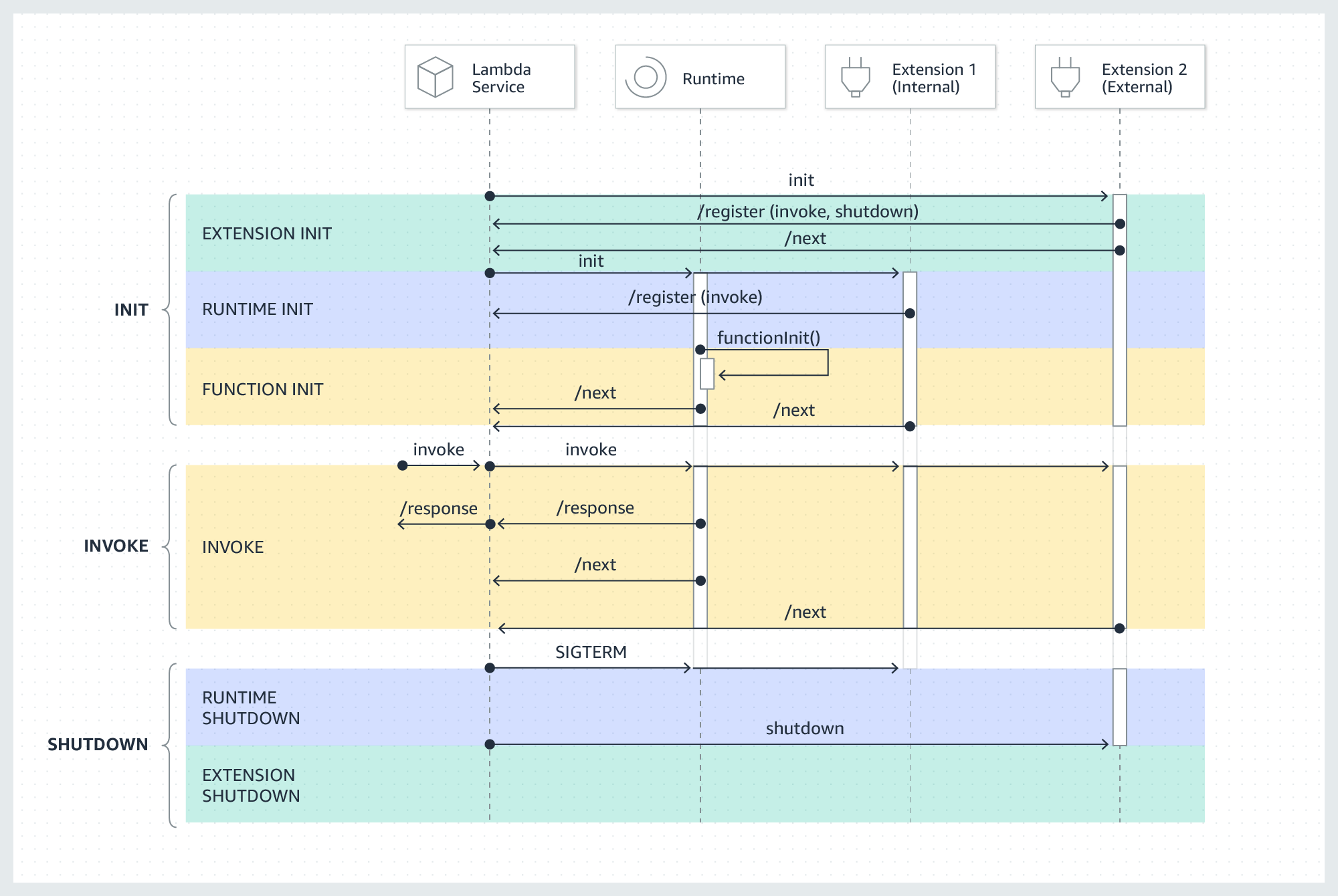

Create an internal extension and instrument Lambda workloads into X-Ray segments and subsegments. Enable X-Ray active tracing for Lambda. Assign an execution role allowing X-Ray actions. Set up X-Ray groups around workflows and enable X-Ray insights. Configure relevant EventBridge rules and CloudWatch alarms.

-

Consolidate workflows spanning multiple Lambda functions into 1 function per workflow. Create an external extension and enable AWS X-Ray active tracing to instrument functions into segments. Assign an execution role allowing X-Ray actions. Enable X-Ray insights and set up appropriate Amazon EventBridge rules and Amazon CloudWatch alarms.

-

Create an external extension and instrument Lambda workloads into AWS X-Ray segments and subsegments. Enable X-Ray active tracing for Lambda. Assign an execution role allowing X-Ray actions. Set up X-Ray groups around workflows and enable Amazon CloudWatch Logs insights. Configure relevant Amazon EventBridge rules and CloudWatch alarms.

-

Create an external extension and instrument Lambda workloads into AWS X-Ray segments and subsegments. Enable X-Ray active tracing for Lambda. Assign an execution role allowing X-Ray actions. Set up X-Ray groups around workflows and enable X-Ray insights. Configure relevant Amazon EventBridge rules and Amazon CloudWatch alarms.

Question 5

A DevOps engineer has been tasked with implementing configuration management for the company’s infrastructure in AWS. To adhere to the company’s strict security policies, the solution must include a near real-time dashboard that displays the compliance status of the systems and can detect any violations.

Which solution would be able to meet the above requirements?

- Use AWS Service Catalog to create the required resource configurations for its compliance posture. Monitor the compliance and violations of its cloud resources using a custom CloudWatch dashboard with an integrated Amazon SNS to send notifications.

- Use AWS Config to record all configuration changes and store the data reports to Amazon S3. Use Amazon QuickSight to analyze the dataset.

- Tag all the resources and use Trusted Advisor to monitor both the compliant and non-compliant resources. Use the AWS Management Console to monitor the status of the compliance posture.

- Use Amazon Inspector to monitor the compliance posture of the systems and store the reports in Amazon CloudWatch Logs. Use a CloudWatch dashboard with a custom metric filter to monitor and view all of the specific compliance requirements.

Question 6

A recent production incident has caused a data breach in one of the company’s flagship applications, which is hosted in an Auto Scaling group of Amazon EC2 instances. In order to prevent this from happening again, a DevOps engineer was tasked to implement a solution that would automatically terminate any instance in production that was manually logged into via SSH. All of the EC2 instances that are being used by the application already have an Amazon CloudWatch Logs agent installed.

Which of the following is the MOST automated solution that the DevOps engineer should implement?

- Set up and integrate a CloudWatch Logs subscription with AWS Step Functions to add a special `FOR_DELETION` tag to the specific EC2 instance that had an SSH login event. Create an Amazon EventBridge rule to trigger a second AWS Lambda function every day at 12 PM that will terminate all of the EC2 instances with this tag.

- Set up a CloudWatch Alarm that will be triggered when an SSH login event occurs and configure it also to send a notification to an Amazon SNS topic once the alarm is triggered. Instruct the Support and Operations team to subscribe to the SNS topic and then manually terminate the detected EC2 instance as soon as possible.

- Set up a CloudWatch Alarm that will be triggered when there is an SSH login event and configure it to send a notification to an Amazon Simple Queue Service (Amazon SQS) queue. Launch a group of EC2 worker instances to consume the messages from the SQS queue and terminate the detected EC2 instances.

- Set up a CloudWatch Logs subscription with an AWS Lambda function which is configured to add a `FOR_DELETION` tag to the EC2 instance that produced the SSH login event. Run another Lambda function every day using the Amazon EventBridge rule to terminate all EC2 instances with the custom tag for deletion.
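To make the log-driven tagging idea in these options more concrete, here is a minimal sketch of the detection step only. It scans already-decoded CloudWatch Logs events for successful `sshd` logins and builds the parameters for a tagging request. It assumes the log stream name is the EC2 instance ID (a common agent configuration, but not guaranteed), and `find_ssh_logins` and `build_tag_request` are hypothetical helper names. No AWS call is made here.

```python
import re

# Matches successful sshd logins such as:
#   "Accepted publickey for ec2-user from 203.0.113.10 port 52344 ssh2"
SSH_LOGIN = re.compile(
    r"Accepted (?P<method>\S+) for (?P<user>\S+) from (?P<ip>\S+) port \d+"
)


def find_ssh_logins(payload: dict) -> list:
    """Return one record per successful SSH login found in a decoded log payload.

    Assumes payload["logStream"] is the instance ID of the host that wrote the log.
    """
    logins = []
    for log_event in payload.get("logEvents", []):
        match = SSH_LOGIN.search(log_event["message"])
        if match:
            logins.append({"instance_id": payload["logStream"], **match.groupdict()})
    return logins


def build_tag_request(instance_id: str) -> dict:
    """Build keyword arguments shaped for an EC2 create-tags request."""
    return {
        "Resources": [instance_id],
        "Tags": [{"Key": "FOR_DELETION", "Value": "true"}],
    }


if __name__ == "__main__":
    payload = {
        "logStream": "i-0123456789abcdef0",  # made-up instance ID
        "logEvents": [
            {"message": "Accepted publickey for ec2-user from 203.0.113.10 port 52344 ssh2"},
            {"message": "Failed password for invalid user admin from 198.51.100.7 port 4022 ssh2"},
        ],
    }
    for login in find_ssh_logins(payload):
        print(build_tag_request(login["instance_id"]))
```

Separating detection from the tagging call keeps the matching logic easy to test with sample log lines before it is wired to a real subscription.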

Question 7

A startup’s application is running in a single AWS region and utilizes Amazon DynamoDB as its backend.

The application is growing in popularity, and the startup just signed a deal to offer the application in other regions. The startup is looking for a solution that will satisfy the following requirements:

- DynamoDB table must be available in all three regions to deliver low-latency data access.

- When the table is updated in one Region, the change must seamlessly propagate to the other regions.

Which of the following solutions will meet the requirements with the LEAST operational overhead?

- Create DynamoDB global tables, then configure a primary table in one region and a read replica in the other regions.

- Provision a multi-Region, multi-active DynamoDB global table that includes the three Regions.

- Create DynamoDB tables in each of the three regions. Set up each table to use the same name.

- Provision three DynamoDB tables, one for each required region. Synchronize data changes among the tables using AWS SDK.

Question 8

Due to regional growth, an e-commerce company has decided to expand its global operations. The app’s REST API web services run in an Auto Scaling group of Amazon EC2 instances across multiple Availability Zones behind an Application Load Balancer. The application uses a single Amazon Aurora MySQL database instance in the AWS Region where it is based.

The company aims to consolidate and store product catalog data into a single data source across all regions. To comply with data privacy regulations, personal information, purchases, and financial data must be stored within each respective region.

Which of the following options can meet the above requirements and entail the LEAST amount of change to the application?

- Set up a new Amazon Redshift database to store the product catalog. Launch a new set of Amazon DynamoDB tables to store their customers’ personal information and financial data.

- Set up an Amazon DynamoDB global table to store the product catalog data of the e-commerce website. Use regional DynamoDB tables to store their customers’ personal information and financial data.

- Set up multiple read replicas in your Aurora cluster to store the product catalog data. Launch an additional local Aurora instance in each AWS Region to store customers’ personal information and financial data.

- Set up multiple read replicas in your Aurora cluster to store the product catalog data. Launch a new Amazon DynamoDB global table for storing their customers’ personal information and financial data.

Question 9

A company plans to implement a monitoring system to track the cost-effectiveness of its Amazon EC2 resources across multiple AWS accounts. All existing resources are appropriately tagged to reflect the corresponding environment, department, and business unit for cost allocation purposes.

The company has instructed its DevOps engineer to automate infrastructure cost optimization across various shared environments and accounts, including detecting EC2 instances with low utilization.

Which is the MOST suitable solution that the DevOps engineer should implement in this scenario?

- Develop a custom shell script on an EC2 instance that runs periodically to report the instance utilization of all instances and store the result in an Amazon DynamoDB table. Use an Amazon QuickSight dashboard with DynamoDB as the source data to monitor and identify the underutilized EC2 instances. Integrate QuickSight and AWS Lambda to trigger an EC2 termination command for the underutilized instances.

- Set up an Amazon CloudWatch dashboard for EC2 instance tags based on the environment, department, and business unit to track the instance utilization. Create a trigger using an Amazon EventBridge rule and AWS Lambda to terminate the underutilized EC2 instances.

- Utilize AWS Trusted Advisor through a Business Support plan and integrate it with Amazon EventBridge to detect EC2 instances with low utilization. Set up an AWS Lambda function to filter the Trusted Advisor data using environment, department, and business unit tags. Create another Lambda trigger to terminate the underutilized instances.

- Set up AWS Systems Manager to track the utilization of all of your EC2 instances and report underutilized instances to Amazon CloudWatch. Filter the data in CloudWatch based on tags for each environment, department, and business unit. Create triggers in CloudWatch that will invoke an AWS Lambda function that will terminate underutilized EC2 instances.
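All four options hinge on deciding when an instance counts as underutilized and then narrowing the result by tag. The sketch below shows one possible way to express that logic. The thresholds (CPU at or below 10% and network I/O at or below 5 MB on at least 4 of the observed days) are modeled on how the Trusted Advisor low-utilization check is commonly described and should be treated as assumptions, as should the `DailyUsage` type and the helper names.

```python
from dataclasses import dataclass


@dataclass
class DailyUsage:
    cpu_percent: float  # average CPU utilization for the day
    network_mb: float   # total network I/O for the day, in megabytes


def is_low_utilization(days, cpu_threshold=10.0, network_threshold_mb=5.0, min_low_days=4):
    """Return True if enough days fall at or below both thresholds (assumed values)."""
    low_days = sum(
        1
        for day in days
        if day.cpu_percent <= cpu_threshold and day.network_mb <= network_threshold_mb
    )
    return low_days >= min_low_days


def filter_by_tags(instances, required_tags):
    """Keep only instances whose tags contain every required key/value pair."""
    return [
        instance
        for instance in instances
        if all(instance["tags"].get(key) == value for key, value in required_tags.items())
    ]


if __name__ == "__main__":
    idle = [DailyUsage(2.0, 1.0)] * 14
    busy = [DailyUsage(65.0, 900.0)] * 14
    instances = [
        {"id": "i-idle", "tags": {"environment": "dev"}, "usage": idle},
        {"id": "i-busy", "tags": {"environment": "dev"}, "usage": busy},
    ]
    dev = filter_by_tags(instances, {"environment": "dev"})
    print([i["id"] for i in dev if is_low_utilization(i["usage"])])
```

Filtering by tag before acting is what lets the same detection logic be applied per environment, department, or business unit.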

Question 10

A company has several legacy systems which use both on-premises servers and EC2 instances in AWS. The cluster nodes in AWS are configured to have a local IP address and a fixed hostname in order for the on-premises servers to communicate with them. There is a requirement to automate the configuration of the cluster, which consists of 10 nodes, to ensure high availability across three Availability Zones. There should also be a corresponding elastic network interface in a specific subnet for each Availability Zone. Each cluster node’s local IP address and hostname must be static and should not change even if the instance reboots or gets terminated.

Which of the following solutions requires the LEAST amount of effort to automate this architecture?

- Launch an Elastic Beanstalk application with 10 EC2 instances, each with a corresponding ENI, hostname, and AZ as input parameters. Use the Elastic Beanstalk health agent daemon process to configure the hostname of the instance and attach a specific ENI based on the current environment.

- Set up a CloudFormation child stack template which launches an Auto Scaling group consisting of just one EC2 instance then provide a list of ENIs, hostnames, and the specific AZs as stack parameters. Set both the `MinSize` and `MaxSize` parameters of the Auto Scaling group to 1. Add a user data script that will attach an ENI to the instance once launched. Use CloudFormation nested stacks to provision a total of 10 nodes needed for the cluster, and deploy the stack using a master template.

- Use a DynamoDB table to store the list of ENIs, hostnames, and subnets which will be used by the cluster. Set up a single AWS CloudFormation template to manage an Auto Scaling group with a minimum and maximum size of 10. Maintain the assignment of each local IP address and hostname of the instances by using Systems Manager.

- Develop a custom AWS CLI script to launch the EC2 instances, each with an attached ENI and a unique name, and placed in a specific AZ. Replace the missing EC2 instance by running the script via AWS CloudShell in the event that one of the instances in the cluster is rebooted or terminated.

For more practice questions like these and to further prepare you for the actual AWS Certified DevOps Engineer Professional DOP-C02 exam, we recommend that you take our top-notch AWS Certified DevOps Engineer Professional Practice Exams, which have been regarded as the best in the market.

Also check out our AWS Certified DevOps Engineer Professional DOP-C02 Exam Study Guide.