Last updated on January 27, 2026

Google Cloud Logging Cheat Sheet

- An exabyte-scale, fully managed service for real-time log management.

- Helps you securely store, search, analyze, and alert on all of your log data and events.

Features

- Write any custom log, from any source, into Cloud Logging using the public write APIs.

- You can search, sort, and query logs with query statements, supported by histogram visualizations, field explorers, and the ability to save queries.

- Integrates with Cloud Monitoring so you can set alerts on log events and on the logs-based metrics you have defined.

- You can export data in real time to BigQuery to perform advanced analytics and run SQL-like queries.

- Cloud Logging works with Error Reporting to surface problems hidden in large volumes of log data. Error Reporting automatically analyzes your logs for exceptions and aggregates them into meaningful error groups.
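The first feature above, writing custom logs through the public write APIs, can be sketched as follows. This is a minimal illustration that only builds the JSON body for the Cloud Logging API `entries.write` method using the Python standard library. The project ID `my-project`, the log ID `my-app-log`, and the helper name `build_write_request` are hypothetical, and sending the request (authentication and the HTTP call) is deliberately left out.

```python
import json


def build_write_request(project_id, log_id, message, severity="INFO"):
    """Build a request body for the Logging API entries.write method.

    project_id and log_id are placeholders supplied by the caller.
    """
    return {
        # Log names follow the pattern projects/PROJECT_ID/logs/LOG_ID.
        "logName": f"projects/{project_id}/logs/{log_id}",
        # "global" is a generic monitored-resource type for logs that
        # are not tied to a more specific resource.
        "resource": {"type": "global"},
        "entries": [
            {
                "severity": severity,
                # Structured payload; a plain string could use
                # "textPayload" instead.
                "jsonPayload": {"message": message},
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only.
    body = build_write_request("my-project", "my-app-log", "Order processed")
    print(json.dumps(body, indent=2))
```

In practice, most applications would use a Cloud Logging client library or a logging agent rather than assembling the request by hand; the sketch only shows what a write request carries.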

Cloud Audit Logs

Cloud Audit Logs maintains audit logs for each Cloud project, folder, and organization. There are four types of logs you can use:

1. Admin Activity audit logs

- Contains log entries for API calls or other administrative actions that modify the configuration or metadata of resources.

- You must have the IAM role Logging/Logs Viewer or Project/Viewer to view these logs.

- Admin Activity audit logs are always written and you can’t configure or disable them in any way.

2. Data Access audit logs

- Contains log entries for API calls that read the configuration or metadata of resources, as well as user-driven API calls that create, modify, or read user-provided resource data.

- You must have the IAM roles Logging/Private Logs Viewer or Project/Owner to view these logs.

- You must explicitly enable Data Access audit logs for them to be written. They are disabled by default (BigQuery Data Access audit logs are the exception) because they can be quite large.

3. System Event audit logs

- Contains log entries for administrative actions taken by Google Cloud that modify the configuration of resources.

- You must have the IAM role Logging/Logs Viewer or Project/Viewer to view these logs.

- System Event audit logs are always written so you can’t configure or disable them.

- There is no additional charge for your System Event audit logs.

4. Policy Denied audit logs

- Contains log entries recorded when a Google Cloud service denies access to a user or service account because of a security policy violation.

- You must have the IAM role Logging/Logs Viewer or Project/Viewer to view these logs.

- Policy Denied audit logs are generated by default. Your Cloud project is charged for the log storage.
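Each of the four audit log types above is written to its own log name, which is what you filter on in the Logs Explorer or in a sink. The sketch below builds those log names and a matching Logging query language filter. The helper names and the project ID `my-project` are hypothetical; the log IDs are the `cloudaudit.googleapis.com/...` identifiers used by Cloud Audit Logs, with the slash URL-encoded as `%2F`.

```python
from urllib.parse import quote

# Log IDs used by Cloud Audit Logs for each audit log type.
AUDIT_LOG_IDS = {
    "admin_activity": "cloudaudit.googleapis.com/activity",
    "data_access": "cloudaudit.googleapis.com/data_access",
    "system_event": "cloudaudit.googleapis.com/system_event",
    "policy_denied": "cloudaudit.googleapis.com/policy",
}


def audit_log_name(project_id, audit_type):
    """Return the full log name for one audit log type in a project."""
    # The log ID part of a log name must be URL-encoded ("/" -> "%2F").
    log_id = quote(AUDIT_LOG_IDS[audit_type], safe="")
    return f"projects/{project_id}/logs/{log_id}"


def audit_log_filter(project_id, audit_types):
    """Build a query that matches any of the given audit log types."""
    clauses = [
        f'logName="{audit_log_name(project_id, t)}"' for t in audit_types
    ]
    return " OR ".join(clauses)


if __name__ == "__main__":
    # Hypothetical project ID, for illustration only.
    print(audit_log_filter("my-project", ["admin_activity", "data_access"]))
```

The same filter string can be pasted into the Logs Explorer query box or used as the filter of a logs sink.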

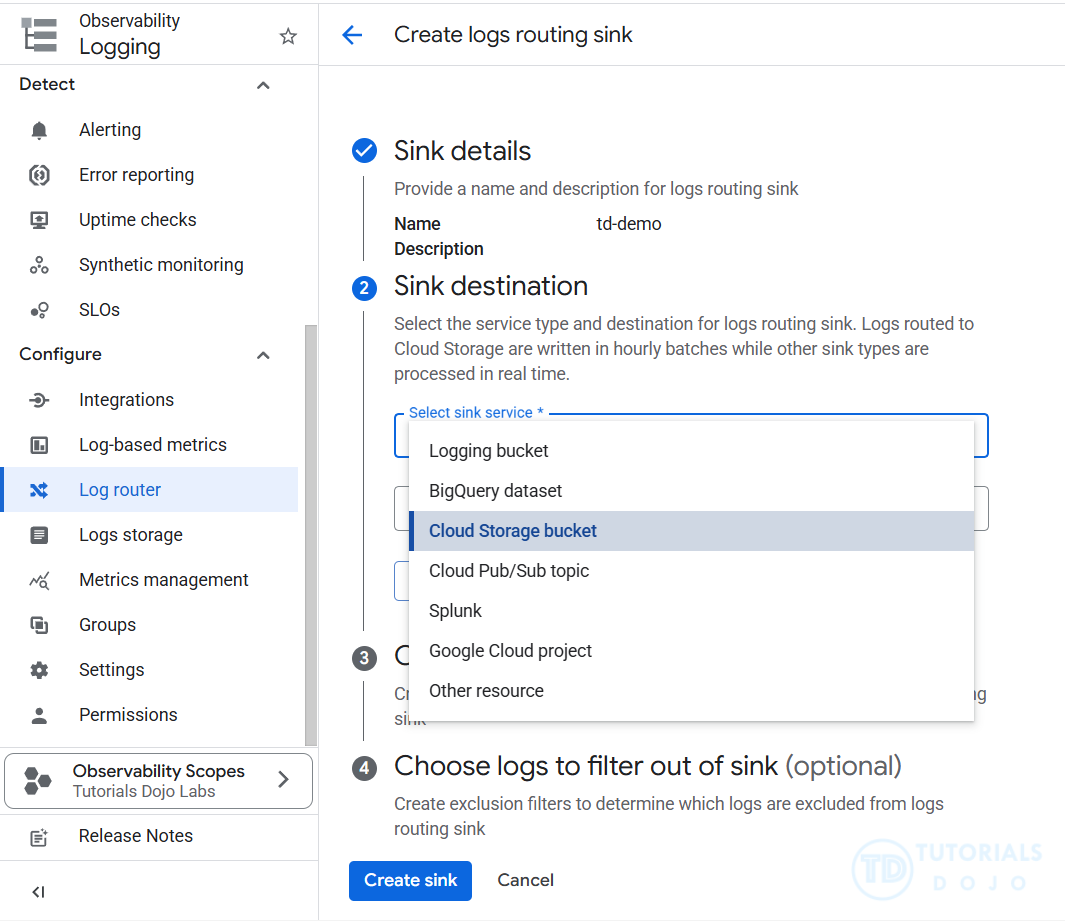
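Because Data Access audit logs must be enabled explicitly, it can help to see what that configuration looks like. Data Access logging is controlled through the `auditConfigs` section of an IAM policy, where each service lists the log types to record (`ADMIN_READ`, `DATA_READ`, `DATA_WRITE`). The following is a minimal sketch that only builds that fragment for Cloud Storage; the helper name `build_audit_config` is hypothetical, and applying the policy to a project is not shown.

```python
import json

# Log types that can be enabled for Data Access audit logs.
DATA_ACCESS_LOG_TYPES = ("ADMIN_READ", "DATA_READ", "DATA_WRITE")


def build_audit_config(service, log_types=DATA_ACCESS_LOG_TYPES):
    """Build one auditConfigs entry for a service such as Cloud Storage."""
    for log_type in log_types:
        if log_type not in DATA_ACCESS_LOG_TYPES:
            raise ValueError(f"unknown Data Access log type: {log_type}")
    return {
        "service": service,
        "auditLogConfigs": [{"logType": t} for t in log_types],
    }


if __name__ == "__main__":
    # Record reads and writes of object data in Cloud Storage.
    policy_fragment = {
        "auditConfigs": [
            build_audit_config(
                "storage.googleapis.com", ["DATA_READ", "DATA_WRITE"]
            )
        ]
    }
    print(json.dumps(policy_fragment, indent=2))
```

The resulting fragment would be merged into the project's existing IAM policy; the same setting can also be changed from the Audit Logs page in the console.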

Exporting Audit Logs

- Log entries received by Logging can be exported to Cloud Storage buckets, BigQuery datasets, and Pub/Sub topics.

- To export audit log entries outside of Logging:

  - Create a logs sink.

  - Give the sink a query that specifies the audit log types you want to export.

- If you want to export audit log entries for a Google Cloud organization, folder, or billing account, review Aggregated sinks.
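The export steps above can be sketched as a sink definition. This minimal example builds the body of a logs sink (name, destination, filter) for each of the three supported destination types, without calling the API. The sink name, bucket, dataset, topic, and project IDs are hypothetical placeholders, and the helper names are made up for illustration. The `includeChildren` field is what makes a sink created on an organization or folder an aggregated sink.

```python
def sink_destination(kind, **ids):
    """Return the destination string for a sink of the given kind."""
    if kind == "storage":
        return f"storage.googleapis.com/{ids['bucket']}"
    if kind == "bigquery":
        return (
            "bigquery.googleapis.com/"
            f"projects/{ids['project']}/datasets/{ids['dataset']}"
        )
    if kind == "pubsub":
        return (
            "pubsub.googleapis.com/"
            f"projects/{ids['project']}/topics/{ids['topic']}"
        )
    raise ValueError(f"unsupported destination kind: {kind}")


def build_sink(name, destination, log_filter, include_children=False):
    """Build a logs sink definition.

    include_children=True is only meaningful for sinks created on an
    organization or folder (aggregated sinks).
    """
    return {
        "name": name,
        "destination": destination,
        "filter": log_filter,
        "includeChildren": include_children,
    }


if __name__ == "__main__":
    # Hypothetical names; the filter matches Admin Activity audit logs.
    sink = build_sink(
        "audit-archive",
        sink_destination("storage", bucket="my-audit-bucket"),
        'logName:"cloudaudit.googleapis.com%2Factivity"',
    )
    print(sink)
```

After a sink is created, its writer identity still needs permission to write to the destination, otherwise no entries arrive.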

Pricing

- Cloud Logging features are free to use; you are charged only for ingested log volume that exceeds the free allotment. The free allotment carries no upfront fees or commitments.
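The pricing rule above is simple arithmetic: only the volume beyond the free allotment is billed. The sketch below makes that explicit. The allotment and per-GiB price are passed in as parameters, and the numbers in the example (50 GiB free, 0.50 per GiB) are illustrative assumptions only; check the pricing page for current values.

```python
def monthly_ingestion_charge(ingested_gib, free_gib, price_per_gib):
    """Charge for one month: only volume above the free allotment is billed."""
    billable_gib = max(0.0, ingested_gib - free_gib)
    return billable_gib * price_per_gib


if __name__ == "__main__":
    # Illustrative values, not quoted from the pricing page.
    charge = monthly_ingestion_charge(
        ingested_gib=120, free_gib=50, price_per_gib=0.50
    )
    print(charge)  # 70 billable GiB * 0.50 = 35.0
```

A project that ingests less than the free allotment in a month pays nothing for ingestion under this rule.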

Validate Your Knowledge

Question 1

You are working as a Cloud Security Officer in your company. You are asked to log all read requests and activities on your Cloud Storage bucket where you store all of the company’s sensitive data. You need to enable this feature as soon as possible because this is also a compliance requirement that will be checked on the next audit.

What should you do?

- Enable Data Access audit logs for Cloud Storage

- Enable Identity-Aware Proxy feature on the Cloud Storage.

- Enable Certificate Authority (CA) Service on the bucket.

- Enable Object Versioning on the bucket.

Question 2

Your company runs hundreds of projects on the Google Cloud Platform. You are tasked with storing the company’s audit log files for three years for compliance purposes. You need to implement a solution to store these audit logs in a cost-effective manner.

What should you do?

- On the Logs Router, create a sink with BigQuery as the destination to save audit logs.

- Configure all resources to be a publisher on a Cloud Pub/Sub topic and publish all the message logs received from the topic to Cloud SQL to store the logs.

- Develop a custom script written in Python that utilizes the Logging API to duplicate the logs generated by Operations Suite to BigQuery.

- Create a Cloud Storage bucket using a Coldline storage class. Then, on the Logs Router, create a sink. Choose Cloud Storage as a sink service and select the bucket you previously created.


Google Cloud Logging Cheat Sheet References:

https://cloud.google.com/logging

https://cloud.google.com/error-reporting/docs/

https://cloud.google.com/logging/docs/audit

https://cloud.google.com/logging/docs/export/configure_export_v2

https://cloud.google.com/stackdriver/pricing