Last updated on February 4, 2026

In today’s fast-growing world of artificial intelligence, Large Language Models (LLMs) like GPT, Claude, and Grok are becoming critical to digital transformation. These models can write human-like text, understand complex questions, and even automate business tasks.

As LLMs became smarter, they faced a major problem: managing context. Early versions struggled to maintain consistent conversations, use real-time data, and handle large workloads. To solve this, Model Context Protocol (MCP) Servers were introduced. They help LLMs manage context in a dynamic, secure, and intelligent way.

Early context management of LLMs before MCP Servers

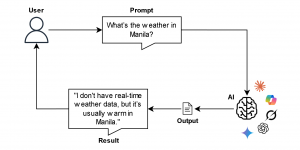

Before MCP Servers, most LLMs relied on static prompts and had only short-term memory. Developers manually added the necessary information to each request as part of prompt engineering. Each new question required reminding the model of the full context, which:

- Increased costs

- Slowed response times

- Caused inconsistent answers

Early LLMs also couldn’t connect to live data sources such as databases, APIs, or cloud storage. They could only use pre-trained knowledge, which quickly became outdated. While this worked for simple chatbots, it was impractical for modern, large-scale applications needing context-aware, real-time responses.
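To make the cost of manual context handling concrete, here is a minimal sketch of a chat loop that resends the full history on every turn. The function names and the sample conversation are hypothetical, and prompt length is measured in characters purely for illustration (real systems count tokens).

```python
# Illustrative only: a hypothetical chat loop that resends the whole history
# on every turn, which is the manual approach described above.

def build_prompt(background: str, history: list[tuple[str, str]], question: str) -> str:
    """Concatenate static background, all prior turns, and the new question."""
    lines = [background]
    for user_msg, model_msg in history:
        lines.append(f"User: {user_msg}")
        lines.append(f"Assistant: {model_msg}")
    lines.append(f"User: {question}")
    return "\n".join(lines)


def prompt_sizes(background: str, turns: list[tuple[str, str]]) -> list[int]:
    """Return the prompt length (in characters) sent at each turn."""
    sizes = []
    history: list[tuple[str, str]] = []
    for question, answer in turns:
        sizes.append(len(build_prompt(background, history, question)))
        history.append((question, answer))
    return sizes


if __name__ == "__main__":
    sample_turns = [
        ("Where is my order?", "It shipped yesterday."),
        ("When will it arrive?", "On Friday."),
        ("Can I change the address?", "Yes, until it is out for delivery."),
    ]
    # Each printed number is larger than the one before it.
    print(prompt_sizes("Customer: Ana.", sample_turns))
```

Because every request carries all earlier turns, the prompt grows with each question, which is where the extra cost and latency come from.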

The challenge in Context Management

As organizations deployed LLMs in real-world applications, the limits of manual context handling became clear. LLMs needed to:

- Access real-time data

- Remember past interactions

- Handle multiple users at once

Older systems weren’t built for these demands. Key challenges included:

- Data Relevance: Models couldn’t access updated information.

- Session Continuity: LLMs “forgot” previous conversations.

- Scalability: Managing context for many users caused slowdowns.

- Security and Compliance: Sensitive data embedded in prompts risked exposure.

These challenges showed the need for a better, more scalable, cloud-based way to manage context efficiently, which led to the creation of Model Context Protocol (MCP) Servers.

What is a Model Context Protocol (MCP) Server?

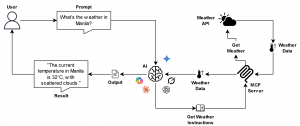

An MCP Server is a stand-alone service that implements the Model Context Protocol (MCP). It enables LLMs or agentic AI to access context, data, and tools in a standardized way. Essentially, it acts as a backend “tool” that enriches LLM responses beyond pre-trained knowledge.

Key Features of MCP Servers

- Standardized Interface – Every MCP Server follows a shared protocol. LLMs and agent applications send requests and receive responses in a consistent, structured format. This simplifies integration with different tools and systems.

- Exposed Capabilities (Tools) – MCP Servers define a list of capabilities or “tools”, for example:

  - Querying a database

  - Fetching a file

  - Calling an external API

The LLM only needs to know what task to perform. The MCP Server takes care of how that task is done behind the scenes.
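As a rough illustration of this split between "what" and "how", the sketch below registers one tool and answers two request types. MCP frames messages as JSON-RPC 2.0 and uses the method names `tools/list` and `tools/call`; everything else here (the `TOOLS` registry, the `tool` decorator, the `get_stock_price` tool and its hard-coded price) is a hypothetical stand-in. A production server would normally be built on an official MCP SDK, which also handles the initialization handshake omitted here.

```python
from typing import Any, Callable

# Hypothetical in-memory registry: tool name -> description, schema, handler.
TOOLS: dict[str, dict[str, Any]] = {}


def tool(name: str, description: str, input_schema: dict[str, Any]) -> Callable:
    """Register a plain function as a named tool."""
    def decorator(func: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = {
            "description": description,
            "inputSchema": input_schema,
            "handler": func,
        }
        return func
    return decorator


@tool(
    name="get_stock_price",
    description="Return the latest price for a ticker symbol.",
    input_schema={
        "type": "object",
        "properties": {"symbol": {"type": "string"}},
        "required": ["symbol"],
    },
)
def get_stock_price(symbol: str) -> str:
    # Stand-in for a real market-data lookup (the "how" the client never sees).
    prices = {"ACME": "12.34"}
    return prices.get(symbol, "unknown")


def handle_request(request: dict[str, Any]) -> dict[str, Any]:
    """Dispatch a JSON-RPC 2.0 request to the matching tool operation."""
    request_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        result: dict[str, Any] = {
            "tools": [
                {"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                for n, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        params = request.get("params", {})
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return {"jsonrpc": "2.0", "id": request_id,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        text = entry["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": request_id,
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request_id, "result": result}
```

The client only sends a tool name and arguments; swapping the hard-coded dictionary for a real data source would not change the request or response shape.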

- Transport-Agnostic Design – MCP Servers can communicate with clients (LLMs or agents) over different transport methods, such as:

  - STDIO (standard input/output)

  - HTTP, optionally with Server-Sent Events (SSE) for streaming responses

This flexibility means the same MCP Server can be used across various platforms and deployment environments.
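One way to picture transport independence is to keep the message handling in a single function and wrap it in thin transport adapters. The sketch below does this with the Python standard library only. It is a simplification under stated assumptions: `handle_message`, `serve_stdio`, and `HttpTransport` are hypothetical names, only the `ping` method is answered, and the HTTP adapter does not implement streaming or session handling.

```python
import io
import json
from http.server import BaseHTTPRequestHandler
from typing import TextIO


def handle_message(raw: str) -> str:
    """Transport-independent core: JSON-RPC text in, JSON-RPC text out."""
    request = json.loads(raw)
    if request.get("method") == "ping":
        response = {"jsonrpc": "2.0", "id": request.get("id"), "result": {}}
    else:
        response = {"jsonrpc": "2.0", "id": request.get("id"),
                    "error": {"code": -32601, "message": "Method not found"}}
    return json.dumps(response)


def serve_stdio(reader: TextIO, writer: TextIO) -> None:
    """STDIO adapter: one JSON message per line in, one per line out."""
    for line in reader:
        if line.strip():
            writer.write(handle_message(line) + "\n")
            writer.flush()


class HttpTransport(BaseHTTPRequestHandler):
    """HTTP adapter: the same core logic behind a POST endpoint."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length).decode("utf-8")
        payload = handle_message(body).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


if __name__ == "__main__":
    # Drive the STDIO adapter with in-memory streams instead of a real pipe.
    demo_out = io.StringIO()
    serve_stdio(io.StringIO('{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n'), demo_out)
    print(demo_out.getvalue(), end="")
```

In a real process, `serve_stdio(sys.stdin, sys.stdout)` would serve a local client, while `http.server.HTTPServer` with `HttpTransport` would expose the same logic over the network. Neither adapter needs to know what the tools do.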

- Context Delivery – When an LLM needs specific information (for example, a customer’s order data or the latest stock price), it sends a request to the MCP Server.

  The server:

  - Retrieves the needed data from the source (like a database, API, or file storage).

  - Packages it into a standard response format.

  - Sends it back to the client, which passes it to the LLM.

This process allows the model to generate responses using fresh, relevant, and structured context.
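The three steps above (retrieve, package, send back) can be sketched with an in-memory SQLite database standing in for the real data source. The `orders` table, its sample row, and all function names are assumptions made for illustration; the response mirrors the general shape of an MCP tool result (a `content` list plus an `isError` flag) wrapped in a JSON-RPC 2.0 reply.

```python
import json
import sqlite3
from typing import Optional


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with one hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, status TEXT)")
    conn.execute("INSERT INTO orders VALUES (1001, 'Ana', 'shipped')")
    conn.commit()
    return conn


def fetch_order(conn: sqlite3.Connection, order_id: int) -> Optional[dict]:
    """Step 1: retrieve the needed data from the source."""
    row = conn.execute(
        "SELECT id, customer, status FROM orders WHERE id = ?", (order_id,)
    ).fetchone()
    if row is None:
        return None
    return {"id": row[0], "customer": row[1], "status": row[2]}


def package_result(order: Optional[dict]) -> dict:
    """Step 2: package the data into a standard, structured response."""
    if order is None:
        return {"content": [{"type": "text", "text": "Order not found"}], "isError": True}
    return {"content": [{"type": "text", "text": json.dumps(order)}], "isError": False}


def deliver_context(conn: sqlite3.Connection, request_id: int, order_id: int) -> str:
    """Step 3: serialize the reply that goes back to the client."""
    result = package_result(fetch_order(conn, order_id))
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})
```

The client would hand the `text` payload to the model as context, so the model answers from the current row rather than from its training data.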

- Extensible and Customizable – Developers can create their own MCP Servers to expose proprietary data or integrate with specialized systems. Refer to this GitHub repository for examples and templates that can help you build custom MCP Servers for various use cases, from document retrieval to API automation.

- Separation of Model and Tools – An MCP Server decouples the LLM from its tools and data sources.

  This means:

  - The model stays lightweight and focused on reasoning and generation.

  - The server handles logic, data fetching, and computation.

This design makes the system more modular, secure, and easier to maintain, especially when building large-scale AI applications.

How MCP Servers Solve Context Management Challenges

An MCP Server serves as a dedicated link between a large language model (LLM) and external resources such as real-time data, tools, and session history. It helps overcome major context-management hurdles in four key ways:

- Access to Accurate, Relevant Data: Instead of relying on outdated training data, MCP Servers let LLMs request real-time information from databases, APIs, or other sources.

- Session Continuity and Memory of Interactions: MCP Servers store user-specific data like preferences or previous queries so LLMs can “remember” past interactions. This improves multi-step workflows and conversational consistency.

- Scalability and Modular Integration of Context Sources: MCP Servers allow many context sources to be connected through a standardized interface. LLMs can scale to handle many users without heavy prompt engineering. Adding new tools or data sources doesn’t require rebuilding the system.

- Improved Security and Governance of Context Usage: MCP Servers act as a controlled gateway for sensitive data. They enforce access policies, filter irrelevant data, and log requests. This improves security, compliance, and auditability.
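The session-memory and governance points can be illustrated together with a small gateway object that checks a policy, records every request, and keeps per-session history. This is a sketch of one possible server-side design, not something the protocol prescribes: the `ContextGateway` class, the role names, and the role-to-tool policy format are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ContextGateway:
    """Hypothetical gateway: checks policy, logs requests, remembers sessions."""

    tools: dict[str, Callable[[dict], str]]
    allowed: dict[str, set[str]]  # role -> names of tools that role may call
    audit_log: list[dict] = field(default_factory=list)
    sessions: dict[str, list[dict]] = field(default_factory=dict)

    def call(self, session_id: str, role: str, tool: str, args: dict) -> str:
        permitted = tool in self.tools and tool in self.allowed.get(role, set())
        # Every attempt is logged, including denied ones, for auditability.
        self.audit_log.append(
            {"session": session_id, "role": role, "tool": tool, "allowed": permitted}
        )
        if not permitted:
            raise PermissionError(f"role {role!r} may not call {tool!r}")
        result = self.tools[tool](args)
        # Keep per-session history so later requests can build on earlier ones.
        self.sessions.setdefault(session_id, []).append(
            {"tool": tool, "args": args, "result": result}
        )
        return result

    def history(self, session_id: str) -> list[dict]:
        """Return a copy of what this session has requested so far."""
        return list(self.sessions.get(session_id, []))


if __name__ == "__main__":
    gateway = ContextGateway(
        tools={"order_status": lambda args: f"order {args['order_id']}: shipped"},
        allowed={"support_agent": {"order_status"}},
    )
    print(gateway.call("session-1", "support_agent", "order_status", {"order_id": 1001}))
    print(len(gateway.history("session-1")), len(gateway.audit_log))
```

Adding a new data source here means adding one entry to `tools` and one to the policy, which is the kind of modular growth the list above describes. A production deployment would persist the log and session data rather than hold them in memory.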