Last updated on October 29, 2025

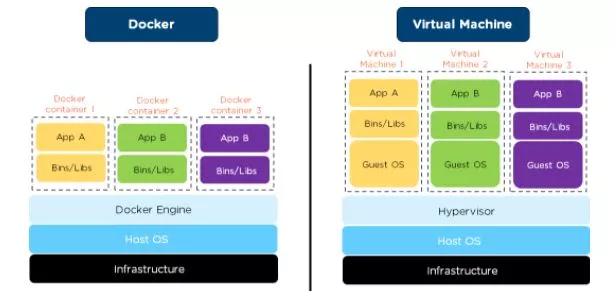

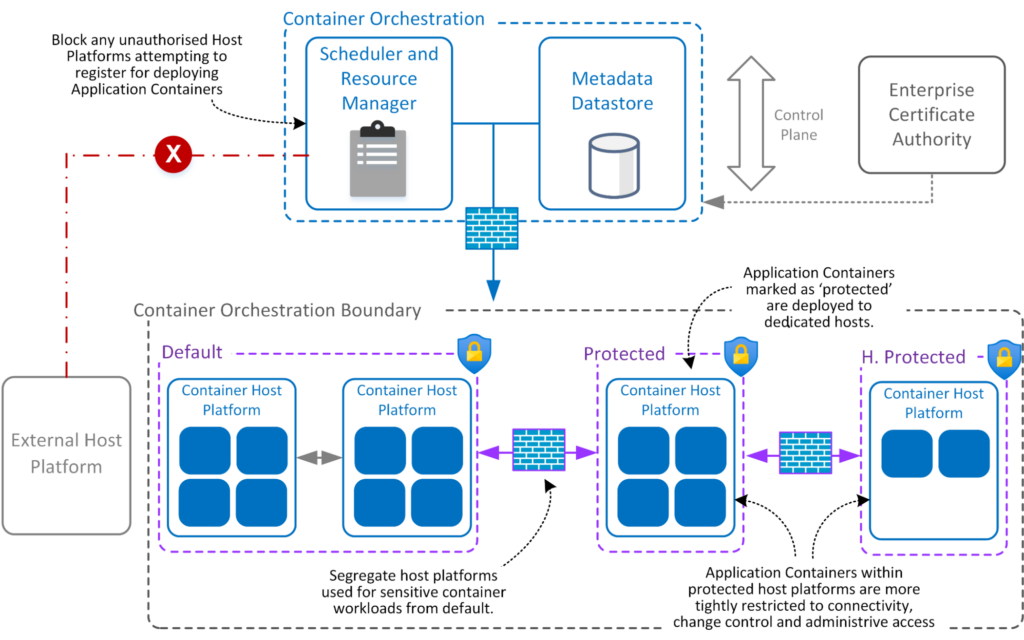

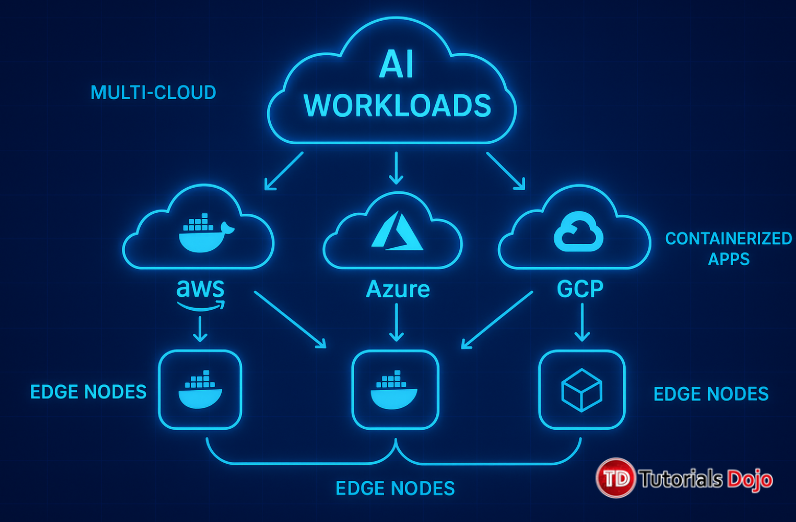

In 2025, software development is moving faster than ever. Businesses demand speed, reliability, and scalability. Cloud infrastructure is no longer optional; it’s the backbone of modern applications, powering everything from streaming platforms like Netflix to AI-driven fintech solutions. In this fast-paced environment, one technology has emerged as a game-changer for cloud engineers: Docker.

An illustration of a developer using Docker for containerized applications.

Picture this: a developer spends weeks building a web application. On their laptop, it runs flawlessly, with smooth performance and no errors. But once it is deployed to production, chaos erupts. Dependencies don’t match, libraries are missing, the database won’t connect, and the system crashes under load. The infamous phrase echoes: “But it works on my machine.”

This scenario, known as environment drift, has plagued engineers for decades. Docker doesn’t just solve this problem; it redefines how applications are built, shipped, and run, making containerization the foundation of modern cloud engineering. Containers package everything an app needs (code, libraries, and configuration) into a self-contained unit that behaves consistently across environments. Move it from your laptop to a cloud server, and it behaves the same way every time.

If you’re planning to learn Docker in 2025, now is the perfect time to start. As cloud computing continues to dominate modern infrastructure, Docker remains a must-have skill for every cloud engineer. It gives you an edge in automation and scalability and prepares you for the next wave of cloud-native innovation. Mastering Docker is more than a skill; it’s a career catalyst that unlocks opportunities in DevOps, cloud architecture, AI/ML infrastructure, and microservices development.

Think of your computer as a big house.
Traditionally, installing multiple applications meant filling it with furniture, appliances, and utilities: libraries, dependencies, and frameworks. Conflicts arose when two apps required different versions of the same software. It was like trying to combine a Victorian-style living room with a minimalist Scandinavian loft: messy and frustrating.

Docker solves this problem with containers, which are portable, self-contained apartments for each application. Each container carries everything it needs: code, runtime, libraries, and system tools. Move it anywhere (your laptop, AWS, Azure, or GCP) and it behaves consistently.

Technically, Docker is an open-source platform that automates application deployment in lightweight, portable containers. It relies on Linux kernel features such as namespaces (for isolation) and cgroups (for resource control), which keeps containers fast, efficient, and isolated without the overhead of traditional virtual machines.

A visual comparison of physical servers, virtual machines, and containers.

To understand Docker, let’s compare it with what came before:

- Physical servers (the old way): Imagine running only one app on one machine. If you wanted to run three apps, you needed three separate servers. This was expensive and inefficient.
- Virtual machines (the next step): VMs let you run multiple apps on a single physical server by splitting it into “mini-computers.” But each VM carries its own operating system, which makes VMs heavy and slow.
- Containers (the modern solution): Containers share the host operating system kernel while isolating apps. This makes them lightweight, fast, and portable.

Think of it this way: if VMs are like full houses, each with its own walls, plumbing, and wiring, then containers are like apartments in a high-rise. They share the same foundation but remain private, efficient, and easy to replicate.

For more official resources, visit the Docker Documentation or check out AWS’s guide on container services.

To understand why Docker has become a cornerstone of modern cloud engineering, it helps to compare it with virtual machines (VMs), a technology many engineers already know. Both VMs and containers isolate applications from one another, but they do it in very different ways and with very different efficiency.

A comparison of Docker containers and virtual machines, highlighting their differences in resource utilization and abstraction layers.

Virtual machines are like mini-computers running on a physical host. Each VM includes its own operating system, virtual CPU, memory, storage, and network stack. This provides strong isolation (one VM crashing usually doesn’t affect others), but it comes at a cost:

- Resource-heavy: Each VM requires significant CPU, memory, and disk space, even for small applications.
- Slow startup: Booting a VM means loading an entire operating system, which can take minutes.
- Maintenance overhead: Each VM’s operating system must be updated, patched, and managed separately, which adds operational complexity.

Imagine you are running three different applications, each in its own VM. You now have three full operating systems running at the same time, consuming substantial resources and slowing down your host machine.

Docker containers take a different approach. Instead of bundling an entire operating system for each application, containers share the host OS kernel while isolating the application and its dependencies. Think of containers as self-contained apartments inside a shared building: each unit is private, but the underlying infrastructure is shared efficiently. This design offers several advantages:

- Lightweight: Containers include only the libraries and files needed to run the application, so hundreds of containers can run on a single host without overloading it.
- Fast startup: Containers can launch in seconds or even milliseconds, compared to minutes for VMs.
- Portability: A container behaves the same way whether it’s running on a developer’s laptop, in a testing environment, or on a cloud server.

This efficiency is why companies such as Netflix, Spotify, and PayPal run thousands of containers every day. They can scale applications rapidly in response to user demand without provisioning massive infrastructure. Running the same workloads on VMs would require more hardware, take longer to spin up, and consume more energy, which is why containers are the preferred choice in cloud-native architectures.

Docker might seem complicated at first, but it becomes easier when you think of it as building and running apps in pre-packaged “boxes.” Here’s what each part means.

Think of a Docker image as a recipe or blueprint for an app. It contains everything the app needs to run:

- A base operating system userland (for example, Debian or Alpine Linux files, but not a kernel)
- Required runtimes and libraries (Python, Node.js, etc.)
- Configuration and settings

You don’t modify the original recipe. Instead, you add your own ingredients (your code and additional libraries) to create a custom image.

Analogy: If you’re baking a cake, the image is your cake recipe. It tells you exactly which ingredients and steps are needed.

A container is a cake baked from the recipe: the running instance of the image. Each container has:

- Its own filesystem: your app sees its own files, separate from other apps.
- Isolated processes: the app runs independently without affecting other containers.
- Network interfaces and IP addresses: it can communicate with other containers or the internet.

Even though containers are separate, they share the host’s operating system kernel. This is why containers are much faster and lighter than virtual machines, each of which runs its own OS.

Analogy: If the image is a cake recipe, the container is the actual cake. You can bake many cakes (containers) from the same recipe (image) without starting from scratch every time.

The Docker Engine is what makes Docker work.
It has three main parts:

- Docker Daemon (dockerd): Think of it as the “kitchen manager” that builds and runs containers.
- Docker CLI (command-line interface): The “chef’s tools” you use to tell Docker what to do.
- REST API: A way for other programs (such as Docker Compose or CI tools) to communicate with the daemon.

Without the Docker Engine, images and containers wouldn’t function. It’s the heart of Docker.

Docker images are built in layers, like stacking Lego bricks:

1. Base OS (Ubuntu)
2. Python runtime
3. Flask framework
4. Your app code

Each layer is reused when possible. If multiple apps use the same Python runtime layer, that layer isn’t duplicated, which saves disk space and makes downloading images faster.

Analogy: Think of layers as building blocks. You don’t rebuild everything each time; you just add the pieces you need.

Docker uses Linux features to keep containers isolated and resource-controlled:

- Namespaces: Ensure that a container’s processes, network, and files don’t interfere with others.
- Cgroups (control groups): Limit how much CPU, memory, or I/O a container can use, preventing one container from hogging all resources.

Analogy: Namespaces are like private rooms for each app, and cgroups are the rules for how much electricity or water each room can use.

Networking: Containers can communicate with each other or with the outside world through virtual networks. You can map container ports to host ports to make a service reachable from outside the host.

Registries: These are like app stores for images. You can pull images from Docker Hub (public), or a company can run its own private registry.

Analogy: Networking is like connecting rooms in an apartment building, and registries are the grocery stores where you get your ingredients (images).

Docker works by combining images, containers, and the Docker Engine into a workflow that makes applications portable, isolated, and fast.
The “How Docker Works” table walks through this workflow step by step, with commands you can try.

Core idea: Docker packages everything an app needs into a self-contained unit (a container) that runs consistently anywhere. This reduces setup issues, saves resources, and enables fast scaling.

In 2025, software development is faster, more distributed, and more cloud-driven than ever. Companies are embracing microservices, continuous deployment, and multi-cloud strategies, and Docker has become a key enabler of these trends because it makes application deployment lightweight, fast, portable, and scalable. Its main advantages are consistency across environments, lightweight efficiency, scalability, portability, and broad industry adoption.

For engineers in 2025, learning Docker isn’t just about keeping up; it’s about gaining a competitive edge in cloud engineering, DevOps, and modern software infrastructure roles.

Docker is the backbone of modern CI/CD pipelines. Imagine a developer pushing code to a repository. Traditionally, the operations team manually configures servers, installs dependencies, and deploys the app, which is a slow and error-prone process. With Docker:

1. Code is packaged into a container image.
2. Automated tests run inside containers identical to production.
3. Passing tests trigger automated deployment to staging or production.

This helps ensure that what works in testing also works in production, reducing downtime and frustration. Teams using Jenkins, GitLab CI/CD, or GitHub Actions with Docker can deploy updates to thousands of users within minutes.

Learning Docker isn’t just about reading or watching tutorials; you need to practice. Tutorials Dojo PlayCloud AI offers a sandboxed, browser-based environment where you can experiment with Docker safely, without worrying about breaking your local system.

PlayCloud AI: Docker labs

In these labs, you can:

- Run multiple containers simultaneously: See how different services interact in real time.
- Link web apps to databases: Practice connecting containers, just like in real production setups.
- Test container networking: Explore how containers communicate and manage ports.
- Use Docker volumes for persistent storage: Learn how to keep data even after a container is removed or replaced.

These hands-on exercises help learners gain confidence with Docker commands, multi-container setups, and image management, bridging the gap between theory and real-world cloud scenarios. By practicing in PlayCloud AI, beginners and professionals alike can build the skills needed to deploy and manage containerized applications efficiently, which prepares them for roles in cloud engineering, DevOps, and modern software development.

Even though containers are isolated, they are not automatically secure. Engineers must follow best practices to protect applications, data, and infrastructure:

- Use minimal base images. Fewer unnecessary packages means a smaller attack surface.
- Run containers as non-root users. This limits the damage a compromised process can do, including to the host if it escapes the container.
- Scan container images for vulnerabilities with tools like Trivy or Clair, so that outdated libraries and known security flaws are caught before deployment.
- For sensitive workloads, such as those in fintech or healthcare, store images in private registries rather than public repositories. This helps maintain confidentiality and regulatory compliance.

By following these practices, teams can maintain a secure and compliant container ecosystem. This makes Docker not just a tool for efficiency but a reliable platform for enterprise-grade applications.

Illustration of how container orchestration enforces security boundaries and protection levels for application containers.

Containers are no longer just a development convenience; they are shaping the very future of cloud engineering.
Emerging trends show how Docker and containerization are expanding beyond traditional applications. Serverless containers run workloads only when needed, which optimizes resource usage and cost. In AI and machine learning, containers provide reproducible environments, so models can be trained, tested, and deployed consistently across platforms.

A futuristic diagram of AI workloads running in containers across AWS, Azure, GCP, and edge nodes, highlighting portability and scalability.

Edge computing is another frontier where containers are making a major impact. Deploying containers closer to users gives applications low-latency performance, which improves the experience for real-time services like gaming, IoT, and streaming analytics.

Mastering Docker today prepares engineers for the demands of 2025 and beyond: deploying scalable applications, adapting to multi-cloud environments, and maintaining reliability under heavy workloads. Containers are no longer optional; they are becoming the backbone of modern, cloud-native software engineering.

Docker isn’t just a tool; it’s a paradigm shift in how applications are built, deployed, and scaled. From solving the “it works on my machine” problem to enabling microservices, AI workloads, and edge computing, containers have become the foundation of modern cloud engineering.

For engineers in 2025, mastering Docker means more than keeping up with technology trends; it means leading the future of software development. It opens doors to careers in DevOps, cloud engineering, and platform architecture, and it equips teams to deliver reliable, scalable, and portable applications across any environment. In a world where speed, efficiency, and adaptability define success, Docker lets you build once, run anywhere, and scale on demand.
The future of cloud engineering is containerized, and those who embrace it today will be the architects of tomorrow’s technology landscape.

Merkel, Dirk. Docker: Lightweight Linux Containers for Consistent Development and Deployment. Linux Journal, 2014.

Boettiger, Carl. An Introduction to Docker for Reproducible Research. ACM SIGOPS Operating Systems Review, 2015.

Docker Documentation. https://docs.docker.com

Tutorials Dojo PlayCloud AI Labs. https://portal.tutorialsdojo.com

Pahl, Claus. Containerization and the PaaS Cloud. IEEE Cloud Computing, 2015.

BUT FIRST, WHAT IS DOCKER?

Docker vs Virtual Machines

Virtual Machines (VMs)

Docker Containers

Understanding Docker’s Core Components

1. Docker Images – The Blueprints

The official `python:3.12` image, for instance, is a recipe for a Python environment: it contains a Debian-based Linux userland with Python 3.12 installed.

2. Containers – Running Apps in Isolation
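Here is a toy model in plain Python that makes the recipe-versus-cake distinction concrete. The `Image` and `Container` classes are hypothetical illustrations. They are not Docker’s API, and they do not reflect how a storage driver such as overlay2 is actually implemented. The sketch assumes a simplified copy-on-write design: an image is a read-only set of files, and each container adds its own private writable layer on top.

```python
from __future__ import annotations

from types import MappingProxyType


class Image:
    """Toy image: a read-only snapshot of files (the "recipe")."""

    def __init__(self, files: dict[str, str]) -> None:
        # MappingProxyType is a read-only view, so nothing can edit the image in place.
        self.files = MappingProxyType(dict(files))


class Container:
    """Toy container: the image's files plus a private writable layer (the "cake")."""

    def __init__(self, image: Image) -> None:
        self.image = image
        self.writable: dict[str, str] = {}  # files created or modified by this container
        self.deleted: set[str] = set()      # image files hidden from this container only

    def read(self, path: str) -> str | None:
        if path in self.deleted:
            return None
        if path in self.writable:           # the container's own changes win
            return self.writable[path]
        return self.image.files.get(path)   # otherwise fall through to the image

    def write(self, path: str, content: str) -> None:
        self.deleted.discard(path)
        self.writable[path] = content       # copy-on-write: the image is untouched

    def delete(self, path: str) -> None:
        self.writable.pop(path, None)
        self.deleted.add(path)


# Two containers baked from the same recipe.
image = Image({"/app/config.txt": "debug=false"})
web1, web2 = Container(image), Container(image)
web1.write("/app/config.txt", "debug=true")

print(web1.read("/app/config.txt"))    # debug=true
print(web2.read("/app/config.txt"))    # debug=false
print(image.files["/app/config.txt"])  # debug=false
```

A container’s changes live only in its writable layer, so removing the container (for example, with `docker rm`) discards them. This is why data that must outlive a container belongs in a volume.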

3. Docker Engine – The Core Technology

You interact with the Engine mainly through CLI commands such as `docker run` and `docker ps`.

4. Union File System & Layers – Efficiency
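Layer reuse works because layers are content-addressed. Each layer is identified by a SHA-256 digest of its contents, so identical layers get identical IDs and only need to be stored or downloaded once. The sketch below is a simplified Python model of that idea. The `LayerStore` class is hypothetical, and byte strings stand in for real layer archives. The model ignores compression, and it ignores the fact that Docker’s build cache also depends on the layers beneath each one.

```python
from __future__ import annotations

import hashlib


def digest(content: bytes) -> str:
    """Content address of a layer: identical bytes always give the same ID."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


class LayerStore:
    """Toy content-addressable store that keeps each unique layer once."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def add_image(self, layers: list[bytes]) -> list[str]:
        ids = []
        for layer in layers:
            layer_id = digest(layer)
            self.blobs.setdefault(layer_id, layer)  # already stored? reuse it
            ids.append(layer_id)
        return ids  # an image is an ordered list of layer digests


shared = [b"ubuntu base filesystem", b"python runtime"]
flask_app = shared + [b"flask framework", b"flask app code"]
django_app = shared + [b"django framework", b"django app code"]

store = LayerStore()
flask_ids = store.add_image(flask_app)
django_ids = store.add_image(django_app)

print(len(flask_app) + len(django_app))  # 8
print(len(store.blobs))                  # 6
```

The two images reference eight layers in total, but the shared base and Python runtime are stored only once, leaving six unique blobs.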

5. Isolation with Namespaces & Cgroups
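Under the hood, cgroup limits are plain numbers. According to the Docker documentation, `--cpus=1.5` is equivalent to `--cpu-period=100000 --cpu-quota=150000`. In other words, the container may use 150,000 µs of CPU time in every 100,000 µs window, which is 1.5 CPUs’ worth. The helpers below are a hypothetical sketch of that arithmetic:

- `cpus_to_cpu_max` formats the result the way cgroup v2’s `cpu.max` file expresses it (`quota period`).
- `memory_to_bytes` converts a `--memory` value, which uses binary units (`512m` is 512 × 1024² bytes), into the byte count a memory limit holds.

Namespaces have no comparably simple numeric form, so they are left out.

```python
DEFAULT_PERIOD_US = 100_000  # CFS period behind Docker's --cpus option (100 ms)

_MEMORY_UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def cpus_to_cpu_max(cpus: float, period_us: int = DEFAULT_PERIOD_US) -> str:
    """Translate a --cpus value into a cgroup v2 cpu.max line: "<quota> <period>"."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    quota_us = round(cpus * period_us)  # CPU time allowed per period, in microseconds
    return f"{quota_us} {period_us}"


def memory_to_bytes(limit: str) -> int:
    """Convert a --memory style value such as '512m' or '2g' into bytes."""
    value = limit.strip().lower()
    if value and value[-1] in _MEMORY_UNITS:
        return int(float(value[:-1]) * _MEMORY_UNITS[value[-1]])
    return int(value)  # no suffix: the value is already in bytes


print(cpus_to_cpu_max(1.5))     # 150000 100000
print(memory_to_bytes("512m"))  # 536870912
```

On a Linux host that uses cgroup v2, the `cpu.max` file for a container started with `--cpus=1.5` should show the same pair of numbers. Where that file lives depends on your cgroup setup.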

6. Networking & Registries
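Two small rules explain a lot of everyday networking and registry behavior:

- How a `-p` port mapping is read.
- How a short image name like `python:3.12` expands to a full registry reference. Docker Hub (`docker.io`) is the default registry, official images live in the `library/` namespace, and `latest` is the tag when none is given.

The Python functions below are a simplified, hypothetical sketch of both rules. They skip IPv6 addresses, port ranges, and image digests, so treat them as an illustration rather than Docker’s actual parser.

```python
def parse_publish(spec: str) -> dict:
    """Read a simplified -p value: [host_ip:][host_port:]container_port[/protocol]."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    host_ip, host_port = "0.0.0.0", ""      # default: listen on all interfaces
    if len(parts) == 1:
        container_port = parts[0]           # host port left for Docker to choose
    elif len(parts) == 2:
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")
    return {
        "host_ip": host_ip,
        "host_port": int(host_port) if host_port else None,
        "container_port": int(container_port),
        "protocol": protocol or "tcp",
    }


def normalize_image_ref(ref: str) -> str:
    """Expand a short image name to registry/namespace/name:tag."""
    first, slash, rest = ref.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest        # explicit registry host (maybe with port)
    else:
        registry, path = "docker.io", ref   # Docker Hub is the default registry
        if "/" not in path:
            path = "library/" + path        # official images live under library/
    name, colon, tag = path.rpartition(":")
    if not colon:
        name, tag = path, "latest"          # no tag given
    return f"{registry}/{name}:{tag}"


print(parse_publish("8080:80"))
print(normalize_image_ref("python:3.12"))  # docker.io/library/python:3.12
print(normalize_image_ref("nginx"))        # docker.io/library/nginx:latest
```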

Running `docker run -p 8080:80 nginx`, for example, publishes the container’s port 80 on host port 8080, so the nginx web server is reachable at `localhost:8080`.

How Docker Works

| Step | What Happens | Docker Command Example |
|---|---|---|
| 1. Build Image | Create a Dockerfile describing your app and its dependencies. Docker builds a layered image containing the base OS files, libraries, and your app. | `docker build -t myapp:latest .` |
| 2. Run Container | Docker launches a container, a live instance of the image. The container has its own filesystem, processes, and network, but shares the host OS kernel. `-p 8080:80` maps host port 8080 to port 80 inside the container. | `docker run -d -p 8080:80 myapp:latest` |
| 3. Isolated Execution | Each container runs independently. Linux namespaces isolate processes and networking, and cgroups limit resources. | `docker stats` (shows live CPU and memory usage per container) |
| 4. Networking | Containers communicate with each other through virtual networks. Map container ports to the host machine to access apps externally. | `docker network create mynetwork`, then `docker run --network mynetwork -d myapp` |
| 5. Pull & Push from Registry | Pull images from public or private registries, or push your custom images to share them. | `docker pull python:3.12`; `docker tag myapp:latest myrepo/myapp:latest`, then `docker push myrepo/myapp:latest` |
| 6. Scale & Deploy Anywhere | The same image can run on your laptop, a staging server, or a cloud server without changes. You can spin up multiple containers in seconds. | `docker compose up --scale web=3` (runs 3 instances of the `web` service defined in your Compose file) |

Why Docker Matters in 2025

Consistency Across Environments: An application that runs on a developer’s laptop behaves the same way on staging servers or cloud clusters, eliminating the dreaded “it works on my machine” problem.

Lightweight and Efficient: Containers share the host OS kernel, so they start in seconds, use fewer resources than virtual machines, and allow hundreds of containers to run on a single server.

Scalability: Docker is a natural fit for microservices architecture. You can scale specific services independently, spin up multiple containers quickly, and handle high traffic efficiently.

Portability: Docker containers can move across AWS, Azure, GCP, or local development environments with little or no change, as long as the image matches the host’s CPU architecture or is built for multiple architectures. This makes multi-cloud strategies easier and reduces vendor lock-in.

Industry Adoption: Companies like Netflix, Spotify, and PayPal rely on containers at scale, deploying thousands of them daily for speed, reliability, and scalability.

Docker in DevOps & CI/CD
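The CI/CD pipeline described earlier relies on one property: the image is built once, and the exact artifact that passed the tests is what gets promoted to each environment. Here is a toy Python sketch of that idea. The pieces are stand-ins:

- `build` stands in for `docker build` by hashing the source into a digest.
- The test callables stand in for suites that would really run inside containers started from that image.
- `run_pipeline` is a hypothetical helper, not part of Jenkins, GitLab CI/CD, or GitHub Actions.

```python
from __future__ import annotations

import hashlib
from typing import Callable


def build(source: str) -> str:
    """Stand-in for `docker build`: derive an immutable digest from the source."""
    return "sha256:" + hashlib.sha256(source.encode()).hexdigest()


def run_pipeline(
    source: str,
    tests: list[tuple[str, Callable[[str], bool]]],
    environments: list[str],
) -> dict:
    digest = build(source)                 # build exactly once
    for name, check in tests:
        if not check(source):              # any failing suite stops the pipeline
            return {"status": "failed", "stage": name, "deployed": {}}
    # Promote the same digest everywhere; nothing is rebuilt per environment.
    return {"status": "passed", "digest": digest,
            "deployed": {env: digest for env in environments}}


result = run_pipeline(
    "print('hello')",
    tests=[("unit", lambda src: "print" in src)],
    environments=["staging", "production"],
)
print(result["status"])  # passed
```

Deploying by digest rather than by a mutable tag such as `latest` is what makes promotion traceable, because every environment can be checked against the digest that was tested.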

Hands-On Practice: Tutorials Dojo PlayCloud AI

Security Best Practices
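Two of the security practices discussed earlier can be checked mechanically in a Dockerfile: pinning a specific base image and dropping root. The function below is a hypothetical, deliberately small Python sketch of such a check. Dedicated linters such as hadolint go much further. The sketch makes these assumptions:

- Each instruction fits on one line (no `\` continuations).
- An untagged or `:latest` base image counts as unpinned.
- The final stage runs as root unless that stage sets a `USER` other than `root` or `0`.

```python
from __future__ import annotations


def audit_dockerfile(text: str) -> list[str]:
    """Return warnings for an unpinned base image or a final stage running as root."""
    warnings: list[str] = []
    stages: set[str] = set()   # names declared with "FROM ... AS <name>"
    final_user = None          # USER set in the current (eventually final) stage
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, args = line.partition(" ")
        instruction = instruction.upper()
        if instruction == "FROM":
            final_user = None  # each stage starts over; USER does not carry across
            words = [w for w in args.split() if not w.startswith("--")]  # skip --platform
            image = words[0]
            last_part = image.rsplit("/", 1)[-1]  # ignore a registry host:port prefix
            pinned = "@" in image or (":" in last_part and not last_part.endswith(":latest"))
            if image != "scratch" and image not in stages and not pinned:
                warnings.append(f"unpinned base image: {image}")
            if len(words) >= 3 and words[1].upper() == "AS":
                stages.add(words[2])
        elif instruction == "USER":
            final_user = args.split(":")[0].strip()  # drop an optional :group
    if final_user in (None, "root", "0"):
        warnings.append("final stage runs as root; add a non-root USER")
    return warnings


dockerfile = """\
FROM python:3.12-slim
WORKDIR /app
COPY . .
RUN pip install flask
RUN useradd --create-home appuser
USER appuser
CMD ["python", "app.py"]
"""
print(audit_dockerfile(dockerfile))  # []
print(audit_dockerfile('FROM python\nCMD ["python"]\n'))  # two warnings
```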

The Future of Containerization

Conclusion

References