Last updated on October 17, 2025

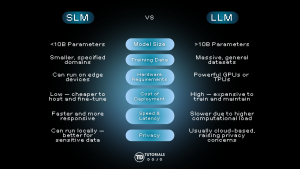

Have you ever needed to find a new charger for your device, only to discover that its voltage wasn’t compatible, causing it not to work or even risking damage? Without checking the actual needs of your device, you’ve probably thought that you could go with what the seller recommends as the “highest quality” rather than your device’s fitting needs. With the current utilization of AI for businesses, bigger doesn’t always mean better. Large Language Models (LLMs) like GPT, Gemini, Claude, etc. have been in the spotlight for their high-performing power for computational tasks and content generation capabilities. But let’s be honest, not all businesses actually need them. LLMs perform well at a cost. They demand a high GPU or TPU for training and development, a large amount of energy, expensive operational costs, and, because of its size, it may introduce latency issues. In short, LLMs are resource-intensive, which can sometimes be a problem for small and medium-sized businesses. This is where Small Language Models come into light. Small Language Models (SLMs) are compact transformer-based language models whose parameter counts range from a few million to several billion. There isn’t a single industry definition, but in practice, SLMs are commonly the lighter end of the model-size spectrum (models in the ~100M-8B range are frequently described as SLMs). Compared with large LLMs, they aim to provide strong task performance while reducing compute, memory, and energy requirements. Examples: SLMs are based on LLMs. Like LLMs, they also employ a neural-network-based architecture known as transformer models. Transformers use encoders to convert text into embeddings, a self-attention mechanism to identify the essential words, and decoders to generate the most probable output. To create practical yet compact models, model compression techniques are applied to retain accuracy while decreasing size: To simplify, the difference between small and large language models is shown below: Whether you use SLMs or LLMs still depends on your business needs. There’s no denying that LLMs are an excellent choice for complex tasks. Though they may cost a lot, they may benefit large enterprises that do not only focus on one department in the long run. On the other hand, small language models are ideal for specific domains requiring efficiency and precision, but are also cost-effective, like the medical, legal, and finance fields. Small language models are suitable if businesses want their models to run on local or edge devices while delivering practical solutions catered to their needs. When to pick an SLM When to pick an LLM Small language models can be fine-tuned on specific datasets, which allows enterprises to use them for their specialized applications. Their compact yet efficient characteristic makes them suitable for the following real-world use cases: Recent papers and benchmark reports indicate that SLMs can match or outperform larger models on specific tasks (reasoning, instruction following, code generation at scale) when well-trained, distilled, and tuned. For example, a 2025 comparative study found families of SLMs (sizes in the low billions) performing competitively with much larger models on several benchmarks; however, results vary by task and benchmark. That means SLMs are increasingly comparable for many production use cases but are not universally superior to LLMs. In the study “Small Language Models are the Future of Agentic AI,” the authors claimed that SLMs are sufficient to provide for AI agents. They extensively compared SLMs with LLMs by assessing their reasoning ability, tool calling, code generation, and instruction following. Here are some of their gathered results: If you’re planning to use a Small Language Model in your project or business, here are few things to keep in mind before deploying it: Small language models are no longer just “baby” models. They are practical, production-ready tools that bridge the gap between expensive LLMs and constrained real-world deployments. For many businesses, especially those prioritizing cost, privacy, and latency, SLMs offer a powerful alternative. With that being said, evaluate models against your specific tasks and business constraints: run benchmarks, validate safety, and combine techniques (distillation, LoRA, quantization) for the best results.

What are SLMs?

How do SLMs work?

SLMs vs LLMs

So, LLMs for large businesses and SLMs for small businesses?

Use-cases of SLMs

Can SLMs really match bigger models?

Deployment Considerations

Conclusion

References: