You’re scrolling. One second it’s a heartfelt story, the next it’s a video of someone saying something that feels… off.

Except… they didn’t.

Note: This photo was generated with Sora.

Welcome to the era of synthetic media, where online content is changing fast. From AI-written news articles to deepfake videos that look and sound real, it’s becoming harder to tell what’s authentic.

Let’s talk about the flood of synthetic media, the blur between authentic and AI, and why this matters now more than ever. Not to be scared, but to understand and to open a conversation. If the internet becomes mostly “machine-made”, how do we stay grounded in what’s real and navigate that responsibly?

Why are we seeing so much AI content?

Tools like ChatGPT, DALL·E, and Sora make it easier than ever to generate content in seconds. We’re talking blog posts, product descriptions, art, scripts, and even full videos, all created from little more than a typed prompt, with no camera or studio involved.

As more people tap into AI tools for speed and convenience, it’s clear we’re entering a phase where synthetic content isn’t just common… it’s everywhere. It’s creative, sure. But it also means more content competing for our attention and less time to figure out what’s genuine.

So many AI tools, but what’s the impact?

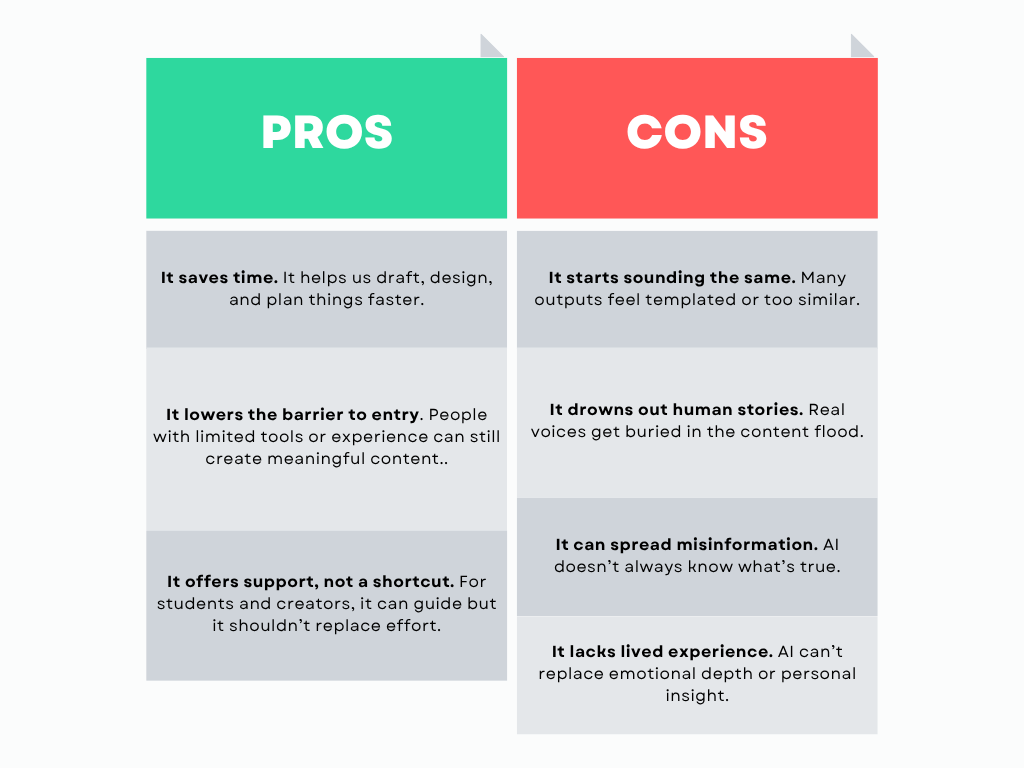

Let’s be honest… AI tools like ChatGPT, DALL·E, and Sora are super helpful. We use them to get things done faster, organize our thoughts, create visuals, even bring ideas to life we might not have been able to before. But as with anything powerful, it comes with a mix of good and not-so-good.

Is Authenticity Getting Lost?

Let’s talk about something called “signal-to-noise ratio.” The idea is that in a world full of information (noise), the real and important stuff (signal) can be hard to hear.

AI models don’t really “understand” the content they generate. They remix patterns learned from data scraped from blogs, books, tweets, videos, even Reddit threads. That means synthetic content can echo misinformation or be filled with fluff.

And when search engines and social platforms boost what’s popular, not what’s true… well, the signal gets buried.

Are we getting better at spotting truth or just better at scrolling past it?

Are Deepfakes Just Fun Filters, or Something More Serious?

A deepfake is a piece of media, usually video or audio, that uses AI to mimic real people. It can be really scary.

Some of it’s fun or artistic. Deepfakes have been used in films to de-age actors. Others are used to create accessible voice-overs or even interactive avatars. And honestly? Some of them look too real.

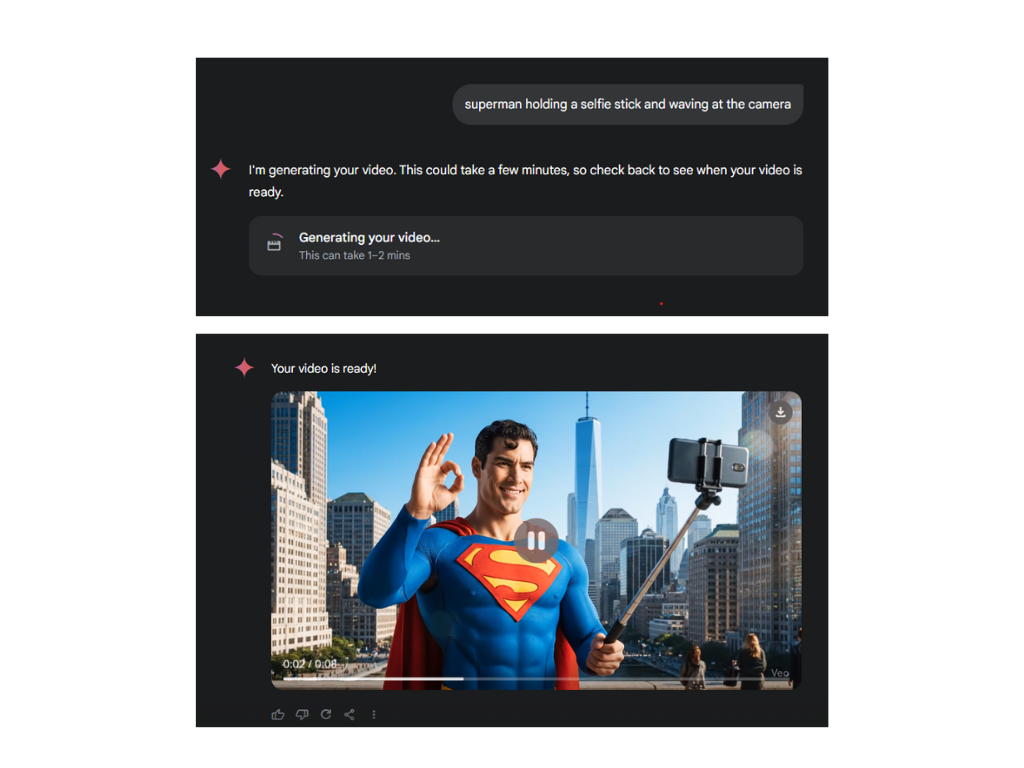

Take Google Veo, for example. It’s one of the newest text-to-video models capable of producing HD, cinematic-quality videos just from a short written idea.

e.g. Prompt to Generate a Video

But there’s a darker side. In 2024, an employee at a multinational company’s Hong Kong office was tricked into transferring about $25 million after fraudsters staged a video call using deepfakes of senior colleagues. In the UAE, a similar scam used a cloned voice to steal $35 million (Global News).

Can We Still Trust What We See and Hear?

There was a time when video or audio was all the proof we needed. A clip or recording felt like undeniable evidence. But now, with just a prompt and an AI tool, you can create a completely fake interview.

According to a CBS News report, scammers have used AI to clone voices and trick people, especially older adults, into thinking their loved ones were in trouble. Some families now use safe words because these fake calls sound so real.

The hard part is that we aren’t always taught how to check what’s real anymore. We hear something convincing, and we react. We trust what’s familiar.

But maybe the better question to ask is:

Are we taking the time to figure out what’s real, or are we just moving too fast to notice?

We don’t have to be experts, but we do need to pay attention. In a world where almost anything can be faked, our best tool isn’t an app or extension. It’s how we think, how we pause, and how we question what we see.

Because the one thing AI can’t do is care about the truth the way we do.

So… What Can We Do?

We don’t need to panic, but we do need to stay alert.

Here are a few realistic ways to cut through the noise and stay grounded in what’s real:

- Check the source

Ask yourself: Who published this? Is the account verified? Have you seen this person or outlet before?

- Look for content signals

Some AI-generated images and videos now include digital watermarks or metadata, like Content Credentials from Adobe. Pay attention to timestamps, original author tags, and other context clues.

- Use tools wisely

Free browser tools can help, like Hive AI Detector for text and images, Deepware Scanner for suspicious audio or video, or Google’s “About this Image” to trace where a photo came from. Just remember, no tool is perfect. Use them to guide you, not as the final word.

- Pause before you react

If something feels off, it probably is. Take a moment before you share it. Cross-check the information and ask yourself, “Does this actually make sense coming from this person or source?”
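If you’re comfortable with a little code, the “look for content signals” tip can be roughed out in a few lines of Python. This is only a sketch, and it rests on an assumption: that Content Credentials data is embedded in a file under labels like `c2pa` and `jumb`, so those byte strings tend to show up in files that carry it. The function names here are made up for illustration. It does not verify anything, and a file with no markers may simply have had its metadata stripped.

```python
from pathlib import Path

# Assumption: Content Credentials (C2PA) data is stored in a box labeled
# "c2pa" inside a "jumb" container, so these byte strings hint at its presence.
MARKERS = (b"c2pa", b"jumb")


def find_provenance_markers(data: bytes) -> list[str]:
    """Return the marker strings that appear in the raw bytes of a media file."""
    return [marker.decode("ascii") for marker in MARKERS if marker in data]


def describe(path: str) -> str:
    """Give a cautious, human-readable hint for one file on disk."""
    found = find_provenance_markers(Path(path).read_bytes())
    if found:
        return f"{path}: possible Content Credentials data (found {', '.join(found)})"
    return f"{path}: no markers found (this proves nothing either way)"
```

You could call `describe("photo.jpg")` on any image you’ve saved (the file name is just a placeholder). Treat the result like the detector tools above: a hint to look closer, not the final word.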

In the end, a little curiosity and caution go a long way.

Conclusion

The internet is changing fast. And with so much AI-generated content out there, it’s easy to feel overwhelmed or unsure about what’s real.

But here’s the truth: authenticity still matters. Real voices, real stories, and real experiences still cut through, especially when we choose to pay attention to them.

We don’t have to become tech experts. But we do need to become more intentional. More mindful. More curious. Because in a world full of copies, being real is one of the boldest things we can be.

So take a moment, trust your judgment, and hold onto what’s real, because that’s how we keep truth alive.