Last updated on November 16, 2025

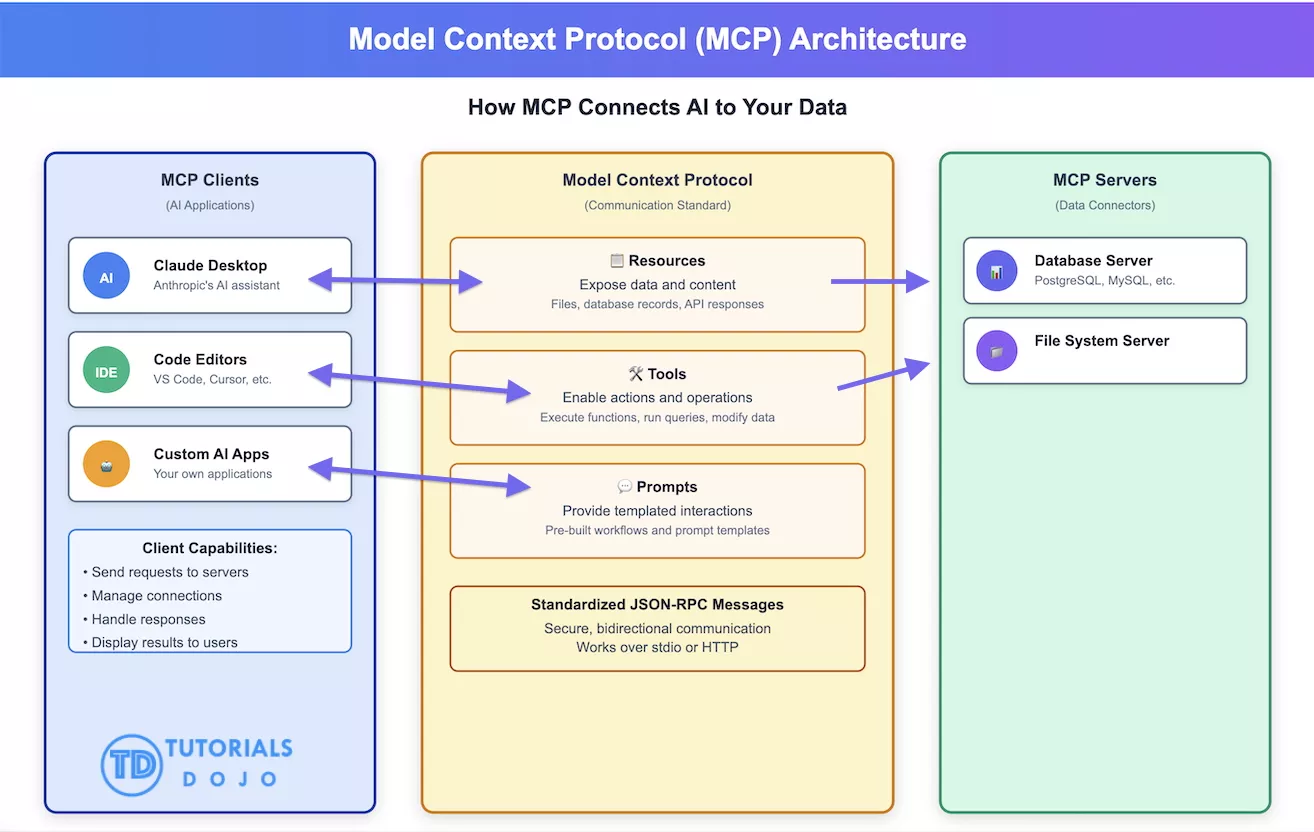

The Model Context Protocol (MCP) is an open, model-agnostic protocol introduced by Anthropic in November 2024. It standardizes how AI systems, in particular large language models (LLMs), connect to external data sources and tools through a JSON-RPC interface.

- Often likened to a “USB‑C port for AI,” offering a universal interface rather than bespoke integrations per system.
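
To make the JSON-RPC framing concrete, here is a minimal sketch of how a client might build a tool-invocation request. It assumes JSON-RPC 2.0 framing and the `tools/call` method described in the MCP specification; the helper `make_request`, the tool name `search_docs`, and its arguments are hypothetical and only illustrate the message shape.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids; any value unique per request works


def make_request(method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request as a JSON string."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": method,
        "params": params,
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
request = make_request(
    "tools/call",
    {"name": "search_docs", "arguments": {"query": "quarterly report"}},
)
print(request)
```

Because every tool call uses the same envelope, a client needs only one serializer regardless of which server it talks to.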

Key Benefits of MCP

- Provides a standardized interface so LLMs can easily connect to multiple tools and data sources without custom adapters.
- Solves the “N×M” problem, removing the need to build a unique connector for every AI–tool combination.
- Ensures structured and validated exchanges, supporting better debugging, version control, and reliability in multi-agent systems.
- Gives developers access to a growing set of pre-built servers (e.g., GitHub, Slack, Google Drive) that can be reused across AI apps.
- Enables secure, offline-capable MCP servers, allowing agents to run locally without exposing sensitive data.
- Supports OAuth 2.1 and authorization safeguards, ensuring secure communication and controlled resource access.
- Allows LLMs to autonomously orchestrate multi-step tasks, deciding which tools to use and chaining actions together.
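
The “N×M” point comes down to simple arithmetic, sketched below. The function name and the example figures (5 AI apps, 20 tools) are made up for illustration; the assumption is that each app needs one MCP client and each tool one MCP server.

```python
def connectors_needed(n_apps: int, m_tools: int) -> tuple[int, int]:
    """Return (bespoke, standardized) integration counts."""
    bespoke = n_apps * m_tools       # one connector per app-tool pair
    standardized = n_apps + m_tools  # one client per app, one server per tool
    return bespoke, standardized


bespoke, standardized = connectors_needed(5, 20)
print(bespoke, standardized)  # 100 25
```

The gap widens as either side grows, which is why a shared protocol pays off most in large ecosystems.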

Examples

- Pre-built servers: GitHub, Google Drive, Slack, PostgreSQL, Puppeteer.
- Enterprise adoption: Block (Square), Replit, Codeium, Sourcegraph.
- Platform support:
  - OpenAI → ChatGPT desktop app, Agents SDK, Responses API.
  - Google DeepMind → Gemini models.
  - Microsoft → Windows AI Foundry, Copilot Studio.
- Claude Desktop → Can browse local files via MCP.

Use Cases

- Coding assistants in Replit and Zed read project context.
- Pull CRM records, docs, and knowledge bases into workflows.
- Natural-language queries → SQL → results.
- Search docs → query DB → send Slack message.
- LLMs plan and execute calls to the tools and resources that servers expose.
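
The “search docs → query DB → send Slack message” chain can be sketched as a toy pipeline. Everything here is hypothetical: the three functions are in-process stand-ins for tools that MCP servers would expose, and the step order is hard-coded, whereas in a real deployment the model would choose each tool call itself.

```python
from typing import Any, Callable


# Stand-ins for tools exposed by MCP servers; names and behavior are made up.
def search_docs(query: str) -> str:
    return f"doc mentioning {query}"


def query_db(topic: str) -> list[str]:
    return [f"row about {topic}"]


def send_message(channel: str, text: str) -> str:
    return f"sent to {channel}: {text}"


# A registry keyed by tool name, similar in spirit to a tool listing.
TOOLS: dict[str, Callable[..., Any]] = {
    "search_docs": search_docs,
    "query_db": query_db,
    "send_message": send_message,
}


def run_chain(query: str, channel: str) -> str:
    """Feed each tool's output into the next step of the chain."""
    doc = TOOLS["search_docs"](query=query)
    rows = TOOLS["query_db"](topic=doc)
    return TOOLS["send_message"](channel=channel, text="; ".join(rows))


result = run_chain("churn", "#sales")
print(result)
```

Looking tools up by name keeps the chain independent of where each tool actually runs, which is the property a shared protocol is meant to provide.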