Last updated on July 8, 2025

Recall-Oriented Understudy for Gisting Evaluation (ROUGE) Cheat Sheet

-

ROUGE is a family of metrics designed to assess the similarity between machine-generated text (candidate) and human-written reference text (ground truth) in NLP tasks like text summarization and machine translation.

-

Measures how well generated text captures key information and structure from reference text, emphasizing recall (proportion of relevant information preserved).

-

Score Range: 0 to 1, where higher scores indicate greater similarity between candidate and reference texts.

-

Key Use Cases:

-

Evaluating text summarization systems.

-

Assessing machine translation quality.

-

Analyzing content accuracy in generated text.

-

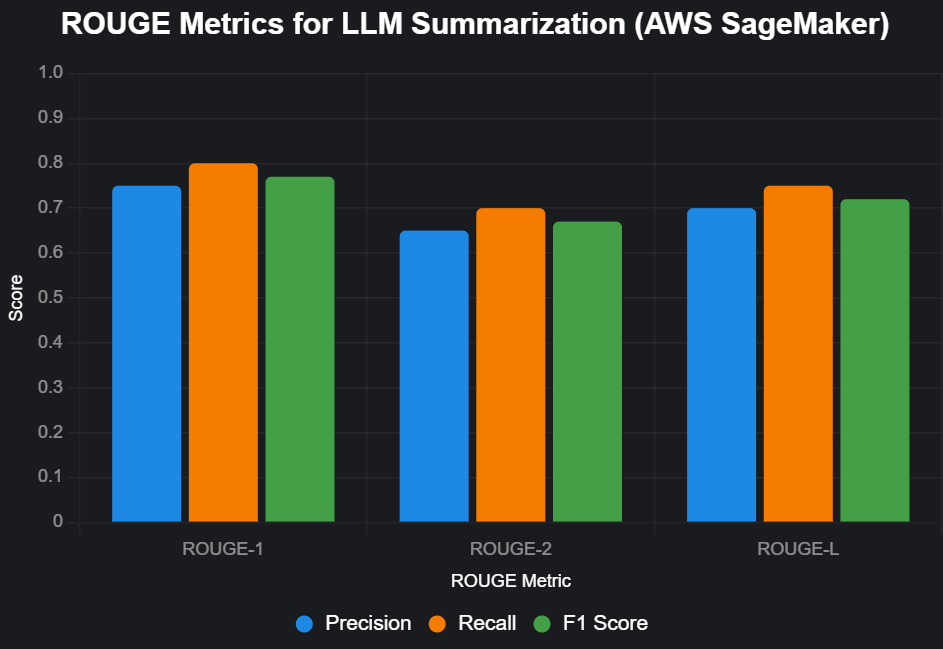

Types of ROUGE Metrics

-

ROUGE-N:

-

Measures the overlap of n-grams (sequences of n words) between candidate and reference texts.

-

Variants:

-

ROUGE-1: Unigram (single word) overlap.

-

ROUGE-2: Bigram (two consecutive words) overlap.

-

ROUGE-3, ROUGE-4, ROUGE-5: Trigram, 4-gram, and 5-gram overlaps, respectively.

-

-

Useful for assessing word-level and phrase-level similarity.

-

-

ROUGE-L:

-

Based on the Longest Common Subsequence (LCS) between candidate and reference texts.

-

Captures sentence-level structure and fluency by focusing on the longest in-sequence (but not necessarily consecutive) word matches.

-

Does not require consecutive matches, making it suitable for evaluating reordered but coherent text.

-

-

ROUGE-L-SUM:

-

Specifically designed for text summarization tasks.

-

Measures the LCS at the summary level, considering the order of words to evaluate how well the summary preserves the reference’s structure.

-

-

Other Variants (Less Common):

-

ROUGE-W: Weighted LCS, favoring longer consecutive matches.

-

ROUGE-S: Skip-bigram co-occurrence, allowing gaps between words in matching pairs.

-

ROUGE-SU: Combines skip-bigrams and unigrams for a more comprehensive evaluation.

-

Key Evaluation Measures

-

Recall: Proportion of n-grams (or LCS) from the reference text that appear in the candidate text.

-

Formula (ROUGE-N): (Number of overlapping n-grams) / (Total n-grams in reference)

-

Formula (ROUGE-L): (Length of LCS) / (Total words in reference)

-

-

Precision: Proportion of n-grams (or LCS) in the candidate text that appear in the reference text.

-

Formula (ROUGE-N): (Number of overlapping n-grams) / (Total n-grams in candidate)

-

Formula (ROUGE-L): (Length of LCS) / (Total words in candidate)

-

-

F1 Score: Harmonic mean of precision and recall, balancing both measures.

-

Formula: 2 * (Precision * Recall) / (Precision + Recall)

-

-

Interpretation:

-

High recall: Candidate captures most of the reference’s content.

-

High precision: Candidate includes few irrelevant elements.

-

High F1: Good balance of recall and precision, indicating overall similarity.

-

Advantages of ROUGE

-

Recall-Oriented: Prioritizes capturing all critical information from the reference, crucial for summarization tasks.

-

Flexible: Multiple variants (ROUGE-N, ROUGE-L, etc.) allow evaluation at different granularity levels (word, phrase, sentence).

-

Language-Independent: Works with any language, as it relies on syntactic overlaps.

-

Fast and Scalable: Computationally inexpensive, suitable for large-scale evaluations in AWS environments.

-

Correlates with Human Judgment: ROUGE scores often align with human assessments of content coverage and fluency.

Limitations

-

Syntactic Focus: Relies on word/phrase overlaps, missing semantic similarities (e.g., synonyms or paraphrases).

-

Reference Dependency: Requires high-quality human references, which may not always be available.

-

Context Insensitivity: Does not account for the broader context or domain of the text (e.g., legal vs. casual).

-

Preprocessing Sensitivity: Results can vary based on text normalization (e.g., case sensitivity, stop word removal).

-

Not Comprehensive Alone: Should be used alongside other metrics (e.g., BLEU, BERTScore) for a holistic evaluation.

Best Practices in AWS

-

Choose the Right ROUGE Variant:

-

Use ROUGE-1 for keyword presence in domains like legal or medical texts.

-

Use ROUGE-2 or higher for phrase-level accuracy.

-

Use ROUGE-L for sentence-level coherence in news or narrative summaries.

-

Use ROUGE-L-SUM for evaluating multi-sentence summaries.

-

-

Normalize Text: Ensure consistent preprocessing (e.g., lowercasing, removing punctuation) for candidate and reference texts to avoid skewed scores.

-

Set Thresholds: Adjust precision, recall, and F1 thresholds based on task requirements (e.g., higher recall for critical content preservation).

-

Combine Metrics: Use ROUGE with perplexity, BLEU, or human evaluations to capture fluency, precision, and ethical considerations (e.g., toxicity).

-

Leverage SageMaker:

-

Integrate ROUGE evaluations into SageMaker workflows using MLflow or rouge-score Python libraries.

-

Automate evaluations post-fine-tuning to streamline model validation.

-