Last updated on February 12, 2025

The AWS Certified Data Engineer – Associate (DEA-C01) certification exam evaluates a candidate’s ability to implement data pipelines and to address performance and cost issues while following AWS best practices. The certification validates the candidate’s proficiency in designing and implementing scalable, secure data solutions with AWS services. The target candidate should have at least 2-3 years of experience in data engineering and a solid understanding of how the volume, variety, and velocity of data affect ingestion, transformation, modeling, security, governance, privacy, schema design, and optimal data store design. The candidate should also have at least 1-2 years of hands-on experience with AWS services.

The exam consists of 65 multiple-choice and multiple-response questions, and you have 130 minutes to complete it. The standard version of the AWS Certified Data Engineer – Associate exam was released on March 12, 2024, and costs 150 USD. The exam assesses the candidate’s ability to complete the following tasks:

• Ingesting and transforming data, and orchestrating data pipelines while applying programming concepts.

• Identifying the most effective data store, creating data models, organizing schemas, and managing data lifecycles.

• Maintaining, operating, and monitoring data pipelines, and analyzing data while evaluating its quality.

• Implementing proper authentication, authorization, data encryption, and governance, and enabling logging for security purposes.

If you plan to take the AWS Certified Data Engineer – Associate exam, we suggest using the official AWS Exam Guide, AWS documentation, the Exam Prep Official Practice Question Set, our AWS Certified Data Engineer Associate Practice Exam, and AWS whitepapers as your study materials. The exam guide provides a detailed breakdown of the topics covered in the exam, while the other resources help you build the necessary skills. This certification can be a significant career boost for data engineering professionals because it validates your expertise in designing and implementing effective data solutions with AWS services.

Recommended general IT and AWS knowledge

The ideal candidate for the AWS Certified Data Engineer – Associate exam should possess the following general IT knowledge:

• Competence in configuring and maintaining extract, transform, and load (ETL) pipelines, covering the entire data journey from ingestion to the destination.

• Application of high-level, language-agnostic programming concepts tailored to the requirements of the data pipeline.

• Proficiency in utilizing Git commands for effective source code control, ensuring versioning and collaboration within the development process.

• Utilizing data lakes to store data.

• Understanding general networking, storage, and compute concepts, which provide a foundation for designing and implementing robust data engineering solutions.

For AWS-specific knowledge, the candidate should demonstrate proficiency in the following areas:

• Using AWS services to accomplish the tasks outlined in the exam guide’s introduction section.

• Understanding AWS services for encrypting, governing, protecting, and logging data within pipelines.

• Comparing AWS services based on differences in cost, performance, and functionality to select the best service for a specific use case.

• Structuring and running SQL queries on AWS services.

• Analyzing data, verifying data quality, and ensuring data consistency by using AWS services.

Job tasks that are out of scope for the target candidate

The exam assesses a specific set of skills and competencies, so the target candidate is not expected to perform every data-related task. The following non-exhaustive list clarifies which tasks are out of scope for the exam:

• Perform artificial intelligence and machine learning (AI/ML) tasks.

• Demonstrate knowledge of programming language-specific syntax.

• Draw business conclusions based on data.

For a comprehensive list of AWS services and features that are within or outside the scope of the exam, refer to the appendix of the official DEA-C01 exam guide. It provides detailed information to help you focus on the relevant topics and prepare for the AWS Certified Data Engineer – Associate exam.

AWS Certified Data Engineer Associate DEA-C01 Exam Domains

The official exam guide for the AWS Certified Data Engineer Associate DEA-C01 provides a comprehensive list of exam domains, relevant topics, and services that require your focus. The certification test comprises four exam domains, each with a corresponding coverage percentage:

• Domain 1: Data Ingestion and Transformation (34%)

• Domain 2: Data Store Management (26%)

• Domain 3: Data Operations and Support (22%)

• Domain 4: Data Security and Governance (18%)

The first domain, “Data Ingestion and Transformation,” has the highest exam coverage at 34%, so you should prioritize the topics in this section. Domains 2 and 3 account for 26% and 22% of the DEA-C01 exam, respectively. Domain 4, which focuses on Data Security and Governance, has the lowest coverage at 18%.

DEA-C01 Exam Domain 1: Data Ingestion and Transformation

Task Statement 1.1: Perform data ingestion.

The candidate should have deep knowledge of essential concepts, including the throughput and latency characteristics of AWS services used for data ingestion, data ingestion patterns, and the replayability of data pipelines. Understanding both streaming and batch data ingestion, along with stateful and stateless data transactions, is crucial. On the skills side, you should excel at reading data from various sources such as Amazon Kinesis and AWS Glue, configuring batch ingestion, consuming data APIs, setting up schedulers with tools such as Amazon EventBridge, and establishing event triggers such as Amazon S3 Event Notifications. You should also be adept at calling Lambda functions from Amazon Kinesis, creating IP address allowlists, implementing throttling and overcoming rate limits, and managing fan-in and fan-out for streaming data distribution.
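
Overcoming rate limits usually means retrying throttled calls with exponential backoff and jitter. The following is a minimal, library-free sketch of that technique, assuming a hypothetical `ThrottledError` raised by the wrapped call; `call_with_backoff` and its parameters are illustrative names, not an AWS API. In practice, the AWS SDKs include configurable retry modes, so you would usually tune those rather than hand-roll this logic.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class ThrottledError(Exception):
    """Hypothetical error raised when a downstream service rejects a call due to rate limits."""


def call_with_backoff(
    fn: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call fn, retrying on ThrottledError with exponential backoff and full jitter."""
    rng = rng if rng is not None else random.Random()
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except ThrottledError:
            if attempt == max_retries:
                raise  # give up after the final retry
            # Full jitter: wait a random time between 0 and the capped exponential delay.
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(rng.uniform(0, cap))
    raise AssertionError("not reached: the loop either returns or re-raises")


# Usage: a fake call that is throttled twice before succeeding.
attempts = {"n": 0}


def flaky_put() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise ThrottledError("rate exceeded")
    return "ok"


delays: list = []
result = call_with_backoff(flaky_put, sleep=delays.append)
print(result, attempts["n"], len(delays))  # ok 3 2
```

Injecting `sleep` keeps the example fast to run; in production you would keep the default `time.sleep`. Full jitter spreads retries from many clients over time so they do not hit the service in synchronized bursts.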

Task Statement 1.2: Transform and process data.

In terms of knowledge, you should understand how to create ETL pipelines based on business requirements and how the volume, velocity, and variety of data, both structured and unstructured, affect processing. Familiarity with cloud computing and distributed computing is crucial, as is proficiency in using Apache Spark to process data in various scenarios. On the skills side, you should be able to optimize container usage for performance needs with services such as Amazon EKS and Amazon ECS. Connecting to data sources through Java Database Connectivity (JDBC) and Open Database Connectivity (ODBC), integrating data from multiple sources, and optimizing costs during data processing are vital skills. You should also have experience implementing data transformation services with tools such as Amazon EMR, AWS Glue, Lambda, and Amazon Redshift. Transforming data between formats, troubleshooting common transformation failures and performance issues, and creating data APIs that make data available to other systems through AWS services round out your data transformation and processing skill set.
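
Transforming data between formats often comes down to parsing one representation and serializing another. As a minimal sketch using only the Python standard library, the hypothetical `csv_to_jsonl` function below converts CSV text to JSON Lines and casts selected columns to numbers. The column names are made up; at scale you would typically run this kind of transformation with Apache Spark on AWS Glue or Amazon EMR, often writing a columnar format such as Parquet.

```python
import csv
import io
import json


def csv_to_jsonl(csv_text: str, numeric_columns: set[str]) -> str:
    """Convert CSV text with a header row into JSON Lines (one JSON object per line)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = []
    for row in reader:
        record = {}
        for key, value in row.items():
            if key in numeric_columns:
                # Empty numeric fields become null instead of failing the cast.
                record[key] = float(value) if value != "" else None
            else:
                record[key] = value
        lines.append(json.dumps(record))
    return "\n".join(lines)


sample = "order_id,region,amount\n1001,us-east-1,25.50\n1002,eu-west-1,\n"
output = csv_to_jsonl(sample, numeric_columns={"amount"})
print(output)
```

Turning empty numeric fields into `null` rather than raising is one way to handle a common transformation failure; a stricter pipeline might instead route such rows to a quarantine location for review.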

Task Statement 1.3: Orchestrate data pipelines.

A candidate knows how to integrate various AWS services to build ETL pipelines, understands event-driven architecture, and can configure AWS services for data pipelines based on schedules or dependencies. An understanding of serverless workflows is also essential. On the skills side, you should know how to use orchestration services such as AWS Lambda, Amazon EventBridge, Amazon Managed Workflows for Apache Airflow (Amazon MWAA), AWS Step Functions, and AWS Glue workflows to build efficient workflows for data ETL pipelines. You should be able to design data pipelines for performance, availability, scalability, resiliency, and fault tolerance. Implementing and maintaining serverless workflows is a key skill, along with using notification services such as Amazon SNS and Amazon SQS to send alerts so that orchestrated data workflows can be monitored and acted on effectively.
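
Dependency-based orchestration in tools such as Amazon MWAA or AWS Glue workflows rests on a directed acyclic graph (DAG) of tasks. The sketch below is not an Airflow, Step Functions, or Glue API; it only illustrates the idea with Python’s standard `graphlib` module (Python 3.9+), using hypothetical task names, by computing which tasks can run in parallel at each stage.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "join_orders_customers": {"clean_orders", "extract_customers"},
    "load_redshift": {"join_orders_customers"},
    "notify_sns": {"load_redshift"},
}


def execution_stages(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into stages; every task in a stage has all its dependencies satisfied."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks that could run in parallel now
        stages.append(ready)
        sorter.done(*ready)  # mark them finished so their dependents become ready
    return stages


stages = execution_stages(pipeline)
for number, stage in enumerate(stages, start=1):
    print(number, stage)
```

An orchestrator adds what this sketch leaves out: schedules, retries, timeouts, and alerts (for example, publishing to Amazon SNS) when a task fails.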

Task Statement 1.4: Apply programming concepts.

As a candidate, you should understand technical concepts such as continuous integration and continuous delivery (CI/CD), SQL queries for data transformation, and infrastructure as code for repeatable deployments. You should also be well-versed in distributed computing, data structures, algorithms, and SQL query optimization. Your skills should include optimizing code for data ingestion and transformation, configuring Lambda functions to meet performance needs, and running SQL queries to transform data. You should also know how to structure SQL queries to meet data pipeline requirements, use Git commands for repository actions, and package and deploy serverless data pipelines with the AWS Serverless Application Model (AWS SAM). Lastly, you should know how to use and mount storage volumes from Lambda functions.
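
Batching records is a common way to optimize ingestion code, because fewer, larger API calls reduce per-request overhead. For example, the Kinesis Data Streams `PutRecords` API accepts up to 500 records per request and also enforces a per-request size limit. The sketch below shows only the batching logic: `batch_records` is a hypothetical helper, the byte budget is an assumed default that you should check against current service quotas, record size is approximated by payload length, and the actual API call is omitted so the example runs without AWS credentials.

```python
from typing import Iterable, Iterator

MAX_RECORDS_PER_BATCH = 500  # PutRecords record-count limit per request
MAX_BYTES_PER_BATCH = 5 * 1024 * 1024  # assumed request-size budget; verify against current quotas


def batch_records(
    records: Iterable[bytes],
    max_records: int = MAX_RECORDS_PER_BATCH,
    max_bytes: int = MAX_BYTES_PER_BATCH,
) -> Iterator[list[bytes]]:
    """Yield batches that stay within a record-count limit and an approximate byte budget."""
    batch: list[bytes] = []
    batch_bytes = 0
    for record in records:
        size = len(record)
        # Start a new batch if adding this record would exceed either limit.
        if batch and (len(batch) >= max_records or batch_bytes + size > max_bytes):
            yield batch
            batch, batch_bytes = [], 0
        batch.append(record)
        batch_bytes += size
    if batch:
        yield batch


events = [f'{{"event_id": {i}}}'.encode() for i in range(1200)]
sizes = [len(batch) for batch in batch_records(events)]
print(sizes)  # [500, 500, 200]
```

Each yielded batch would then be sent in one request. `PutRecords` can partially fail, so production code would also check the per-record results and retry only the failed entries.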

DEA-C01 Exam Domain 2: Data Store Management

Task Statement 2.1: Choose a data store.

A candidate should know storage platforms and their characteristics, including storage services and configurations for specific performance demands. Familiarity with data storage formats such as .csv, .txt, and Parquet is crucial, along with the ability to align data storage with migration requirements and to choose the right storage solution for specific access patterns. Knowing how to manage locks to control concurrent access to data, especially in platforms such as Amazon Redshift and Amazon RDS, is also vital. On the skills side, you should have experience implementing storage services that meet specific cost and performance requirements and configuring them for the expected access patterns. You should also be able to apply storage services to diverse use cases, integrate migration tools such as AWS Transfer Family into data processing systems, and implement data migration or remote access methods such as Amazon Redshift federated queries, materialized views, and Redshift Spectrum.

Task Statement 2.2: Understand data cataloging systems.

A candidate should know how to create a data catalog and classify data based on specific requirements, and should understand the key components of metadata and data catalogs. On the skills side, you should know how to use data catalogs to consume data directly from its source and how to build and reference a data catalog with tools such as the AWS Glue Data Catalog and Apache Hive metastore. Expertise in discovering schemas and using AWS Glue crawlers to populate data catalogs is essential. You should also be skilled at synchronizing partitions with a data catalog and at creating new source or target connections for cataloging, for example, in AWS Glue.
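
Synchronizing partitions means making sure the catalog knows about every partition that exists in storage. The following is a minimal sketch, assuming Hive-style `key=value` prefixes in Amazon S3 object keys (the layout that AWS Glue crawlers and Athena recognize as partitions) and a hypothetical set of partitions already registered in the catalog; it finds partitions present in storage but missing from the catalog. In practice, you would rely on a crawler, Athena’s `MSCK REPAIR TABLE`, or the AWS Glue partition APIs to do the registration.

```python
from typing import Iterable, Optional


def parse_partition(object_key: str, partition_keys: tuple[str, ...]) -> Optional[tuple[str, ...]]:
    """Extract partition values from a Hive-style key like 'sales/year=2024/month=03/part-0.parquet'."""
    values = {}
    for segment in object_key.split("/"):
        if "=" in segment:
            name, _, value = segment.partition("=")
            values[name] = value
    if all(k in values for k in partition_keys):
        return tuple(values[k] for k in partition_keys)
    return None  # the key is not under a complete partition path


def missing_partitions(
    object_keys: Iterable[str],
    catalog_partitions: set[tuple[str, ...]],
    partition_keys: tuple[str, ...],
) -> list[tuple[str, ...]]:
    """Return partitions that exist in storage but are not yet registered in the catalog."""
    found = set()
    for key in object_keys:
        partition = parse_partition(key, partition_keys)
        if partition is not None:
            found.add(partition)
    return sorted(found - catalog_partitions)


keys = [
    "sales/year=2024/month=02/part-0000.parquet",
    "sales/year=2024/month=03/part-0000.parquet",
    "sales/year=2024/month=03/part-0001.parquet",
    "sales/_temporary/marker",
]
registered = {("2024", "02")}
print(missing_partitions(keys, registered, ("year", "month")))  # [('2024', '03')]
```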

Task Statement 2.3: Manage the lifecycle of data.

A candidate should know how to select storage solutions that meet hot and cold data requirements and how to optimize storage costs based on the data lifecycle. Understanding how to delete data to meet business and legal requirements, along with data retention policies and archiving strategies, is crucial. Expertise in protecting data with appropriate resiliency and availability is also essential. On the skills side, you should know how to perform load and unload operations to move data between Amazon S3 and Amazon Redshift. You should also be proficient at managing S3 Lifecycle policies to change the storage tier of S3 data, expiring data when it reaches a specific age by using S3 Lifecycle policies, and managing S3 versioning and DynamoDB TTL to oversee the complete lifecycle of data in AWS.
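
An S3 Lifecycle configuration expresses tiering and expiration rules as data. The sketch below builds one as a Python dictionary in the shape accepted by the `LifecycleConfiguration` parameter of boto3’s `put_bucket_lifecycle_configuration`; the rule ID, prefix, and day counts are hypothetical. It also computes a DynamoDB TTL value, which DynamoDB expects as a Unix epoch timestamp in seconds stored in a Number attribute; the `dynamodb_ttl` helper is an illustrative name.

```python
import json
import time
from typing import Optional

# Hypothetical rule: move raw logs to cheaper tiers over time, then delete them after one year.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "raw-logs-tiering",
            "Filter": {"Prefix": "raw/logs/"},
            "Status": "Enabled",
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},  # warm, infrequently accessed data
                {"Days": 90, "StorageClass": "GLACIER"},  # cold, archived data
            ],
            "Expiration": {"Days": 365},  # delete objects one year after creation
        }
    ]
}


def dynamodb_ttl(days_from_now: int, now: Optional[float] = None) -> int:
    """Return a TTL value (Unix epoch seconds) for an item that should expire in N days."""
    now = time.time() if now is None else now
    return int(now + days_from_now * 24 * 60 * 60)


print(json.dumps(lifecycle_configuration, indent=2))
print(dynamodb_ttl(7, now=0))  # 604800
```

With credentials configured, the dictionary could be passed as `s3.put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=lifecycle_configuration)`, and the TTL value would be written to whichever attribute you enable as the table’s TTL attribute.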

Task Statement 2.4: Design data models and schema evolution.

A candidate should know fundamental data modeling concepts and how to use data lineage to ensure data accuracy and trustworthiness. Familiarity with best practices for indexing, partitioning strategies, compression, and other data optimization techniques is crucial. You should also understand how to model structured, semi-structured, and unstructured data, and be proficient in schema evolution techniques. On the skills side, you should excel at designing schemas for Amazon Redshift, DynamoDB, and Lake Formation. Key skills include addressing changes to data characteristics and performing schema conversion with tools such as the AWS Schema Conversion Tool (AWS SCT) and AWS DMS Schema Conversion, as well as establishing data lineage with AWS tools such as Amazon SageMaker ML Lineage Tracking.
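
Schema evolution is often judged by whether a new schema can still work with existing data and readers. As a simplified sketch, with schemas modeled as hypothetical column-to-type dictionaries (this is not the AWS Glue Schema Registry API), the function below classifies a change: adding columns is treated as safe, while dropping a column or changing its type is flagged as breaking. Real compatibility rules vary by file format and tool.

```python
from dataclasses import dataclass, field


@dataclass
class SchemaDiff:
    added: list[str] = field(default_factory=list)
    removed: list[str] = field(default_factory=list)
    type_changed: list[str] = field(default_factory=list)

    @property
    def is_additive_only(self) -> bool:
        """True when the only change is new columns, which existing readers can ignore."""
        return not self.removed and not self.type_changed


def diff_schemas(old: dict[str, str], new: dict[str, str]) -> SchemaDiff:
    """Compare two column-to-type mappings and report what changed."""
    diff = SchemaDiff()
    diff.added = sorted(set(new) - set(old))
    diff.removed = sorted(set(old) - set(new))
    diff.type_changed = sorted(c for c in set(old) & set(new) if old[c] != new[c])
    return diff


v1 = {"order_id": "bigint", "amount": "double", "region": "string"}
v2 = {"order_id": "bigint", "amount": "double", "region": "string", "channel": "string"}
v3 = {"order_id": "string", "amount": "double"}

print(diff_schemas(v1, v2).is_additive_only)  # True
print(diff_schemas(v1, v3))
```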

DEA-C01 Exam Domain 3: Data Operations and Support

Task Statement 3.1: Automate data processing by using AWS services.

A candidate should know how to maintain and troubleshoot data processing for repeatable business outcomes, understand API calls for data processing, and identify which services accept scripting, such as Amazon EMR, Amazon Redshift, and AWS Glue. On the skills side, you should have an in-depth understanding of orchestrating data pipelines with tools such as Amazon MWAA and Step Functions, troubleshooting Amazon managed workflows, and using SDKs to access AWS features from code. You should also be proficient at using the features of Amazon EMR, Amazon Redshift, and AWS Glue to process data, consuming and maintaining data APIs, preparing data transformations with AWS Glue DataBrew, querying data with Amazon Athena, using Lambda to automate data processing, and managing events and schedulers with Amazon EventBridge.
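
A typical automation pattern is an AWS Lambda function triggered by Amazon S3 Event Notifications. The handler below is a minimal sketch: it only extracts the bucket name and object key from each event record (object keys arrive URL-encoded, hence `unquote_plus`) and returns them, while the actual processing step is a hypothetical placeholder. Because the handler is a plain function, it can be exercised locally with a trimmed, hypothetical sample event.

```python
import json
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Collect the S3 objects referenced by an S3 Event Notification."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event payloads are URL-encoded (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append({"bucket": bucket, "key": key})
        # Hypothetical placeholder: start a Glue job, run a transformation, and so on.
    return {"processed": objects}


# Trimmed sample event; real notifications contain many more fields.
sample_event = {
    "Records": [
        {
            "eventSource": "aws:s3",
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "example-landing-bucket"},
                "object": {"key": "incoming/2024/orders+batch%3D1.csv"},
            },
        }
    ]
}
print(json.dumps(lambda_handler(sample_event, None)))
```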

Task Statement 3.2: Analyze data by using AWS services.

A candidate should understand the tradeoffs between provisioned and serverless services in order to make informed choices based on specific requirements. A solid grasp of SQL, including SELECT statements with multiple qualifiers or JOIN clauses, is crucial for practical data analysis, as is knowing how to visualize data and when to apply cleansing techniques. On the skills side, you should excel at visualizing data with AWS services and tools such as AWS Glue DataBrew and Amazon QuickSight, and at verifying and cleaning data with tools such as Lambda, Athena, QuickSight, Jupyter Notebooks, and Amazon SageMaker Data Wrangler. You should also be proficient at using Athena to query data or create views, and at using Athena notebooks with Apache Spark to explore data.
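
A SELECT statement that combines a JOIN with several filter qualifiers can be practiced locally. The sketch below uses Python’s built-in `sqlite3` module as a stand-in for Athena or Amazon Redshift; the tables and data are hypothetical, and dialect details such as functions and data types differ between engines even though this core JOIN, WHERE, and GROUP BY syntax is shared.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER,
                         amount REAL, status TEXT);
    INSERT INTO customers VALUES (1, 'us-east-1'), (2, 'eu-west-1'), (3, 'us-east-1');
    INSERT INTO orders VALUES
        (10, 1, 120.0, 'SHIPPED'), (11, 1, 30.0, 'CANCELLED'),
        (12, 2, 75.0, 'SHIPPED'), (13, 3, 200.0, 'SHIPPED'), (14, 3, 15.0, 'SHIPPED');
    """
)

# JOIN plus multiple qualifiers in the WHERE clause, then aggregate per region.
query = """
    SELECT c.region, COUNT(*) AS order_count, SUM(o.amount) AS revenue
    FROM orders AS o
    JOIN customers AS c ON o.customer_id = c.customer_id
    WHERE o.status = 'SHIPPED'
      AND o.amount >= 20
    GROUP BY c.region
    ORDER BY revenue DESC
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('us-east-1', 2, 320.0), ('eu-west-1', 1, 75.0)]
conn.close()
```

The cancelled order and the 15.0 order are filtered out before aggregation, which is why `us-east-1` shows two orders rather than four.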

Task Statement 3.3: Maintain and monitor data pipelines.

A candidate should know how to log application data, apply best practices for performance tuning, and log access to AWS services with tools such as Amazon Macie, AWS CloudTrail, and Amazon CloudWatch. On the skills side, you should excel at extracting logs for audits, deploying logging and monitoring solutions that improve auditing and traceability, and using notifications to send real-time alerts during monitoring. Troubleshooting performance issues, tracking API calls with CloudTrail, and maintaining pipelines, especially in AWS Glue and Amazon EMR, are crucial skills. You should have experience using Amazon CloudWatch Logs to log application data, with a focus on configuration and automation. Finally, you should be able to analyze logs, including big data application logs, with AWS services such as Athena, Amazon EMR, Amazon OpenSearch Service, and CloudWatch Logs Insights.

Task Statement 3.4: Ensure data quality.

A candidate should know data sampling techniques and how to implement data skew mechanisms. Expertise in data validation (completeness, consistency, accuracy, and integrity) and data profiling techniques is also essential. On the skills side, you should excel at running data quality checks while processing data, such as checking for empty fields. You must also be proficient at defining data quality rules with tools such as AWS Glue DataBrew and at investigating data consistency to ensure the overall quality and reliability of the processed data.
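
A data quality rule such as “required fields must not be empty” can be expressed as a simple check that runs during processing. The sketch below is a plain-Python illustration with hypothetical rule and column names: it measures completeness per column and compares it with a threshold. Managed options such as AWS Glue DataBrew let you define comparable rules without custom code.

```python
from typing import Any

Record = dict[str, Any]


def completeness(records: list[Record], column: str) -> float:
    """Fraction of records where the column is present and non-empty."""
    if not records:
        return 1.0
    filled = sum(1 for r in records if r.get(column) not in (None, ""))
    return filled / len(records)


def run_quality_checks(
    records: list[Record], required: list[str], min_completeness: float = 1.0
) -> dict[str, bool]:
    """Return pass/fail per required column against a completeness threshold."""
    return {col: completeness(records, col) >= min_completeness for col in required}


batch = [
    {"order_id": "1001", "email": "a@example.com", "amount": 25.5},
    {"order_id": "1002", "email": "", "amount": 10.0},
    {"order_id": "1003", "email": "c@example.com", "amount": None},
    {"order_id": "1004", "email": "d@example.com", "amount": 7.25},
]

results = run_quality_checks(batch, required=["order_id", "email", "amount"], min_completeness=0.9)
print(results)  # {'order_id': True, 'email': False, 'amount': False}
```

A failing check would typically stop the pipeline step or route the batch to a quarantine location, and a notification could alert the team.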

DEA-C01 Exam Domain 4: Data Security and Governance

Task Statement 4.1: Apply authentication mechanisms.

A candidate knows VPC security networking concepts and understands the differences between managed and unmanaged services. Familiarity with authentication methods, including password-based, certificate-based, and role-based authentication, is crucial, as is understanding the differences between AWS managed policies and customer managed policies. On the skills side, you must know how to update VPC security groups and how to create and maintain IAM groups, roles, endpoints, and services. You should be adept at creating and rotating credentials for password management with tools such as AWS Secrets Manager. Setting up IAM roles for access, whether for Lambda functions, Amazon API Gateway, the AWS CLI, or AWS CloudFormation, should be part of your skill set, as should applying IAM policies to roles, endpoints, and services, including S3 Access Points and AWS PrivateLink.

Task Statement 4.2: Apply authorization mechanisms.

A candidate knows various authorization methods, including role-based, policy-based, tag-based, and attribute-based access control. Understanding the principle of least privilege as it applies to AWS security, role-based access control, and expected access patterns is crucial, as is knowing how to protect data from unauthorized access across services. On the skills side, you must be proficient at creating custom IAM policies when managed policies do not meet your needs, and at securely storing application and database credentials with tools such as Secrets Manager and AWS Systems Manager Parameter Store. You should also know how to grant database users, groups, and roles access and authority within a database, for example, in Amazon Redshift. Finally, you should be able to manage permissions through Lake Formation for services such as Amazon Redshift, Amazon EMR, Athena, and Amazon S3.
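
In practice, least privilege means granting only the specific actions and resources a workload needs. Below is a hypothetical customer managed policy, written as a Python dictionary, that allows read-only access to a single prefix of a single bucket (the bucket name and prefix are placeholders), along with a small self-check that flags wildcard actions. It is a sketch, not a complete policy review; IAM Access Analyzer policy validation is the managed way to check policies.

```python
import json

BUCKET = "example-curated-bucket"  # hypothetical bucket name

read_curated_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ListOnlyCuratedPrefix",
            "Effect": "Allow",
            "Action": "s3:ListBucket",
            "Resource": f"arn:aws:s3:::{BUCKET}",
            "Condition": {"StringLike": {"s3:prefix": ["curated/*"]}},
        },
        {
            "Sid": "ReadCuratedObjects",
            "Effect": "Allow",
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/curated/*",
        },
    ],
}


def wildcard_actions(policy: dict) -> list[str]:
    """List Allow actions that use wildcards, a common sign of over-broad permissions."""
    flagged = []
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]  # IAM allows a single action as a plain string
        flagged.extend(a for a in actions if "*" in a)
    return flagged


print(json.dumps(read_curated_policy, indent=2))
print(wildcard_actions(read_curated_policy))  # []
```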

Task Statement 4.3: Ensure data encryption and masking.

A candidate should know various data encryption options in AWS analytics services, including Amazon Redshift, Amazon EMR, and AWS Glue. Understanding the distinctions between client-side and server-side encryption is essential, as is a grasp of techniques for protecting sensitive data and implementing data anonymization, masking, and key salting. On the skills side, you should know how to apply data masking and anonymization in adherence to compliance laws or company policies. Utilizing encryption keys, particularly through AWS Key Management Service (AWS KMS), for encrypting or decrypting data is a vital skill. Configuring encryption across AWS account boundaries and enabling encryption in transit for data showcases your skills in safeguarding data through robust encryption and masking practices within the AWS analytics ecosystem.
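
Masking and salted hashing are two of the techniques named above. The sketch below masks all but the last four characters of a value and pseudonymizes an identifier with a keyed hash (HMAC-SHA256), where the secret key plays the role of the salt, so the same input always maps to the same token without revealing the original value. The function names and the hard-coded key are hypothetical; in practice the key would be retrieved from a secret store such as AWS Secrets Manager or protected with AWS KMS, never kept in code.

```python
import hashlib
import hmac


def mask(value: str, visible: int = 4, mask_char: str = "*") -> str:
    """Keep only the last `visible` characters; values that short are fully masked."""
    if len(value) <= visible:
        return mask_char * len(value)
    return mask_char * (len(value) - visible) + value[-visible:]


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Deterministic, non-reversible token: HMAC-SHA256 of the value under a secret key."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key for illustration only; load real keys from a secret store.
KEY = b"example-secret-key"

print(mask("4111111111111111"))  # ************1111
token_a = pseudonymize("jane.doe@example.com", KEY)
token_b = pseudonymize("jane.doe@example.com", KEY)
print(token_a == token_b, len(token_a))  # True 64
```

Deterministic tokens keep joins and counts working across datasets; the tradeoff is that anyone holding the key can recompute tokens for known values, so access to the key must be tightly controlled.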

Task Statement 4.4: Prepare logs for audit.

A candidate should know how to log application data and access to AWS services, with an emphasis on centralized AWS logs. On the skills side, you must be proficient at using CloudTrail to track API calls, using CloudWatch Logs to store application logs, and using AWS CloudTrail Lake for centralized logging queries. You should also be adept at analyzing logs with AWS services such as Athena, CloudWatch Logs Insights, and Amazon OpenSearch Service.
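
CloudTrail delivers events as JSON records with fields such as `eventSource`, `eventName`, `userIdentity`, and, for failed calls, `errorCode`. To show what an audit query does before you run one in Athena or CloudTrail Lake, the sketch below counts API calls per service and action and lists access-denied attempts. The records are a small, hypothetical, trimmed sample, and `summarize` is an illustrative helper.

```python
from collections import Counter

# Hypothetical, trimmed CloudTrail log file content (real records carry many more fields).
log_file = {
    "Records": [
        {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:role/etl-job"}},
        {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:role/etl-job"}},
        {"eventSource": "glue.amazonaws.com", "eventName": "StartJobRun",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:role/scheduler"}},
        {"eventSource": "s3.amazonaws.com", "eventName": "PutObject", "errorCode": "AccessDenied",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:user/analyst"}},
    ]
}


def summarize(records: list[dict]) -> tuple[Counter, list[tuple[str, str]]]:
    """Count calls per (service, action) and collect (caller ARN, action) for denied calls."""
    calls = Counter((r["eventSource"], r["eventName"]) for r in records)
    denied = [
        (r.get("userIdentity", {}).get("arn", "unknown"), r["eventName"])
        for r in records
        if r.get("errorCode") == "AccessDenied"
    ]
    return calls, denied


calls, denied = summarize(log_file["Records"])
print(calls.most_common(1))  # [(('s3.amazonaws.com', 'GetObject'), 2)]
print(denied)  # [('arn:aws:iam::111122223333:user/analyst', 'PutObject')]
```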

Task Statement 4.5: Understand data privacy and governance.

A candidate should know how to protect personally identifiable information (PII) and understand the implications of data sovereignty. On the skills side, you must be proficient at granting permissions for data sharing, for example, data sharing in Amazon Redshift. You should also be able to implement PII identification with tools such as Macie integrated with Lake Formation, and to implement data privacy strategies that prevent backups or replication of data to disallowed AWS Regions, a crucial aspect of governance. Finally, you should know how to manage configuration changes within an account, for example, by using AWS Config.

AWS Certified Data Engineer Associate DEA-C01 Exam Topics

The DEA-C01 Exam Guide provides a breakdown of the exam domains and a comprehensive list of important tools, technologies, and concepts covered in the DEA-C01 exam. Below is a non-exhaustive list of AWS services and features that should be studied for the DEA-C01 exam based on the information provided in the official exam guide. It’s important to remember that this list is subject to change, but it can still be useful in identifying the AWS services that require more attention.

In-scope AWS services and features

• Analytics: Amazon Athena

• Application Integration: Amazon AppFlow

• Cloud Financial Management: AWS Budgets

• Compute: AWS Batch

• Containers: Amazon Elastic Container Registry (Amazon ECR)

• Database: Amazon DocumentDB (with MongoDB compatibility)

• Developer Tools: AWS CLI

• Frontend Web and Mobile: Amazon API Gateway

• Machine Learning: Amazon SageMaker

• Management and Governance: AWS CloudFormation

• Migration and Transfer: AWS Application Discovery Service

• Networking and Content Delivery: Amazon CloudFront

• Security, Identity, and Compliance: AWS Identity and Access Management (IAM)

• Storage: AWS Backup

Out-of-scope AWS services and features

• Analytics: Amazon FinSpace

• Business Applications: Alexa for Business

• Compute: AWS App Runner

• Containers: Red Hat OpenShift Service on AWS (ROSA)

• Database: Amazon Timestream

• Developer Tools: AWS Fault Injection Simulator (AWS FIS)

• Frontend Web and Mobile: AWS Amplify

• Internet of Things (IoT): FreeRTOS

• Machine Learning: Amazon CodeWhisperer

• Management and Governance: AWS Activate

• Media Services: Amazon Elastic Transcoder

• Migration and Transfer: AWS Mainframe Modernization

• Storage: EC2 Image Builder

Validate Your DEA-C01 Knowledge

Once you have finished your review and feel confident in your knowledge, test yourself with practice exams. AWS offers an official practice exam on its AWS Skill Builder portal, and Tutorials Dojo offers a top-notch set of AWS Certified Data Engineer Associate practice tests. Each test contains unique questions that help you identify any important topics you may have missed before exam day.

Additional Resources:

- FREE AWS Digital Courses available in the Tutorials Dojo Portal (in collaboration with AWS)

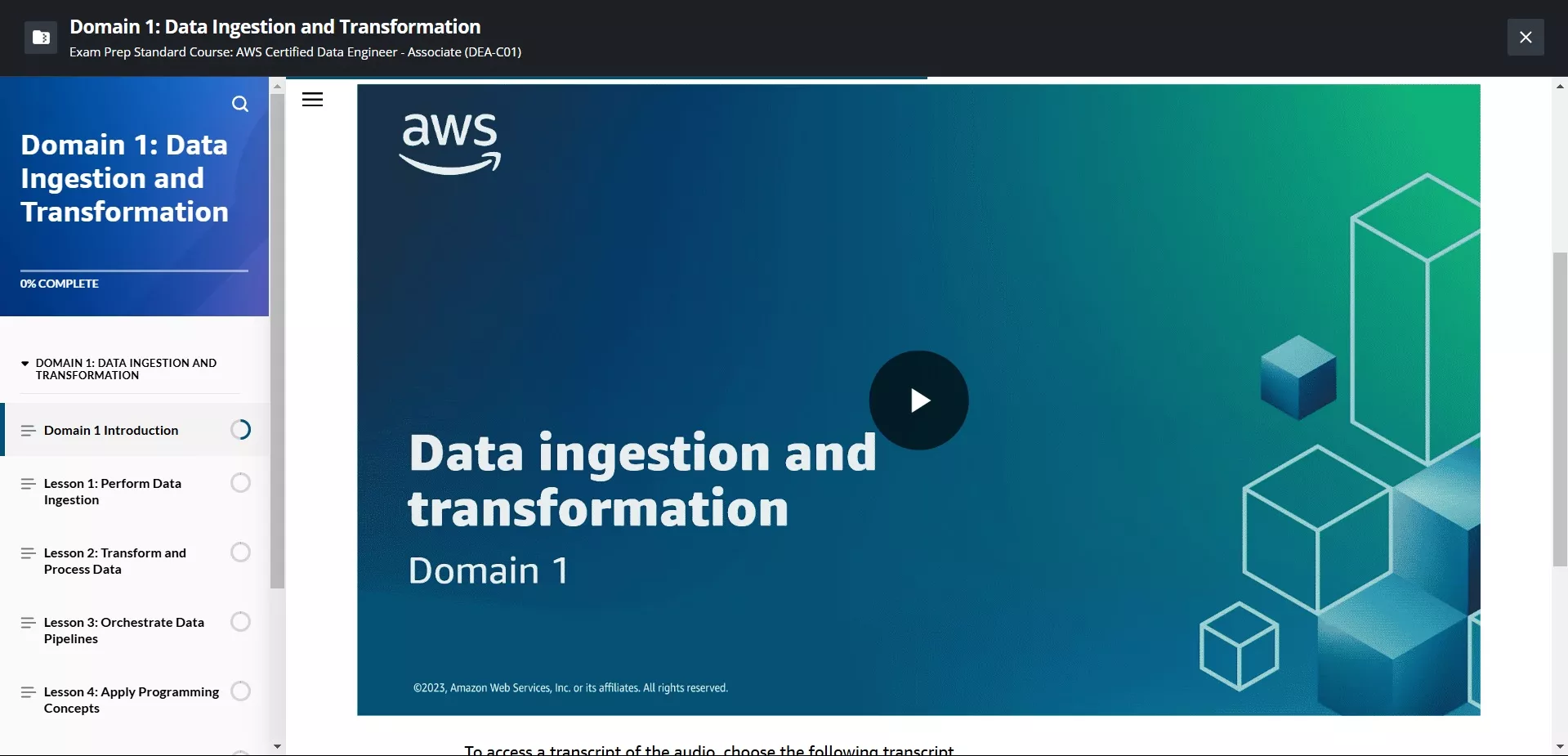

- Courses from the AWS Skill Builder site, which include:

  - Standard Exam Prep Plan – only free resources; and

  - Enhanced Exam Prep Plan – includes additional content for AWS Skill Builder subscribers, such as AWS Builder Labs, game-based learning, Official Pretests, and more exam-style questions.

Is taking the AWS Certified Data Engineer Associate DEA-C01 Beta Exam worth it?

The introduction of the AWS Certified Data Engineer – Associate (DEA-C01) exam marks a significant milestone: it is the first AWS certification to offer a beta exam since the AWS Certified Advanced Networking – Specialty exam in 2022. AWS uses beta exams to test and evaluate questions before the official release of a new or updated certification, which gives candidates a unique opportunity. Let’s discuss the key factors that determine whether the AWS Certified Data Engineer Associate DEA-C01 Beta Exam is worth taking.

Exam Experience

The AWS Certified Data Engineer – Associate (DEA-C01) exam is graded pass or fail. It consists of 65 multiple-choice or multiple-response questions and is scored on a scale of 100 to 1,000, with a minimum passing score of 720. Scaled scoring standardizes results across exam versions that may differ slightly in difficulty. Exam takers should expect to receive their results within 1 to 5 business days after completing the exam.

Learn about the AWS Certified Data Engineer Associate DEA-C01 Exam Topics

To excel in the AWS Certified Data Engineer Associate exam, it’s crucial to complement your theoretical understanding with practical skills. Here are some free AWS ATP courses that can significantly aid in your preparation:

- AWS Cloud Practitioner Essentials: Start your journey with this essential course that provides a solid foundation for understanding AWS services and principles.

- Data Analytics Fundamentals: Dive into the fundamentals of data analytics, a key aspect of data engineering, to strengthen your knowledge base.

- AWS Analytics Services Overview: Gain insights into the various analytics services offered by AWS, understanding their applications and use cases.

- Best of re:Invent Analytics 2022: Keep abreast of the latest developments in AWS analytics by exploring highlights from the analytics sessions at AWS re:Invent.

- A Day in the Life of a Data Engineer: Walk through a data engineer’s daily responsibilities and tasks, gaining practical insights into the role.

- Building Batch Data Analytics Solutions on AWS: Delve into classroom training provided by AWS, focusing on building batch data analytics solutions—an integral part of the exam topics.

By combining theoretical knowledge with hands-on experience from these courses, you’ll be well prepared to tackle the AWS Certified Data Engineer Associate exam. Practice regularly on the AWS platform to reinforce your understanding and build confidence for the certification journey. Good luck!