Last updated on July 9, 2025

Creating an AI Chatbot with LangChain for FREE (No API Keys, No Tokens)

The Big Question: Can You Build AI Without Breaking the Bank?

We asked ourselves a simple question: Can you build a chatbot without using any paid API at all?

Most tutorials on AI chatbots start the same way: “First, grab your OpenAI API key and add your credit card…” But what if you don’t have one? Or don’t want to use one?

Maybe you’re a student on a tight budget. Maybe you’re tired of hitting rate limits just when your chatbot gets interesting. Or maybe, just maybe, you want to build something that runs completely offline and respects your privacy.

In this guide, we’ll show you exactly how to do that using LangChain, React, and TypeScript, with zero cloud dependencies.

Understanding the Landscape – APIs, Tokens, and Why They Matter

Photo by cottonbro studio: https://www.pexels.com/photo/close-up-of-waiter-holding-tray-with-silver-cover-5371583/

What’s an API, Really?

Think of an API (Application Programming Interface) as a waiter in a restaurant. Here’s how it works:

- You (the app) place an order: “What’s the capital of France?”

- The waiter (API) takes your order to the kitchen

- The kitchen (AI model) prepares your answer

- The waiter brings back your piping hot result: “Paris!”

That’s exactly how OpenAI’s GPT API works. The AI model doesn’t run on your device. Instead, you send your query across the internet to OpenAI’s servers via their API, and they respond with an answer. The smarter the kitchen, the more it costs to use.
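To make the waiter analogy concrete, here is a minimal sketch of what "placing an order" with a hosted model can look like in Python. It only builds the HTTP request and never sends it. The endpoint and payload shape follow OpenAI's chat completions API; the model name and the key are placeholders chosen for illustration, not working values.

```python
import json
import urllib.request

# Placeholder values: a real key comes from a (billed) OpenAI account.
API_URL = "https://api.openai.com/v1/chat/completions"
API_KEY = "sk-your-key-here"


def build_order(question: str, model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Package a question as the HTTP request the 'waiter' carries to the 'kitchen'."""
    payload = {
        "model": model,  # which "kitchen" prepares the answer
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",  # no key, no service
        },
        method="POST",
    )


# Build the order but do not send it; sending would require a real key.
order = build_order("What's the capital of France?")
print(order.get_method(), order.full_url)
print(json.loads(order.data)["messages"][0]["content"])
```

Notice that the request cannot leave your machine in a useful form without the `Authorization` header. That header is the part a local model lets you drop.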

The Token Economy: Why Every Word Costs Money

Photo by Sharon Snider: https://www.pexels.com/photo/close-up-of-multicolored-puzzles-5142463/

Imagine your sentence is a puzzle. Each word (or part of a word) is a puzzle piece and the AI needs to put the whole puzzle together to understand or respond.

- Simple phrases like “Hello” might only be one or two pieces.

- Complex sentences like “The economic implications of decentralized AI are vast and nuanced” could be 12–15 pieces.

- The bigger the message (or response), the more pieces (a.k.a. tokens) the AI must handle.

Each of those puzzle pieces costs money when using APIs like OpenAI. The more tokens in, and the more tokens out, the higher the cost.

That’s why long prompts, detailed conversations, and memory-based bots can become expensive fast: with GPT-based APIs you’re billed by tokens (the pieces of the puzzle), not by the number of messages.

What exactly is a token? Think of tokens as text credits. A token can be a word or part of a word:

- “Hello” = 1 token

- “Artificial Intelligence is awesome” = ~5–6 tokens

- A full conversation? 500+ tokens easily

- Upload a document? Thousands of tokens

- Have a long chat with memory? Tens of thousands of tokens
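If you want a feel for these numbers without installing a tokenizer, a rough rule of thumb is about four tokens for every three English words. The sketch below applies that heuristic; `estimate_tokens` is a hypothetical helper, not a real tokenizer, and actual counts vary by model and by text.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate: about 4 tokens for every 3 English words.

    This is only a rule of thumb. Real tokenizers split text into
    sub-word pieces, so actual counts differ from model to model.
    """
    words = len(text.split())
    return round(words * 4 / 3)


for sample in ["Hello", "Artificial Intelligence is awesome"]:
    print(f"{sample!r}: ~{estimate_tokens(sample)} tokens")
```

For exact counts you would use the tokenizer that ships with the model you are calling.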

Here’s a real example: Let’s say you’re building a customer service chatbot. A typical conversation might look like this:

User: "Hi, I'm having trouble with my account login"

Bot: "I'd be happy to help you with your login issues. Can you tell me what specific error message you're seeing?"

User: "It says 'invalid credentials' but I'm sure my password is correct"

Bot: "I understand how frustrating that can be. Let's try a few troubleshooting steps..."

That simple exchange? About 50-80 tokens. Now multiply that by hundreds of users per day, and you’re looking at tens of thousands of tokens daily. At roughly $0.002 per 1K tokens (a representative rate; OpenAI pricing varies by model and changes over time), costs add up as conversations get longer and traffic grows.
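Here is the arithmetic as a small sketch. The numbers are illustrative only (80 tokens per chat, 500 chats a day, $0.002 per 1K tokens), and the second function uses a simplified model in which every request resends the whole conversation so far, which is how stateless chat APIs are typically used.

```python
def daily_cost(tokens_per_chat: int, chats_per_day: int, usd_per_1k: float) -> float:
    """Daily spend in USD when every chat is billed per token."""
    return tokens_per_chat * chats_per_day / 1000 * usd_per_1k


def billed_tokens_with_history(turn_tokens: list[int]) -> int:
    """Total tokens billed when each request resends the conversation so far."""
    total = 0
    history = 0
    for tokens in turn_tokens:
        history += tokens  # the conversation grows by this turn
        total += history   # and the full history is sent (and billed) again
    return total


# Illustrative numbers only, not a quote of anyone's current pricing.
print(f"${daily_cost(80, 500, 0.002):.2f} per day")

# Ten turns of 80 tokens each: 800 tokens of text, but far more billed.
print(billed_tokens_with_history([80] * 10), "tokens billed")
```

A single short exchange is cheap. The bill grows with traffic, and grows faster still once each turn carries the earlier turns along with it.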

The Hidden Costs of API-Dependent Chatbots

Beyond the obvious per-token costs, API-only chatbots come with hidden expenses:

Scaling Costs

- Development: $50/month for testing

- Small business: $200-500/month

- Enterprise: $2,000-10,000+/month

Infrastructure Dependencies

- Your app breaks when the API is down

- Latency issues affect user experience

- Internet connectivity is required everywhere

Privacy Trade-offs

- Every conversation passes through third-party servers

- Data retention policies you can’t control

- Compliance nightmares for sensitive industries

Reliability Concerns

- Rate limits that throttle your app during peak usage

- Service outages that leave your users stranded

- Pricing changes that can break your business model

Normally this is where you’d start reaching for your wallet, but not today!

The Game Changer – Enter LangChain!

What is LangChain, Really?

LangChain is an open-source framework designed to help developers build context-aware, tool-using, reasoning-capable AI applications. But here’s the revolutionary part: LangChain doesn’t force you to use cloud APIs.

Think of LangChain as LEGO blocks for AI apps. You get different types of blocks:

- Language Model blocks (the “brain”)

- Memory blocks (to remember conversations)

- Tool blocks (calculators, web search, file access)

- Logic blocks (decision-making and reasoning)

- Data blocks (document search, databases)

Whether your “brain” is GPT-4, a local model like Llama, or something running entirely on your computer, LangChain helps you snap all these pieces together into a working chatbot.

Here’s what makes LangChain special: you can use open-source, local models instead of cloud APIs. This means:

- No API key needed

- No per-token billing – Chat as much as you want

- No billing surprises – Completely free after initial setup

- Full privacy – Nothing leaves your machine

- Offline capability – Works without internet

- Complete control – Customize everything

Why Should You Use LangChain? (The Big Deal)

LangChain isn’t just about saving money; it’s about empowerment and flexibility. Here’s why it’s a game-changer for students and beginners, and frankly, anyone building AI applications.

Photo by Christina Morillo: https://www.pexels.com/photo/woman-using-macbook-1181288/

Simplified Development: LangChain abstracts away a lot of the complex boilerplate code involved in building LLM applications. Instead of figuring out how to connect different models, manage prompts, or handle memory from scratch, LangChain provides standardized interfaces and components. This means you can focus on what you want your AI to do, rather than how to make the underlying models work. It’s like having a pre-built toolbox for AI.

Modular and Flexible Architecture: Remember the LEGO blocks? That’s LangChain’s strength. Its modular design allows you to easily swap out components. Want to try a different local language model? Just change a few lines of code. Need to add a new data source? Plug in a different “data block.” This makes experimentation and iteration incredibly fast and easy.

Photo by Digital Buggu: https://www.pexels.com/photo/monitor-displaying-computer-application-374559/

Context Awareness and Memory: Building a chatbot that remembers previous turns in a conversation is crucial for a natural user experience. LangChain provides built-in memory management, so your AI can maintain context across interactions, leading to more coherent and helpful conversations.
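To see what "memory" means mechanically, here is a minimal plain-Python sketch. It is not LangChain's memory API; `ChatMemory` is a hypothetical class that keeps the last few exchanges and pastes them in front of the next message, which is the basic idea behind a conversation buffer.

```python
from collections import deque


class ChatMemory:
    """Keep the last `max_turns` exchanges and render them into the next prompt."""

    def __init__(self, max_turns: int = 3) -> None:
        # A deque with maxlen drops the oldest exchange once it is full.
        self.turns = deque(maxlen=max_turns)

    def add(self, user: str, bot: str) -> None:
        self.turns.append((user, bot))

    def build_prompt(self, new_message: str) -> str:
        lines = []
        for user, bot in self.turns:
            lines.append(f"User: {user}")
            lines.append(f"Bot: {bot}")
        lines.append(f"User: {new_message}")
        lines.append("Bot:")  # the model continues from here
        return "\n".join(lines)


memory = ChatMemory(max_turns=2)
memory.add("Hi, I can't log in.", "What error message do you see?")
print(memory.build_prompt("It says 'invalid credentials'."))
```

The window size is the trade-off: a larger `max_turns` gives the model more context but makes every prompt longer, which matters on modest local hardware.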

Agentic Capabilities: This is where LangChain truly shines for complex applications. LangChain allows you to build “agents” – AI systems that can reason, make decisions, and use tools to achieve a goal. Imagine a chatbot that can not only answer questions but also search the web, run calculations, or even execute code based on the user’s request. LangChain makes this kind of intelligent, multi-step workflow possible.
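The decide-then-act loop behind an agent can be sketched in a few lines of plain Python. This toy is not LangChain's agent API: the tool names are made up, and `choose_tool` is a hard-coded rule standing in for the reasoning step that a language model would perform in a real agent.

```python
import ast
import operator

# Arithmetic operators the calculator tool is allowed to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str) -> str:
    """Tool: evaluate simple arithmetic without calling eval()."""

    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")

    return str(walk(ast.parse(expression, mode="eval").body))


def word_count(text: str) -> str:
    """Tool: count the words in a piece of text."""
    return str(len(text.split()))


TOOLS = {"calculator": calculator, "word_count": word_count}


def choose_tool(request: str) -> str:
    """Stand-in for the model's reasoning step: pick a tool for the request."""
    return "calculator" if any(ch.isdigit() for ch in request) else "word_count"


def run_agent(request: str) -> str:
    tool_name = choose_tool(request)    # 1. decide which tool fits
    result = TOOLS[tool_name](request)  # 2. act by calling the tool
    return f"{tool_name} -> {result}"   # 3. report the outcome


print(run_agent("2 * (3 + 4)"))
print(run_agent("how many words are here"))
```

In a framework like LangChain, step 1 is delegated to the model itself, which is what makes multi-step workflows possible and also what makes them harder to predict.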

Community and Ecosystem: Despite being relatively new, LangChain has a rapidly growing and supportive community. This means abundant resources, tutorials, and examples available online. If you get stuck, chances are someone else has faced a similar problem, and the community is there to help.

Setting Up LangChain for Local Models (The Basics)

For a true “no API key, no tokens” experience, we’ll leverage tools that allow you to run powerful open-source language models directly on your machine. This usually involves:

- Python Installation: Make sure you have Python installed on your system. LangChain is primarily a Python library (though a JavaScript version exists too).

- Installing LangChain: Open your terminal or command prompt and run `pip install langchain langchain-community`. The `langchain-community` package contains many of the integrations for open-source and local models.

- Choosing a Local LLM Runner: To run a model locally, you’ll need a way to serve it. Popular options include:

  - Ollama: This is often the most user-friendly way for beginners. Ollama makes it super easy to download and run various open-source models (like Llama 2, Mistral, etc.) with a single command. You download the Ollama application, then pull models with a command like `ollama pull llama2`. Once a model is running, LangChain can easily connect to it.

  - Llama.cpp / GPT4All: These are more technical but highly efficient libraries for running quantized LLMs on your CPU. They involve downloading model files (often in GGUF format) and then using a Python wrapper (like `llama-cpp-python` or `gpt4all`) that LangChain can integrate with.

  - Hugging Face Transformers: For those with a stronger GPU, you can directly load models from Hugging Face’s vast library using their `transformers` library, which LangChain can then utilize via `HuggingFacePipeline`.

Example (Conceptual – using Ollama for simplicity):

First, ensure Ollama is installed and running, and you’ve pulled a model like Llama 2 (ollama pull llama2).

Then, in your Python code:

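    from langchain_community.llms import Ollama
    from langchain.prompts import PromptTemplate
    from langchain.chains import LLMChain

    # Initialize the local Ollama model.
    # Ensure Ollama is running and the 'llama2' model is pulled.
    llm = Ollama(model="llama2")

    # Define a simple prompt template with one placeholder, {topic}.
    prompt_template = PromptTemplate(
        input_variables=["topic"],
        template="Tell me a fun fact about {topic}.",
    )

    # Create a simple chain that fills the template and sends it to the model.
    # Note: newer LangChain releases deprecate LLMChain in favor of
    # `prompt_template | llm`, so check the version you have installed.
    chain = LLMChain(llm=llm, prompt=prompt_template)

    # Invoke the chain; LLMChain returns a dict whose 'text' key holds the reply.
    response = chain.invoke({"topic": "cats"})
    print(response['text'])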

This is just a basic example, but it shows how you can use LangChain to interact with a locally running model without any API keys.
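Because the model is just one block in the chain, swapping runners can be a small change. The sketch below is one way to organize that, under a few assumptions: the import paths match the `langchain-community` releases that ship these classes (they have moved between versions, so check yours), each runner's own package is installed, and every model name and file path is a placeholder. Imports happen inside the functions so that you only need the package for the runner you pick.

```python
from typing import Any, Callable

# NOTE: every model name and file path below is a placeholder.


def make_ollama() -> Any:
    from langchain_community.llms import Ollama
    return Ollama(model="llama2")


def make_llama_cpp() -> Any:
    from langchain_community.llms import LlamaCpp
    return LlamaCpp(model_path="models/your-model.gguf")


def make_gpt4all() -> Any:
    from langchain_community.llms import GPT4All
    return GPT4All(model="models/your-model.gguf")


def make_hf_pipeline() -> Any:
    from langchain_community.llms import HuggingFacePipeline
    return HuggingFacePipeline.from_model_id(
        model_id="gpt2", task="text-generation"
    )


# One name per runner; adding a runner means adding one entry here.
RUNNERS: dict[str, Callable[[], Any]] = {
    "ollama": make_ollama,
    "llama_cpp": make_llama_cpp,
    "gpt4all": make_gpt4all,
    "huggingface": make_hf_pipeline,
}


def build_chain(runner: str) -> Any:
    """Build the same prompt -> model -> text pipeline for any runner."""
    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.prompts import PromptTemplate

    prompt = PromptTemplate.from_template("Tell me a fun fact about {topic}.")
    # The pipe operator composes the steps; only the middle one changes.
    return prompt | RUNNERS[runner]() | StrOutputParser()
```

With Ollama running and the model pulled, `build_chain("ollama").invoke({"topic": "cats"})` should return the reply as a plain string. Changing the argument to `"llama_cpp"` leaves the rest of your code untouched.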

Limitations of LangChain

While LangChain is a powerful and versatile framework, it’s important to be aware of its limitations, especially for students and beginners.

Photo by Tim Gouw: https://www.pexels.com/photo/man-in-white-shirt-using-macbook-pro-52608/

Complexity and Abstraction Overload: For very simple tasks, LangChain might feel like overkill. Its modularity and layers of abstraction, while powerful, can sometimes lead to a steeper learning curve. Understanding how all the “LEGO blocks” fit together and interact can be challenging initially, especially when debugging.

Rapid Evolution and Breaking Changes: LangChain is under active and rapid development. This means frequent updates, which can sometimes introduce breaking changes or deprecate certain functionalities. While this indicates progress, it can be frustrating for developers who need to constantly adapt their code.

Performance Overhead: While LangChain helps orchestrate LLMs, it introduces its own layer of abstraction. In some highly performance-critical applications, this abstraction might add a slight overhead compared to directly interacting with the LLM API or model. When running local models, your hardware resources (CPU, RAM, GPU) will be the primary bottleneck, and complex LangChain chains can exacerbate this.

Debugging Challenges: When you chain multiple components together, tracing errors can become difficult. If something goes wrong in a complex agentic workflow, pinpointing the exact component causing the issue can take time and effort.
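One low-tech way to ease this is to give every step a name and log what it produces, so a failure points at a specific step. The sketch below shows the idea in plain Python; `run_traced` and the three-step pipeline are hypothetical, and the "model" is a stand-in function rather than a real LLM call.

```python
from typing import Any, Callable

Step = tuple[str, Callable[[Any], Any]]


def run_traced(steps: list[Step], value: Any) -> Any:
    """Run named steps in order, printing each result and naming the step that fails."""
    for name, step in steps:
        try:
            value = step(value)
        except Exception as exc:
            # Re-raise with the step name so the culprit is obvious.
            raise RuntimeError(f"step {name!r} failed: {exc}") from exc
        print(f"[{name}] -> {value!r}")
    return value


# Hypothetical pipeline with a stand-in for the model call.
steps: list[Step] = [
    ("build_prompt", lambda topic: f"Tell me a fun fact about {topic}."),
    ("fake_model", lambda prompt: {"text": f"(model reply to: {prompt})"}),
    ("extract_text", lambda reply: reply["text"]),
]

print(run_traced(steps, "cats"))
```

The same habit carries over to real chains: inspect the intermediate values, not just the final answer.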

Photo by Markus Spiske: https://www.pexels.com/photo/black-laptop-computer-turned-on-showing-computer-codes-177598/

Dependency Bloat (for some integrations): While we’re focusing on local, free models, LangChain’s strength lies in its vast number of integrations. If you start adding various tools, databases, and external services, your project’s dependencies can grow significantly, potentially increasing project size and complexity.

Not a Replacement for Core ML Skills: LangChain simplifies building AI applications, but it doesn’t replace the need for understanding fundamental AI/ML concepts. To truly build effective and robust applications, a grasp of prompt engineering, model limitations, and data handling is still essential.

Start Building Your Own ChatBot Locally!

The fun starts when you bring things to life! The good news is you can build your own, and it’s easier than you might think.

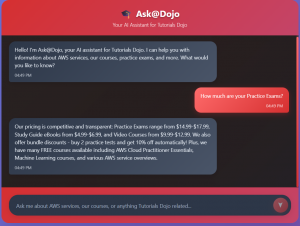

Ready to dive in and get your hands dirty? You can kickstart your journey by pulling the code directly from my GitHub repository: https://github.com/asiguiang/ChatbotUsingLangchain

This isn’t just a pre-built solution; it’s your starting block! Feel free to clone the repo, play around with the code, and truly make it your own. Want to experiment with a different local model? Go for it! Curious about adding new tools or memory types? Dive in and customize to your heart’s content. This hands-on experience is the best way to truly grasp LangChain’s power and flexibility. Don’t be afraid to break things (you can always git clone again!)

The core dataset used in this example is also available in the repository: https://github.com/asiguiang/ChatbotUsingLangchain/blob/main/public/tutorials_dojo_shop_normalized.txt. Taking a look at this tutorials_dojo_shop_normalized.txt file will give you insights into how real-world data is structured for these types of applications, helping you understand how your chatbot “learns” and retrieves information. You can even try swapping it out with your own text files to create a chatbot on a completely different topic!
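To get an intuition for how a chatbot can "retrieve" from a text file, here is a deliberately simple sketch. It is not the code from the repository: it splits text into paragraphs, picks the one sharing the most words with the question, and places it in the prompt. The sample text is made up; in practice you would read your own `.txt` file instead.

```python
import re


def split_into_chunks(text: str) -> list[str]:
    """Split a document into paragraphs separated by blank lines."""
    return [chunk.strip() for chunk in re.split(r"\n\s*\n", text) if chunk.strip()]


def words(text: str) -> set[str]:
    """Lowercased set of words, used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: list[str], top_k: int = 1) -> list[str]:
    """Return the chunks that share the most words with the question."""
    query = words(question)
    ranked = sorted(chunks, key=lambda c: len(query & words(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Put the best-matching chunk in front of the question for the model."""
    context = "\n".join(retrieve(question, chunks))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Made-up sample text standing in for your own data file.
sample = """Practice exams are sold as one-year subscriptions.

Refund requests are handled by email within seven days.

Video courses include downloadable slides."""

chunks = split_into_chunks(sample)
print(build_prompt("How do refund requests work?", chunks))
```

Real projects usually replace the word-overlap score with embeddings and a vector store, but the shape stays the same: find the relevant text first, then hand it to the model along with the question.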

Feel free to reach out to me on my socials if you get a little lost in the process.

Final Thoughts?

LangChain represents more than just a cost-saving measure — it’s a fundamental shift toward democratized AI. By running models locally, you’re not just saving money; you’re gaining freedom, control, and the ability to build AI tools that truly serve your needs.

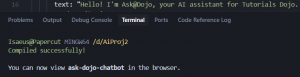

Ask@Dojo-Local Prototype Chatbot

The landscape is evolving rapidly. Local models are getting better, hardware is getting cheaper, and frameworks like LangChain are making it easier than ever to build sophisticated AI applications without depending on cloud APIs.

Whether you’re a student building your first chatbot, a business looking to cut costs, or a developer who values privacy and control, LangChain offers a compelling alternative to the API-dependent world.

The future of AI isn’t just about having the smartest models — it’s about having models that work for you, on your terms, without compromise.

Start building today and join the local-first AI revolution.