Every day, billions of emails, articles, messages, and other texts are created online. Making sense of all this unstructured data is one of the toughest challenges in modern AI. Document embeddings are a fundamental tool for addressing this problem. They are dense numerical vectors that transform words, sentences, or entire documents into meaningful points in a high-dimensional space, capturing the meaning and context of the original text. Because of this, machine learning models can measure similarity between texts and perform tasks such as topic classification, semantic search, and recommendation.

What are Document Embeddings?

Document embeddings convert text into numerical representations, enabling computers to understand and compare the meanings of texts. Earlier methods such as Bag-of-Words only counted how often each word appeared, without capturing what the words meant. Embeddings improve on this by representing each document as a list of numbers (a vector) that encodes its meaning and context. Think of each document as a point on an invisible map, where similar topics cluster together: “Solar energy” and “photovoltaics” sit near each other, while “sports” and “finance” are far apart. This makes it much easier for computers to measure how different texts relate to one another and to analyze their content in depth.

Key properties

Document embeddings derive their strength from representing meaning through geometry. Each document becomes a point in a multi-dimensional space, and the distance between two points reflects how similar the documents are: texts that discuss the same idea or share a similar tone end up close together. Closeness is often measured with cosine similarity, which measures how closely the directions of two vectors align (the cosine of the angle between them). Because meaning is encoded in this arrangement, embeddings can even capture deeper patterns, such as analogies and other relationships between concepts.
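As a concrete illustration, cosine similarity is the dot product of two vectors divided by the product of their lengths, so it ranges from -1 (opposite directions) through 0 (orthogonal) to 1 (same direction). The plain-Python sketch below assumes toy three-dimensional vectors with hand-picked, hypothetical values; real document embeddings come from a trained model and usually have hundreds of dimensions.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)

# Hypothetical toy "embeddings" for illustration only (not produced by a real model).
solar_energy = [0.9, 0.1, 0.0]
photovoltaics = [0.8, 0.2, 0.1]
sports = [0.0, 0.2, 0.9]

print(round(cosine_similarity(solar_energy, photovoltaics), 3))
print(round(cosine_similarity(solar_energy, sports), 3))
```

With these toy values, “solar energy” and “photovoltaics” score close to 1, while “solar energy” and “sports” score close to 0. Because only the direction matters, scaling a vector up or down does not change its similarity to anything else.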
The “Black Box” Analogy

Even though document embeddings perform very well, they are often described as a “black box” because it is hard to interpret how they represent meaning. Each document is converted into a long list of numbers (often hundreds). Unlike in older methods such as Bag-of-Words, no individual number has a clear meaning: no position corresponds to a word count or a label. Instead, every number is part of a pattern that only makes sense in combination with all the others. What matters is how the whole vector relates to other vectors in the space, not what any single number means on its own.

Methods for Creating Embeddings

Early Word Embeddings

Early embeddings focused on individual words. Methods such as Word2Vec and GloVe learn word meanings from the words that appear near them, assigning each word a single fixed vector. To represent a whole document, these word vectors were usually averaged together. This approach worked reasonably well, but it had a significant limitation: it ignored context. For example, the word “bank” receives the same vector in “river bank” and “bank account,” even though the two uses refer to very different things.

Contextual Revolution

The modern standard is set by Transformer-based architectures such as BERT (Bidirectional Encoder Representations from Transformers) and its successors. These models generate contextualized embeddings using self-attention, so the vector for the word “bank” changes depending on the other words in the sentence. For document embedding, the transformer processes the entire text and produces a single vector that summarizes it, often the output vector of the special [CLS] (classification) token. These deep, bidirectional models substantially outperform static word embeddings on many complex downstream tasks and have fundamentally changed how natural language is encoded.

Machine Learning Applications

Semantic Search and Information Retrieval

One of the most direct applications of document embeddings is semantic search. Traditional search engines rely on keyword matching; embeddings instead let a search engine match documents on meaning.
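At its simplest, embedding-based retrieval is a nearest-neighbor search: embed the query, score every document by cosine similarity to it, and return the best-scoring ones. The following is a minimal brute-force sketch under stated assumptions: the document and query vectors are hypothetical toy values rather than outputs of a real embedding model, and `semantic_search` is an illustrative helper, not a library API.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def semantic_search(
    query_vec: list[float], doc_vecs: dict[str, list[float]], top_k: int = 2
) -> list[tuple[str, float]]:
    """Hypothetical helper: rank all documents by cosine similarity to the query."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # most similar first
    return scored[:top_k]

# Hypothetical toy embeddings; a real system would obtain these from an embedding model.
documents = {
    "Installing rooftop photovoltaic panels": [0.85, 0.15, 0.05],
    "Quarterly earnings beat analyst forecasts": [0.05, 0.90, 0.10],
    "Local team wins the championship final": [0.05, 0.10, 0.95],
}
# Toy embedding for the query "how to power my house with sunlight":
# it shares no keywords with the first title but points in a similar direction.
query = [0.90, 0.10, 0.05]

for title, score in semantic_search(query, documents):
    print(f"{score:.3f}  {title}")
```

The photovoltaics title ranks first even though it shares no keywords with the query, which is exactly the case keyword matching misses. Scoring every document is fine for a small collection; large systems typically replace the linear scan with a vector database or an approximate nearest-neighbor index.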
When a user enters a query, the system converts it into a vector (a query embedding). It then finds the documents whose vectors are closest to it in the embedding space, retrieving results that are relevant in meaning even when they do not share the exact keywords. This approach has made search engines, customer support bots, and internal knowledge bases considerably more accurate and context-aware, because a question can be matched to an answer that is phrased differently.

Text Classification and Categorization

In text classification, a model assigns a label or category to a document (e.g., spam/not-spam, a positive/negative review, or “Finance” versus “Sports” for a news article). Once each document is represented as a dense numerical vector, a simple classifier such as logistic regression or a small neural network can be trained on those vectors. Because the embedding space already tends to organize documents by topic and sentiment, the classifier’s task becomes much simpler, which helps achieve high accuracy in automatic content moderation, sentiment analysis, and general topic categorization.

Content Recommendation Systems

Document embeddings form the backbone of many content-based recommendation systems. The system generates an embedding for each piece of content (such as a movie script, article, or product description) and an embedding for the user’s profile, derived from the documents the user has previously liked, and then calculates the similarity between the two vectors. Content that is semantically close to the user’s past preferences is ranked higher. This lets platforms deliver highly personalized feeds without relying solely on user-to-user signals such as shared purchase histories.

Conclusion

Document embeddings form the bridge between human language and machine understanding. From static word models like Word2Vec to contextual transformers like BERT, embeddings have reshaped how AI reads and reasons with text.
As vector databases and retrieval-augmented models evolve, this foundation will only grow more important, bringing AI closer to truly understanding meaning.

Next Steps

To dive deeper into this topic, here are a few recommended areas to explore next:

Key properties

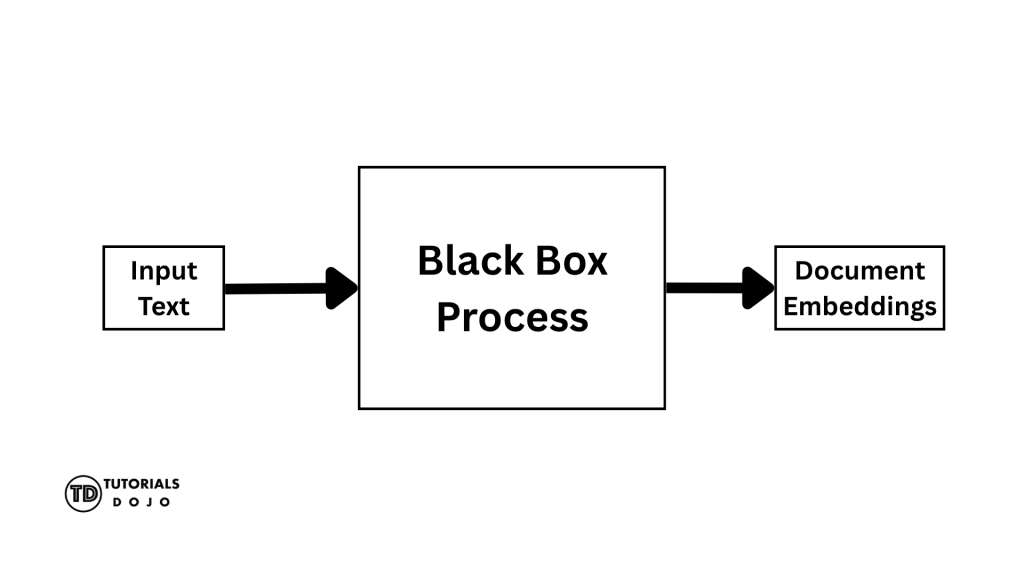

The “Black Box” Analogy

Methods for Creating Embeddings

Early Word Embeddings

Document-Specific Extensions

Contextual Revolution

Machine Learning Applications

Semantic Search and Information Retrieval

Text Classification and Categorization

Content Recommendation Systems

Conclusion

Next Steps

References