Last updated on February 12, 2025

This certification is the pinnacle of your DevOps career in AWS. The AWS Certified DevOps Engineer Professional (or AWS DevOps Pro) is the advanced-level counterpart of both the AWS SysOps Administrator Associate and AWS Developer Associate certifications. This is similar to how the AWS Solutions Architect Professional certification is a more advanced version of the AWS Solutions Architect Associate.

Generally, AWS recommends that you first take (and pass) both the AWS SysOps Administrator Associate and AWS Developer Associate certification exams before taking on this certification. Previously, you were required to hold the associate-level certifications before you were allowed to go for the professional level. In October 2018, AWS removed this requirement to give candidates a more flexible path through the certifications.

DOP-C02 Study Materials

The FREE AWS Exam Readiness course, official AWS sample questions, whitepapers, FAQs, AWS documentation, re:Invent videos, forums, labs, AWS cheat sheets, practice tests, and personal experience are what you will need to pass the exam. Since the DevOps Pro is one of the most difficult AWS certification exams out there, you have to prepare yourself with every study material you can get your hands on. If you need a review of the fundamentals of AWS DevOps, check out our review guides for the AWS SysOps Administrator Associate and AWS Developer Associate certification exams. Also, visit this AWS exam blueprint to learn more details about your certification exam.

For virtual classes, you can attend the DevOps Engineering on AWS and Systems Operations on AWS classes since they will teach you concepts and practices that are expected to be in your exam.

For whitepapers, focus on the following:

- Running Containerized Microservices on AWS

- Implementing Microservices on AWS

- Infrastructure as Code

- Introduction to DevOps

- Practicing Continuous Integration and Continuous Delivery on AWS

- Blue/Green Deployments on AWS

- Development and Test on AWS

- Disaster Recovery of Workloads on AWS: Recovery in the Cloud

- AWS Multi-Region Fundamentals

Almost all online training you need can be found on the AWS web page. One digital course that you should check out is the Exam Readiness: AWS Certified DevOps Engineer – Professional course. This digital course contains lectures on the different domains of your exam, and they also provide a short quiz right after each lecture to validate what you have just learned.

Lastly, do not forget to study the AWS CLI, SDKs, and APIs. Since the DevOps Pro is also the advanced counterpart of the Developer Associate certification, you need to have knowledge of programming and scripting in AWS. Go through the AWS documentation to review the syntax of CloudFormation templates, Serverless Application Model templates, CodeBuild buildspec files, CodeDeploy appspec files, and IAM policies.
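To get a feel for the template syntax mentioned above, it can help to assemble a tiny CloudFormation template by hand. The sketch below builds one as a Python dictionary and prints it as JSON. The logical ID `ArtifactBucket` and the helper name `build_template` are illustrative assumptions, not names taken from any AWS sample.

```python
import json


def build_template(bucket_logical_id="ArtifactBucket"):
    """Return a minimal CloudFormation template with one versioned S3 bucket.

    `bucket_logical_id` is a hypothetical logical ID chosen for this sketch.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Minimal practice template (illustrative only)",
        "Resources": {
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Keep every object version so older artifacts stay retrievable.
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            }
        },
        "Outputs": {
            # Ref on an S3 bucket resolves to the bucket name.
            "BucketName": {"Value": {"Ref": bucket_logical_id}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_template(), indent=2))
```

Writing out the top-level sections (`Resources`, `Outputs`, and so on) yourself is a quick way to check that you remember where each piece belongs before you face template snippets in the exam.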

AWS Services to Focus On for the DOP-C02 Exam

Since this exam is a professional level one, you should already have a deep understanding of the AWS services listed under our SysOps Administrator Associate and Developer Associate review guides. In addition, you should familiarize yourself with the following services since they commonly come up in the DevOps Pro exam:

- AWS CloudFormation

- AWS Lambda

- Amazon EventBridge

- Amazon CloudWatch Alarms

- AWS CodePipeline

- AWS CodeDeploy

- AWS CodeBuild

- AWS CodeCommit

- AWS Config

- AWS Systems Manager

- Amazon ECS

- AWS Elastic Beanstalk

- AWS CloudTrail

- AWS OpsWorks

- AWS Trusted Advisor

The FAQs provide a good summary of each service; however, the AWS documentation contains more detailed information that you’ll need to study. These details will be the deciding factor in telling the correct choice from the incorrect ones in your exam. To supplement your review of the services, we recommend that you take a look at Tutorials Dojo’s AWS Cheat Sheets. Their contents are well-written and straight to the point, which will help reduce the time spent going through FAQs and documentation.

Common Exam Scenarios for DOP-C02

Software Development and Lifecycle (SDLC) Automation

| Scenario | Solution |
|---|---|
| Automatically detect and prevent hardcoded secrets within AWS CodeCommit repositories. | Link the CodeCommit repositories to Amazon CodeGuru Reviewer to detect secrets in source code or configuration files, such as passwords, API keys, SSH keys, and access tokens. |
| An Elastic Beanstalk application must not have any downtime during deployment and requires an easy rollback to the previous version if an issue occurs. | Set up a Blue/Green deployment: deploy the new version on a separate environment, then swap the environment URLs on Elastic Beanstalk. |
| A new version of an AWS Lambda application is ready to be deployed and the deployment should not cause any downtime. A quick rollback to the previous Lambda version must be available. | Publish a new version of the Lambda function. After testing, use the production Lambda Alias to point to this new version. |
| In an AWS Lambda application deployment, only 10% of the incoming traffic should be routed to the new version to verify the changes before eventually allowing all production traffic. | Set up a Canary deployment for AWS Lambda. Create a Lambda Alias pointed to the new Version. Set the Weighted Alias value for this Alias to 10%. |
| An application is hosted on Amazon EC2 instances behind an Application Load Balancer. You must provide a safe way to upgrade the version on Production and allow easy rollback to the previous version. | Launch the application in Amazon EC2 that runs the new version with an Application Load Balancer (ALB) in front. Use Route 53 to change the ALB A-record Alias to the new ALB URL. Roll back by changing the A-record Alias to the old ALB. |
| An AWS OpsWorks application needs to safely deploy its new version on the production environment. You are tasked to prepare a rollback process in case of unexpected behavior. | Clone the OpsWorks Stack. Test it with the new URL of the cloned environment. Update the Route 53 record to point to the new version. |
| A development team needs full access to AWS CodeCommit but they should not be able to create/delete repositories. | Assign the developers with the |
| During the deployment, you need to run custom actions before deploying the new version of the application using AWS CodeDeploy. | Add lifecycle hook action |
| You need to run custom verification actions after the new version is deployed using AWS CodeDeploy. | Add lifecycle hook action |
| You need to set up AWS CodeBuild to automatically run after a pull request has been successfully merged using AWS CodeCommit. | Create an Amazon EventBridge rule that detects pull requests, with its target set to trigger the CodeBuild project. Use AWS Lambda to update the pull request with the result of the project build. |
| You need to use AWS CodeBuild to create an artifact and automatically deploy the new application version. | Set CodeBuild to save the artifact to an S3 bucket. Use CodePipeline to deploy using CodeDeploy and set the build artifact from the CodeBuild output. |
| You need to upload the AWS CodeBuild artifact to Amazon S3. | The S3 bucket needs to have versioning and encryption enabled. |
| You need to review AWS CodeBuild logs and have an alarm notification for build results on Slack. | Send AWS CodeBuild logs to a CloudWatch Log group. Create an Amazon EventBridge rule to detect the result of your build and target a Lambda function to send results to the Slack channel (or SNS notification). |
| Need to get a Slack notification for the status of the application deployments on AWS CodeDeploy. | Create an Amazon EventBridge rule to detect the result of the CodeDeploy job and target a notification to Amazon SNS or a Lambda function to send results to the Slack channel. |
| Need to run an AWS CodePipeline every day for updating the development progress status. | Create an Amazon EventBridge rule to run on schedule every day and set a target to the AWS CodePipeline ARN. |
| Automate deployment of a Lambda function and test for only 10% of traffic for 10 minutes before allowing 100% traffic flow. | Use CodeDeploy and select deployment configuration |
| Deployment of an Elastic Beanstalk application with absolutely no downtime. The solution must maintain full compute capacity during deployment to avoid service degradation. | Choose the “Rolling with additional batch” deployment policy in Elastic Beanstalk. |
| Deployment of an Elastic Beanstalk application where the new version must not be mixed with the current version. | Choose the “Immutable deployments” deployment policy in Elastic Beanstalk. |
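The Lambda canary scenario above comes down to an alias that keeps one version as its primary target while sending a fraction of invocations to another. Below is a minimal sketch of the request parameters for the Lambda `UpdateAlias` API, which accepts a `RoutingConfig` with `AdditionalVersionWeights`. The helper names, the function name `checkout-service`, and the alias `prod` are hypothetical and only serve as illustration.

```python
def canary_alias_params(function_name, alias, stable_version, new_version,
                        new_version_weight=0.10):
    """Build keyword arguments for the Lambda UpdateAlias API.

    The alias keeps `stable_version` as its primary version and routes
    `new_version_weight` (a fraction between 0 and 1) of invocations
    to `new_version`.
    """
    if not 0.0 <= new_version_weight <= 1.0:
        raise ValueError("new_version_weight must be between 0 and 1")
    return {
        "FunctionName": function_name,
        "Name": alias,
        "FunctionVersion": stable_version,
        "RoutingConfig": {
            "AdditionalVersionWeights": {new_version: new_version_weight}
        },
    }


def full_cutover_params(function_name, alias, new_version):
    """After verification, point the alias entirely at the new version."""
    return {
        "FunctionName": function_name,
        "Name": alias,
        "FunctionVersion": new_version,
        # An empty mapping clears any weighted routing on the alias.
        "RoutingConfig": {"AdditionalVersionWeights": {}},
    }


if __name__ == "__main__":
    # Hypothetical function and alias names, used only for illustration.
    print(canary_alias_params("checkout-service", "prod", "1", "2"))
    print(full_cutover_params("checkout-service", "prod", "2"))
```

With boto3 installed and credentials configured, these dictionaries could be passed as `boto3.client("lambda").update_alias(**params)`. Rolling back is then a matter of clearing the weights while leaving the alias on the stable version.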

| Configuration Management and Infrastructure-as-Code | |

|

The resources on the parent CloudFormation stack need to be referenced by other nested CloudFormation stacks |

Use |

|

On which part of the CloudFormation template should you define the artifact zip file on the S3 bucket? |

The artifact file is defined on the |

|

Need to define the AWS Lambda function inline in the CloudFormation template |

On the |

|

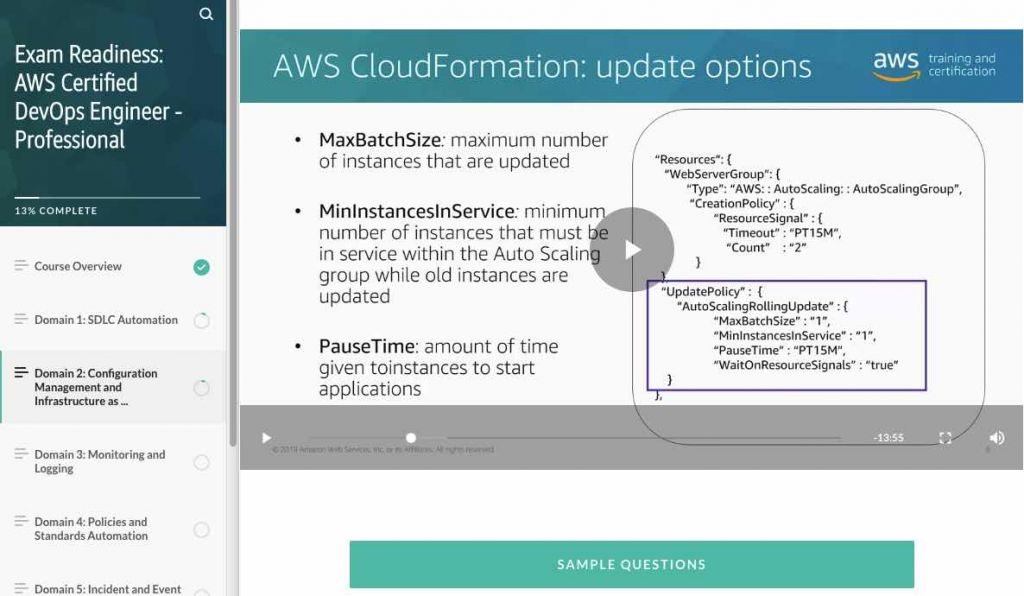

Use CloudFormation to update Auto Scaling Group and only terminate the old instances when the newly launched instances become fully operational |

Set |

|

You need to scale-down the EC2 instances at night when there is low traffic using OpsWorks. |

Create Time-based instances for automatic scaling of predictable workload. |

|

Can’t install an agent on on-premises servers but need to collect information for migration |

Deploy the Agentless Discovery Connector VM on your on-premises data center to collect information. |

|

Syntax for CloudFormation with an Amazon ECS cluster with ALB |

Use the |

Monitoring and Logging

| Scenario | Solution |
|---|---|
| Need to centralize audit and collect configuration settings on all regions of multiple accounts | Set up an Aggregator on AWS Config. |
| Consolidate CloudTrail log files from multiple AWS accounts | Create a central S3 bucket with a bucket policy that grants cross-account permission. Set this as the destination bucket on the CloudTrail of the other AWS accounts. |
| Ensure that CloudTrail logs on the S3 bucket are protected and cannot be tampered with. | Enable Log File Validation on CloudTrail settings. |
| Need to collect/investigate application logs from EC2 or on-premises servers | Install the unified CloudWatch Agent to send the logs to CloudWatch Logs for storage and viewing. |
| Need to review logs from running ECS Fargate tasks | Enable |
| Need to run real-time analysis for collected application logs | Send logs to CloudWatch Logs, then create a Lambda subscription filter, Elasticsearch subscription filter, or Kinesis stream filter. |
| Need to be automatically notified if you are reaching the limit of running EC2 instances or limit of Auto Scaling Groups | Track service limits with Trusted Advisor on CloudWatch Alarms using the |
| Need a near real-time dashboard with a feature to detect violations for compliance | Use AWS Config to record all configuration changes and store the data reports in Amazon S3. Use Amazon QuickSight to analyze the dataset. |
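For the CloudTrail consolidation scenario above, the central bucket policy has to let the CloudTrail service check the bucket ACL and write log files under each account's `AWSLogs/<account-id>/` prefix. The following sketch generates such a policy. The function name, the bucket name, and the account IDs are placeholders assumed for illustration.

```python
import json


def central_trail_bucket_policy(bucket, account_ids):
    """Build a bucket policy that lets CloudTrail deliver logs from several accounts.

    `bucket` and `account_ids` are placeholders; use your own values.
    """
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # CloudTrail checks the bucket ACL before delivering logs.
                "Sid": "AWSCloudTrailAclCheck",
                "Effect": "Allow",
                "Principal": {"Service": "cloudtrail.amazonaws.com"},
                "Action": "s3:GetBucketAcl",
                "Resource": bucket_arn,
            },
            {
                # One object prefix per source account.
                "Sid": "AWSCloudTrailWrite",
                "Effect": "Allow",
                "Principal": {"Service": "cloudtrail.amazonaws.com"},
                "Action": "s3:PutObject",
                "Resource": [
                    f"{bucket_arn}/AWSLogs/{account_id}/*"
                    for account_id in account_ids
                ],
                "Condition": {
                    "StringEquals": {"s3:x-amz-acl": "bucket-owner-full-control"}
                },
            },
        ],
    }


if __name__ == "__main__":
    policy = central_trail_bucket_policy(
        "example-central-trail-logs", ["111111111111", "222222222222"]
    )
    print(json.dumps(policy, indent=2))
```

Adding another source account later only means appending its ID to the list and re-applying the policy.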

Security and Compliance

| Scenario | Solution |
|---|---|
| Need to monitor the latest Common Vulnerabilities and Exposures (CVE) of EC2 instances. | Use Amazon Inspector to automate security vulnerability assessments to test the network accessibility of the EC2 instances and the security state of applications that run on the instances. |
| Need to secure the | Store these values as encrypted parameter on SSM Parameter Store. |
| Using default IAM policies for | Attach additional policy with |
| You need to secure an S3 bucket by ensuring that only HTTPS requests are allowed for compliance purposes. | Create an S3 bucket policy that |
| Need to store a secret, database password, or variable, in the most cost-effective solution | Store the variable on SSM Parameter Store and enable encryption |
| Need to generate a secret password and have it rotated automatically at regular intervals | Store the secret on AWS Secrets Manager and enable key rotation. |
| Several team members, with designated roles, need to be granted permission to use AWS resources | Assign AWS managed policies on the IAM accounts such as, |
| Apply latest patches on EC2 and automatically create an AMI | Use Systems Manager automation to execute an Automation Document that installs OS patches and creates a new AMI. |
| Need to have a secure SSH connection to EC2 instances and have a record of all commands executed during the session | Install SSM Agent on EC2 and use SSM Session Manager for the SSH access. Send the session logs to S3 bucket or CloudWatch Logs for auditing and review. |
| Ensure that the managed EC2 instances have the correct application version and patches installed. | Use SSM Inventory to have a visibility of your managed instances and identify their current configurations. |
| Apply custom patch baseline from a custom repository and schedule patches to managed instances | Use SSM Patch Manager to define a custom patch baseline and schedule the application patches using SSM Maintenance Windows |
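One widely documented way to meet the HTTPS-only requirement for an S3 bucket, as in the scenario above, is a `Deny` statement conditioned on the `aws:SecureTransport` global condition key. The sketch below assumes that approach. The function name and bucket name are hypothetical.

```python
import json


def https_only_bucket_policy(bucket):
    """Build a bucket policy that rejects any request not sent over HTTPS.

    `bucket` is a placeholder bucket name.
    """
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # aws:SecureTransport is "false" for plain HTTP requests.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(https_only_bucket_policy("example-compliance-bucket"), indent=2))
```

Because an explicit `Deny` overrides any `Allow`, this statement can sit alongside whatever other statements already grant access to the bucket.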

Incident and Event Response

| Scenario | Solution |
|---|---|
| There are missing assets in File Gateway but they exist directly in the S3 bucket | Run the RefreshCache command for Storage Gateway to refresh the cached inventory of objects for the specified file share. |
| Need to filter a certain event in CloudWatch Logs | Set up a CloudWatch metric filter to search for the particular events. |
| Need to get a notification if somebody deletes files in your S3 bucket | Set up Amazon S3 Event Notifications to get notifications based on specified S3 events on a particular bucket. |
| Need to be notified when an RDS Multi-AZ failover happens | Set up Amazon RDS Event Notifications to detect specific events on RDS. |
| Get a notification if somebody uploaded IAM access keys on any public GitHub repositories | Create an Amazon EventBridge rule for the |
| Get notified on Slack when your EC2 instance is having an AWS-initiated maintenance event | Create an Amazon EventBridge rule for the AWS Health Service to detect EC2 Events. Target a Lambda function that will send a notification to the Slack channel. |
| Get notified of any AWS maintenance or events that may impact your EC2 or RDS instances | Create an Amazon EventBridge rule for detecting any events on AWS Health Service and send a message to an SNS topic or invoke a Lambda function. |
| Monitor scaling events of your Amazon EC2 Auto Scaling Group such as launching or terminating an EC2 instance. | Use Amazon EventBridge Events for monitoring the Auto Scaling Service and monitor the |
| View object-level actions of S3 buckets such as upload or deletion of objects in CloudTrail | Set up Data events on your CloudTrail trail to record object-level API activity on your S3 buckets. |
| Execute a custom action if a specific CodePipeline stage has a | Create an Amazon EventBridge rule to detect the failed state on the CodePipeline service, and set a target to an SNS topic for notification or invoke a Lambda function to perform a custom action. |
| Automatically roll back a deployment in AWS CodeDeploy when the number of healthy instances is lower than the minimum requirement. | On CodeDeploy, create a deployment alarm that is integrated with Amazon CloudWatch. Track the |
| Need to complete QA testing before deploying a new version to the production environment | Add a Manual approval step on AWS CodePipeline, and instruct the QA team to approve the step before the pipeline can resume the deployment. |
| Get notified for OpsWorks auto-healing events | Create an Amazon EventBridge rule for the OpsWorks Service to track the |
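Many of the solutions above rely on an Amazon EventBridge rule with an event pattern. To make the idea concrete, the sketch below shows a pattern for failed CodePipeline stage executions and a deliberately simplified matcher that mimics how a pattern selects events. The matcher only handles exact-value lists and nested objects, so treat it as a study aid rather than a reimplementation of EventBridge. The pipeline and stage names in the sample event are made up.

```python
# Event pattern for failed stage executions in CodePipeline.
PIPELINE_FAILED_PATTERN = {
    "source": ["aws.codepipeline"],
    "detail-type": ["CodePipeline Stage Execution State Change"],
    "detail": {"state": ["FAILED"]},
}

# Hypothetical sample event; the pipeline and stage names are invented.
SAMPLE_EVENT = {
    "source": "aws.codepipeline",
    "detail-type": "CodePipeline Stage Execution State Change",
    "detail": {"pipeline": "demo-pipeline", "stage": "Deploy", "state": "FAILED"},
}


def matches(pattern, event):
    """Simplified pattern matching.

    Every key in the pattern must exist in the event. A list in the pattern
    means "any of these exact values"; a nested dict is matched recursively.
    Fields present in the event but absent from the pattern are ignored.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


if __name__ == "__main__":
    print(matches(PIPELINE_FAILED_PATTERN, SAMPLE_EVENT))
```

The same shape applies to the other services in the table: change `source`, `detail-type`, and the fields under `detail` to select CodeBuild, CodeDeploy, or AWS Health events instead.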

Resilient Cloud Solutions

| Scenario | Solution |
|---|---|
| Need to deploy stacks across multiple AWS accounts and regions | Use AWS CloudFormation StackSets to extend the capability of stacks to create, update, or delete stacks across multiple accounts and AWS Regions with a single operation. |
| Need to ensure that both the application and the database are running in the event that one Availability Zone becomes unavailable. | Deploy your application on multiple Availability Zones and set up your Amazon RDS database to use Multi-AZ Deployments. |
| In the event of an AWS Region outage, you have to make sure that both your application and database will still be running to avoid any service outages. | Create a copy of your deployment on the backup AWS region. Set up an RDS Read-Replica on the backup region. |
| Automatically switch traffic to the backup region when your primary AWS region fails | Set up Route 53 Failover routing policy with health check enabled on your primary region endpoint. |
| Need to ensure the availability of a legacy application running on a single EC2 instance | Set up an Auto Scaling Group with |
| Ensure that every EC2 instance on an Auto Scaling group downloads the latest code first before being attached to a load balancer | Create an Auto Scaling Lifecycle hook and configure the |
| Ensure that all EC2 instances on an Auto Scaling group upload all log files in the S3 bucket before being terminated. | Use the Auto Scaling Lifecycle and configure the |
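The Route 53 failover scenario above pairs a PRIMARY record that carries a health check with a SECONDARY record that takes over when the check fails. Here is a minimal sketch of a change batch for the Route 53 `ChangeResourceRecordSets` API. The helper name, domain, IP addresses (taken from documentation-reserved ranges), and health check ID are all placeholders.

```python
def failover_change_batch(record_name, primary_ip, secondary_ip,
                          primary_health_check_id, ttl=60):
    """Build a ChangeBatch with a PRIMARY and a SECONDARY failover A record."""

    def record(role, ip, health_check_id=None):
        record_set = {
            "Name": record_name,
            "Type": "A",
            # SetIdentifier distinguishes records that share a name and type.
            "SetIdentifier": f"{role.lower()}-region",
            "Failover": role,
            "TTL": ttl,
            "ResourceRecords": [{"Value": ip}],
        }
        if health_check_id:
            record_set["HealthCheckId"] = health_check_id
        return {"Action": "UPSERT", "ResourceRecordSet": record_set}

    return {
        "Comment": "Failover routing between primary and backup regions",
        "Changes": [
            record("PRIMARY", primary_ip, primary_health_check_id),
            record("SECONDARY", secondary_ip),
        ],
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    batch = failover_change_batch(
        "app.example.com.", "203.0.113.10", "198.51.100.10",
        "hypothetical-health-check-id",
    )
    print(batch)
```

With boto3, a batch like this could be submitted through `boto3.client("route53").change_resource_record_sets(HostedZoneId=..., ChangeBatch=batch)`. A short TTL keeps clients from caching the failed primary address for long.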

Validate Your Knowledge

After your review, you should take some practice tests to measure your preparedness for the real exam. AWS offers a sample practice test for free which you can find here. You can also opt to buy the longer AWS sample practice test at aws.training, and use the discount coupon you received from any previously taken certification exams. Be aware though that the sample practice tests do not mimic the difficulty of the real DevOps Pro exam.

Therefore, we highly encourage using other mock exams such as our very own AWS Certified DevOps Engineer Professional Practice Exam course which contains high-quality questions with complete explanations on correct and incorrect answers, visual images and diagrams, YouTube videos as needed, and also contains reference links to official AWS documentation as well as our cheat sheets and study guides. You can also pair our practice exams with our AWS Certified DevOps Engineer Professional Exam Study Guide eBook to further help in your exam preparations.

Sample Practice Test Questions For DOP-C02:

Question 1

An application is hosted in an Auto Scaling group of Amazon EC2 instances with public IP addresses in a public subnet. The instances are configured with a user data script that fetches and installs the required system dependencies of the application from the Internet upon launch. A change was recently introduced to prohibit any Internet access from these instances to improve security, but after its implementation, the instances could no longer get the external dependencies. Upon investigation, all instances are running properly but the hosted application is not starting up completely due to the incomplete installation.

Which of the following is the MOST secure solution to solve this issue and also ensure that the instances do not have public Internet access?

- Download all of the external application dependencies from the public Internet and then store them to an S3 bucket. Set up a VPC endpoint for the S3 bucket and then assign an IAM instance profile to the instances in order to allow them to fetch the required dependencies from the bucket.

- Deploy the Amazon EC2 instances in a private subnet and associate Elastic IP addresses on each of them. Run a custom shell script to disassociate the Elastic IP addresses after the application has been successfully installed and is running properly.

- Use a NAT gateway to disallow any traffic to the VPC which originated from the public Internet. Deploy the Amazon EC2 instances to a private subnet then set the subnet’s route table to use the NAT gateway as its default route.

- Set up a brand new security group for the Amazon EC2 instances. Use a whitelist configuration to only allow outbound traffic to the site where all of the application dependencies are hosted. Delete the security group rule once the installation is complete. Use AWS Config to monitor the compliance.

Question 2

A DevOps engineer has been tasked to implement a reliable solution to maintain all of their Windows and Linux servers, both in AWS and in their on-premises data center. There should be a system that allows them to easily update the operating systems of their servers and apply the core application patches with minimum management overhead. The patches must be consistent across all levels in order to meet the company’s security compliance.

Which of the following is the MOST suitable solution that you should implement?

- Configure and install AWS Systems Manager agent on all of the EC2 instances in your VPC as well as your physical servers on-premises. Use the Systems Manager Patch Manager service and specify the required Systems Manager Resource Groups for your hybrid architecture. Utilize a preconfigured patch baseline and then run scheduled patch updates during maintenance windows.

- Configure and install the AWS OpsWorks agent on all of your EC2 instances in your VPC and your on-premises servers. Set up an OpsWorks stack with separate layers for each OS then fetch a recipe from the Chef supermarket site (supermarket.chef.io) to automate the execution of the patch commands for each layer during maintenance windows.

- Develop a custom Python script to install the latest OS patches on the Linux servers. Set up a scheduled job to automatically run this script using the cron scheduler on Linux servers. Enable Windows Update in order to automatically patch Windows servers or set up a scheduled task using Windows Task Scheduler to periodically run the Python script.

- Store the login credentials of each Linux and Windows server on the AWS Systems Manager Parameter Store. Use Systems Manager Resource Groups to set up one group for your Linux servers and another one for your Windows servers. Remotely log in, run, and deploy the patch updates to all of your servers using the credentials stored in the Systems Manager Parameter Store and through the use of the Systems Manager Run Command.

Click here for more AWS Certified DevOps Engineer Professional practice exam questions.


To get more in-depth insights on the hardcore concepts that you should know to pass the DevOps Pro exam, we do highly recommend that you also get our DevOps Engineer Professional Study Guide eBook.

At this point, you should already be very knowledgeable on the following domains:

- CI/CD, Application Development and Automation

- Configuration Management and Infrastructure as Code

- Security, Monitoring and Logging

- Incident Mitigation and Event Response

- Implementing High Availability, Fault Tolerance, and Disaster Recovery

Additional Training Materials For DOP-C02

There are a few AWS Certified DevOps Engineer – Professional video courses that you can check out as well, which can complement your exam preparations:

- AWS Certified DevOps Engineer – Professional by Adrian Cantrill

- Courses from the AWS Skill Builder site, which include:

  - Standard Exam Prep Plan – only free resources; and

  - Enhanced Exam Prep Plan – includes additional content for AWS Skill Builder subscribers, such as AWS Builder Labs, game-based learning, Official Pretests, and more exam-style questions.

As an AWS DevOps practitioner, you shoulder a lot of roles and responsibilities. Many professionals in the industry have attained proficiency through continuous practice and producing results of value. Therefore, you should properly review all the concepts and details that you need to learn so that you can also achieve what others have achieved.

The day before your exam, be sure to double-check the schedule, location, and items to bring for your exam. During the exam itself, you have 180 minutes to answer all questions and recheck your answers. Be sure to manage your time wisely. It will also be very beneficial for you to review your notes before you go in to refresh your memory. The AWS DevOps Pro certification is very tough to pass, and the choices for each question can be very misleading if you do not read them carefully. Be sure to understand what is being asked in the questions, and what options are offered to you. With that, we wish you all the best in your exam!