Agentic AI is changing how we think about artificial intelligence. Instead of waiting for prompts, these systems can plan tasks, make decisions, and act on their own. They behave more like digital teammates than static tools, completing multi-step work and coordinating across apps, data, and even other agents, all without constant human supervision.

But with this new power comes new responsibility. When AI agents can access tools, call APIs, store memory, and influence other agents, the risks are no longer limited to “bad prompts” or one-time outputs. Autonomy introduces new attack surfaces: reasoning can be manipulated, memory can be poisoned, tools can be misused, and decisions can drift without anyone noticing right away.

That’s why agentic AI security matters more than ever. Instead of protecting only the model, we now have to secure the entire workflow: how agents plan, act, observe, reflect, communicate, and update memory. As organizations adopt agents at scale, securing these systems becomes essential, not only to prevent misuse but also to ensure that autonomous AI is trustworthy, safe, and responsible.

What Makes Agentic AI Different?

Agentic AI can reason, plan, and take actions. It isn’t just a fancy chatbot; it’s more like a digital worker. At its core, an “agent” can understand a goal, break it down into workable steps, decide how to proceed, and take actions to accomplish those steps. This combination of planning and action sets agents apart from traditional software and simple AI tools.

Unlike a standalone large language model (LLM), agentic AI interacts with APIs, tools, databases, and user data. A traditional LLM only responds with text. An agentic system can call external APIs, invoke tools, query or update databases, and work with user or internal data, all under its own control flow. This enables real-time information retrieval, workflow automation, and multi-system coordination.
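To make the plan-and-act loop more concrete, here is a minimal Python sketch. It assumes a hard-coded `plan()` standing in for the LLM that would normally decompose the goal, and the tools `get_weather` and `send_message` are hypothetical stubs rather than real APIs. The point is the control flow: the agent, not the user, decides which tool runs next and keeps what it observes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# Hypothetical stub tools; a real agent would wrap external APIs or databases.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"


def send_message(to: str, text: str) -> str:
    return f"sent to {to}: {text}"


@dataclass
class Step:
    tool: str   # name of the tool to invoke
    args: dict  # keyword arguments for that tool


@dataclass
class Agent:
    tools: Dict[str, Callable[..., str]]
    memory: List[str] = field(default_factory=list)  # observations carried across steps

    def plan(self, goal: str) -> List[Step]:
        # Stand-in for an LLM planner: returns a fixed decomposition of the goal.
        return [
            Step("get_weather", {"city": "Paris"}),
            Step("send_message", {"to": "team", "text": "Paris weather checked"}),
        ]

    def run(self, goal: str) -> List[str]:
        for step in self.plan(goal):                      # plan
            result = self.tools[step.tool](**step.args)   # act: call the chosen tool
            self.memory.append(result)                    # observe: store the outcome
        return self.memory


agent = Agent(tools={"get_weather": get_weather, "send_message": send_message})
print(agent.run("Tell the team what the weather is in Paris"))
```

Every arrow in that loop (which tool, which arguments, what gets remembered) is a decision the agent makes on its own, which is exactly where the security risks below come from.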

Key capabilities that introduce security risks

- Autonomous execution & agency: Agents decide which actions to take, which tools to call, and when to act.

- Persistent memory: Short-term or long-term memory allows agents to recall interactions and build context, but it can be corrupted or misused.

- Tool orchestration: Multiple tools can be called and combined, expanding the attack surface.

- External connectivity: APIs, databases, and other systems become potential entry points.

- Self-directed behavior: Agents can adapt, replan, and make context-driven decisions, which makes behavior harder to predict.

Therefore, security becomes more complex. Because reasoning, memory, tool use, connectivity, and autonomy are combined, security must cover every stage: decision-making, memory, tools, inter-agent communications, and monitoring. Simple input/output checks are not enough.
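One way to push security past input/output checks is to route every tool call through a guard that enforces an allowlist, validates arguments, and writes an audit log. The sketch below assumes hypothetical stub tools (`read_file`, `delete_file`) and a `/data/` path rule chosen purely for illustration; it is not the API of any particular agent framework.

```python
import logging
from typing import Any, Callable, Dict, Optional, Set

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
audit = logging.getLogger("agent.audit")


class ToolCallDenied(Exception):
    """Raised when a tool call fails the allowlist or argument checks."""


class GuardedToolbox:
    def __init__(
        self,
        tools: Dict[str, Callable[..., Any]],
        allowed: Set[str],
        validators: Optional[Dict[str, Callable[[dict], bool]]] = None,
    ) -> None:
        self.tools = tools
        self.allowed = allowed              # least privilege: only these tools may run
        self.validators = validators or {}  # per-tool argument checks

    def call(self, tool_name: str, **kwargs: Any) -> Any:
        if tool_name not in self.allowed:
            audit.warning("DENY tool=%s args=%s (not allowlisted)", tool_name, kwargs)
            raise ToolCallDenied(f"tool {tool_name!r} is not allowlisted")
        check = self.validators.get(tool_name)
        if check is not None and not check(kwargs):
            audit.warning("DENY tool=%s args=%s (invalid arguments)", tool_name, kwargs)
            raise ToolCallDenied(f"arguments rejected for {tool_name!r}")
        audit.info("CALL tool=%s args=%s", tool_name, kwargs)
        result = self.tools[tool_name](**kwargs)
        audit.info("RESULT tool=%s -> %r", tool_name, result)
        return result


# Hypothetical stub tools (no real file I/O happens here).
def read_file(path: str) -> str:
    return f"<contents of {path}>"


def delete_file(path: str) -> str:
    return f"deleted {path}"


box = GuardedToolbox(
    tools={"read_file": read_file, "delete_file": delete_file},
    allowed={"read_file"},  # delete_file is registered but never permitted
    validators={"read_file": lambda a: str(a.get("path", "")).startswith("/data/")},
)
print(box.call("read_file", path="/data/report.txt"))
```

Keeping the guard outside the agent's reasoning means a manipulated plan still cannot reach a tool that was never allowlisted, and every attempt, allowed or denied, leaves a log entry for monitoring.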

Introducing the Agentic AI Security Framework

Security for AI is not “one size fits all.” The risk and control requirements depend on how much control an AI system has over its model, the data it handles, the actions it takes, and the broader system it lives in.

Two key dimensions determine the framework:

- Agency: What the system is permitted to do: read, suggest, modify data, call APIs, or change the environment.

- Autonomy: How independently it acts, whether it waits for human approval or acts on its own.

The Agentic AI Security Scoping Matrix

| Scope | When it’s used | Control over model/data/actions/system |
|---|---|---|
| Scope 1: No Agency | Human-driven workflows; AI only helps analyze or suggest (read-only). | Minimal: agent can’t change data or take actions. |
| Scope 2: Prescribed Agency | Agent can perform actions, but only after a human approves them (human-in-the-loop). | Controlled: agent has permission but needs human consent before changing data or systems. |
| Scope 3: Supervised Agency | A human triggers the task, but the agent executes autonomously (tool use, external calls, environment changes) within defined boundaries. | Higher: agent can act on its own, access tools and data, and modify the environment, but within limits. |
| Scope 4: Full Agency | Fully autonomous agents that can self-initiate tasks (based on triggers or patterns) and operate across systems with minimal human oversight. | Maximum: broad control over model, data, actions, and system; acts independently. |

In simple terms, the more freedom an agent has, the more security you must apply: greater agency and autonomy increase both responsibility and risk.
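The scoping matrix can also be read as a policy that gates each action. The sketch below encodes the four scopes and returns `allow`, `ask_human`, or `deny` for a proposed action. The read-only action set and the `human_initiated` flag are assumptions made for illustration, not part of the framework itself.

```python
from enum import Enum
from typing import Literal

Decision = Literal["allow", "ask_human", "deny"]


class Scope(Enum):
    NO_AGENCY = 1
    PRESCRIBED_AGENCY = 2
    SUPERVISED_AGENCY = 3
    FULL_AGENCY = 4


# Hypothetical action taxonomy: anything outside this set changes data or systems.
READ_ONLY_ACTIONS = {"read", "analyze", "suggest"}


def decide(scope: Scope, action: str, human_initiated: bool) -> Decision:
    """Gate one agent action according to the scope the agent was deployed in."""
    if action in READ_ONLY_ACTIONS:
        return "allow"  # every scope may read and suggest
    if scope is Scope.NO_AGENCY:
        return "deny"  # Scope 1: cannot change data or take actions
    if scope is Scope.PRESCRIBED_AGENCY:
        return "ask_human"  # Scope 2: a human must approve each change
    if scope is Scope.SUPERVISED_AGENCY:
        # Scope 3: acts autonomously, but only on tasks a human triggered
        return "allow" if human_initiated else "deny"
    return "allow"  # Scope 4: may self-initiate; relies on boundaries and monitoring


for s in Scope:
    print(s.name, decide(s, "send_email", human_initiated=False))
```

Notice that the Scope 4 branch has no human gate at all, which is why the controls for that scope have to come from elsewhere: allowlists, limits, and monitoring.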

Why These Scopes Matter for Security

As you move from lower to higher agency scopes, risks and responsibilities increase. Each scope gives the agent more control, more autonomy, and more access, which means security must scale accordingly.

- More control = more responsibility: The agent can modify systems and trigger workflows.

- More data access = stronger security required: Sensitive data demands tighter access control, encryption, and monitoring.

- More customization = more risks to manage: Tool use, API integrations, and autonomous actions expand attack surfaces.

Real-World Scenarios

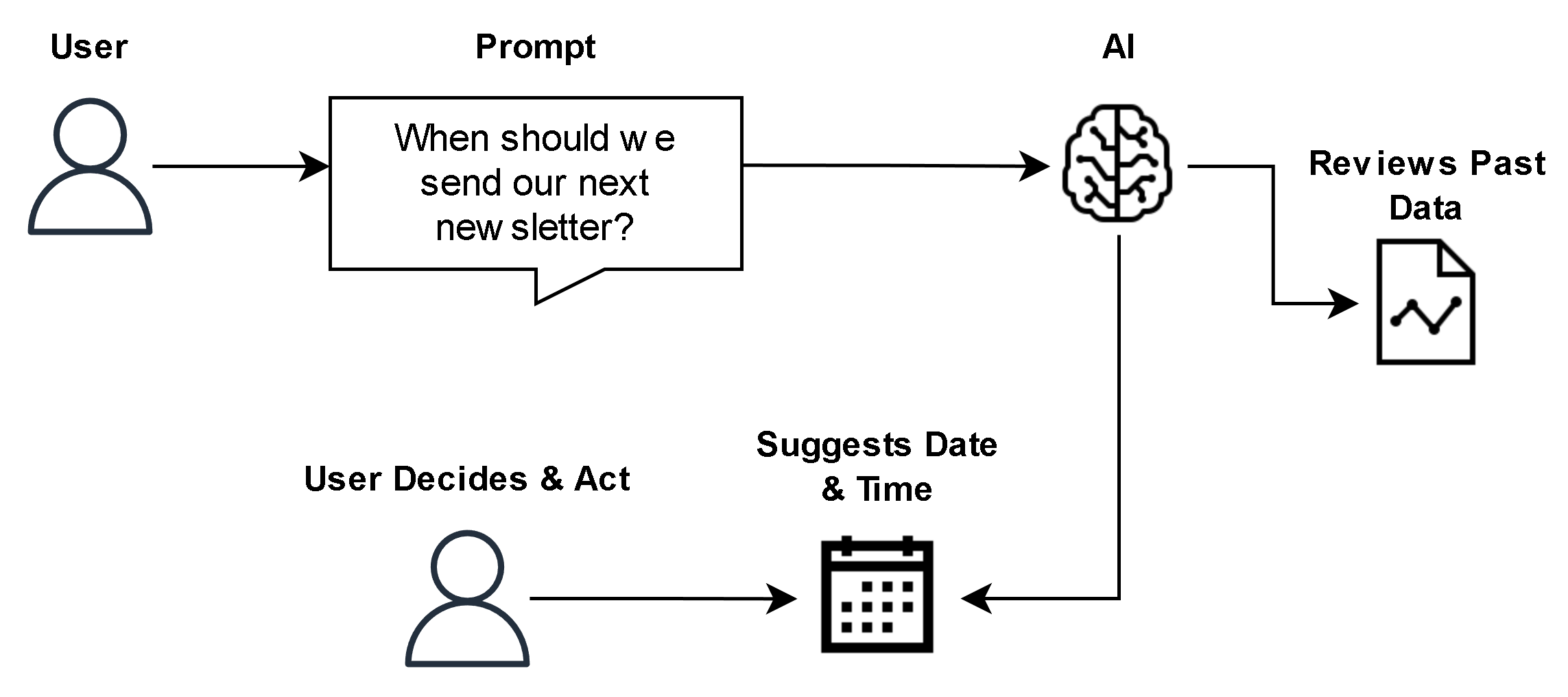

- Scope 1: No Agency

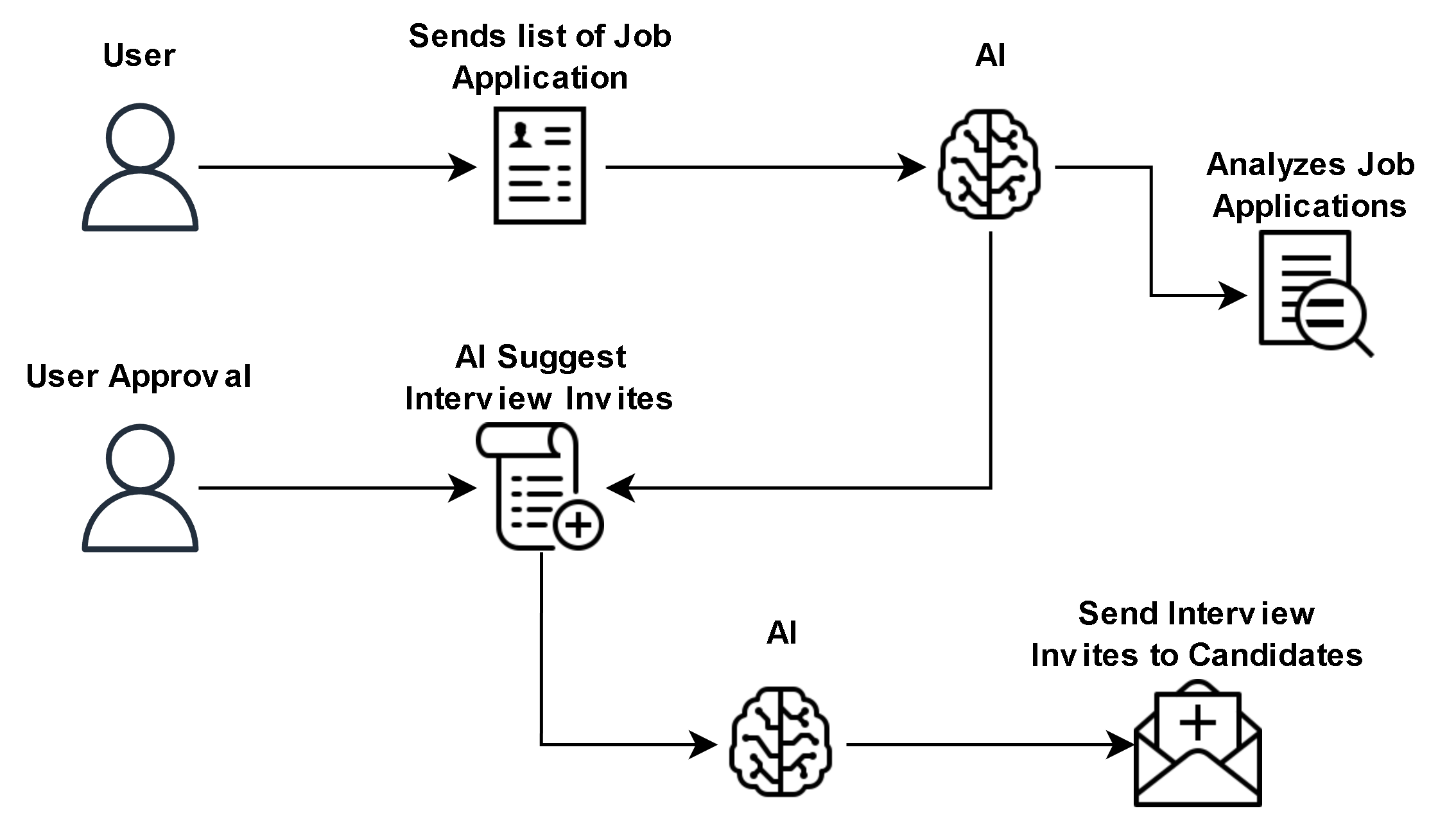

A marketing team uses an AI agent that only suggests email schedules. For example, they ask the agent: “When should we send our next newsletter?” The agent analyzes past open rates and engagement data and suggests the best times, but it does not send the emails itself. A human still reviews the suggestion and sends the newsletter manually. This keeps control and risk low.

- Scope 2: Prescribed Agency

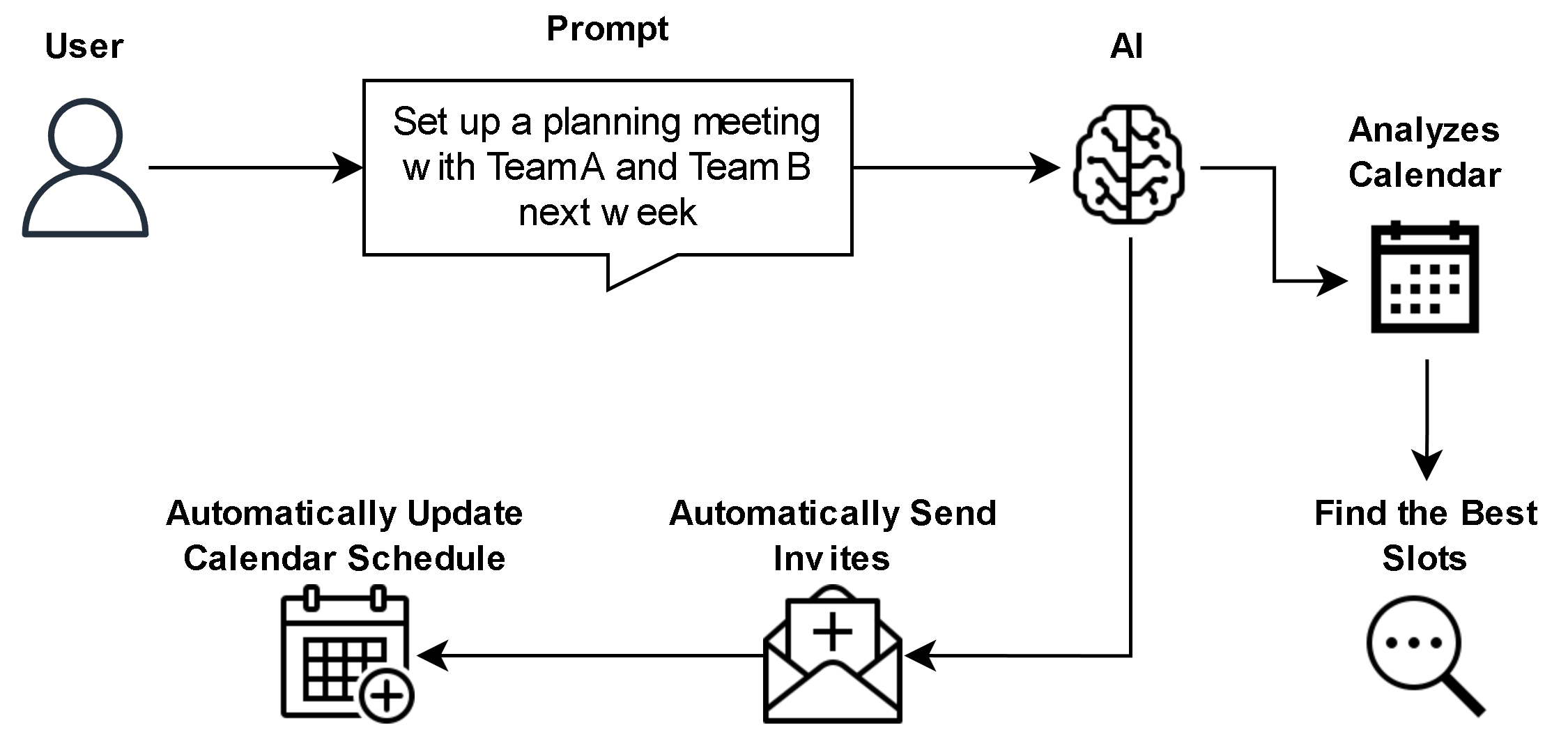

A company’s HR department uses an AI agent to analyze job applications and shortlist candidates. The agent proposes which applicants should be invited for interviews, but the system sends interview invitations only after a human HR officer approves the list. This gains efficiency through AI analysis while preserving human oversight for sensitive actions.

- Scope 3: Supervised Agency

A scheduling assistant agent books meetings automatically: once a human defines the objective (e.g., “Set up a planning meeting with Team A and Team B next week”), the agent checks calendars, finds the best slot, sends invites, and updates calendars, all without asking for approval each time. This saves time and reduces manual steps while the agent operates within defined boundaries.

- Scope 4: Full Agency

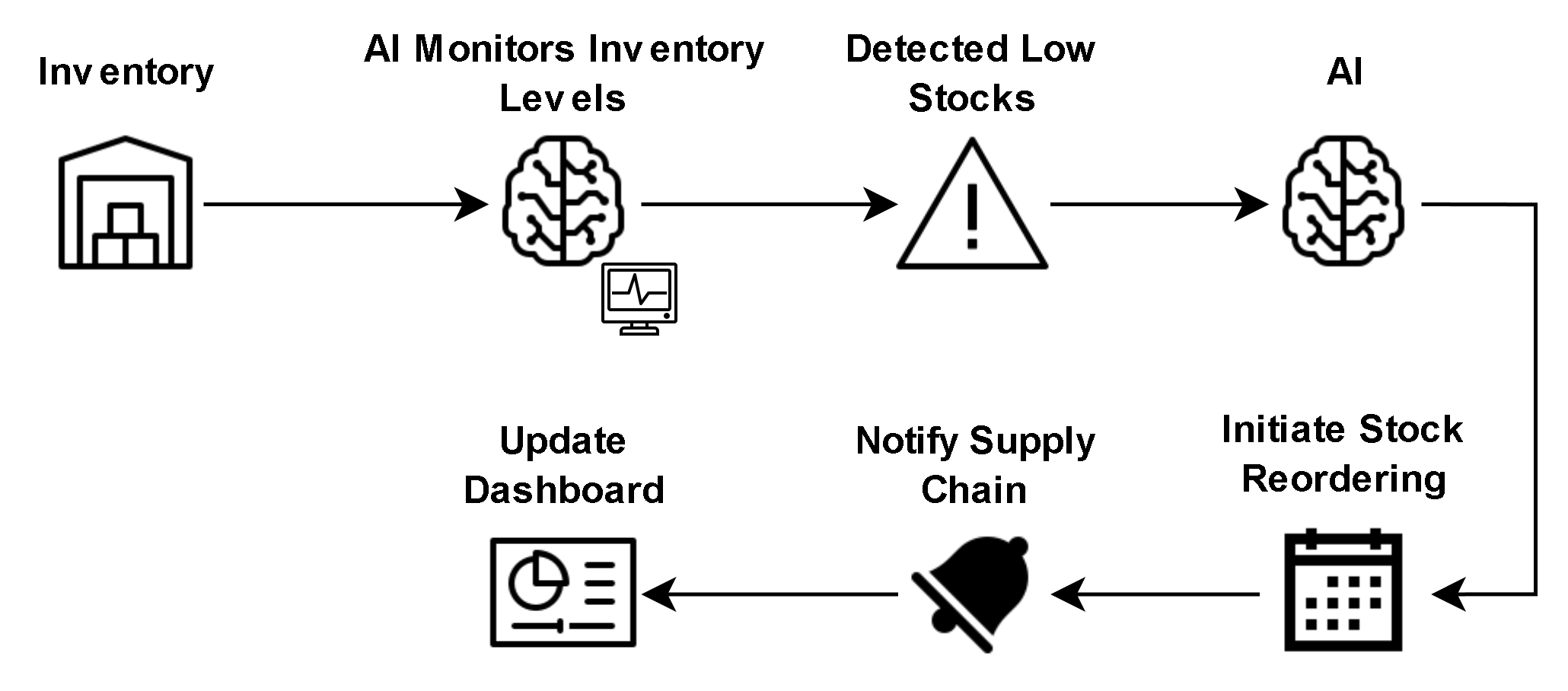

A fully autonomous operations agent monitors internal systems in real time (e.g., server load, sales data, inventory levels). When predefined conditions are met (e.g., “inventory below threshold” or “sales spike detected”), the agent self-initiates workflows across multiple systems: it reorders stock, notifies the supply chain, updates dashboards, and sends alerts, all without human intervention. This can greatly improve responsiveness and efficiency, but it also carries the highest risk.
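For a Scope 4 agent like this, the safeguards have to live in code rather than in a human approval step. The following sketch assumes a hypothetical `InventoryAgent` that reorders stock when levels drop below a threshold, with two illustrative guardrails: a per-order quantity cap and a per-run order limit that halts and records an escalation message. Notifying the supply chain and updating dashboards are left out.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Guardrails:
    max_units_per_order: int = 500  # cap on any single autonomous reorder
    max_orders_per_run: int = 3     # circuit breaker against runaway loops


@dataclass
class InventoryAgent:
    reorder_threshold: int  # trigger: stock below this level
    target_level: int       # restock up to this level (subject to guardrails)
    guardrails: Guardrails = field(default_factory=Guardrails)
    audit_log: List[str] = field(default_factory=list)

    def on_inventory_update(self, levels: Dict[str, int]) -> List[Tuple[str, int]]:
        """Self-initiate reorders for every SKU that fell below the threshold."""
        orders: List[Tuple[str, int]] = []
        for sku, qty in sorted(levels.items()):
            if qty >= self.reorder_threshold:
                continue  # trigger condition not met
            if len(orders) >= self.guardrails.max_orders_per_run:
                self.audit_log.append(f"HALT before {sku}: order limit reached, escalate to a human")
                break
            units = min(self.target_level - qty, self.guardrails.max_units_per_order)
            orders.append((sku, units))
            self.audit_log.append(f"REORDER {sku}: {units} units (stock was {qty})")
        return orders


ops = InventoryAgent(reorder_threshold=100, target_level=1000)
print(ops.on_inventory_update({"A": 50, "B": 200, "C": 10}))
print(ops.audit_log)
```

The caps do not make the agent's decisions correct; they bound the damage if a trigger fires on bad data, and the audit log gives humans something to review after the fact.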

How to Choose Your Scope

Choosing the right agency scope for your AI agent is a critical step. The goal is to balance efficiency and autonomy with safety and security. Not every task requires full autonomy. Sometimes, a simple suggestion-based agent is enough.

Ask the Right Questions

To decide which scope fits your use case, consider the following:

- What are we building?

Define the purpose of the agent. Is it just giving suggestions, performing repetitive tasks, or making decisions on its own?

- Who controls the agent’s actions?

Will a human review every step, approve actions, or is the agent expected to act independently?

- Who controls the data?

Determine if the agent can access, modify, or store sensitive information. The more access it has, the more controls you need.

- What level of autonomy is safe for our use case?

Some workflows can tolerate full autonomy; others may require human supervision to prevent mistakes or misuse.

- What risks do we accept, and how will we mitigate them?

Identify potential threats such as data leaks, tool misuse, memory corruption, or cascading failures. Then plan safeguards like logging, permission limits, monitoring, and human checkpoints.
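The questions above can be turned into a rough checklist. The sketch below is one hypothetical mapping from yes/no answers to a scope and a baseline set of safeguards drawn from this article; the field names and rules are assumptions, and a real assessment would weigh far more context.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class UseCase:
    changes_data_or_systems: bool     # "What are we building?": more than suggestions?
    human_approves_each_action: bool  # "Who controls the agent's actions?"
    self_initiated: bool              # does it start work from triggers with no human request?
    handles_sensitive_data: bool      # "Who controls the data?"


def recommend(uc: UseCase) -> Tuple[int, List[str]]:
    """Map questionnaire answers to a scope (1-4) plus baseline safeguards."""
    if not uc.changes_data_or_systems:
        scope = 1
    elif uc.human_approves_each_action:
        scope = 2
    elif not uc.self_initiated:
        scope = 3
    else:
        scope = 4

    controls = ["logging"]  # every scope keeps an audit trail
    if scope >= 2:
        controls.append("permission limits")
    if scope == 2:
        controls.append("human checkpoints")
    if scope >= 3:
        controls.append("monitoring")
    if uc.handles_sensitive_data:
        controls.append("encryption and access control")
    return scope, controls


# The HR shortlisting example: changes data, human approves, sensitive data.
hr_screening = UseCase(True, True, False, True)
print(recommend(hr_screening))
```

The ordering of the checks mirrors the questions: first whether the agent acts at all, then who approves, then who initiates, with data sensitivity adding controls at any scope.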
