Last updated on February 10, 2026

For decades, audio post-production followed a rigid, linear path: record, edit, mix, and master. It was often the “final hurdle” in a project—a phase where creators spent hours hunting through generic libraries for a door slam that didn’t sound like a cartoon or a music track that didn’t feel like corporate elevator background. But as we move through 2026, the silence between the frames is being filled by something far more intelligent. We are witnessing a fundamental shift from static assets to generative intelligence. Audio is no longer just a “layer” added on top of a video; it has become a reactive, living component of the storytelling process. In this new landscape, sound doesn’t just accompany the visual—it anticipates it. We are moving from the era of “finding the right sound” to “designing the right atmosphere” through AI-driven synthesis. “Film is 50% visual and 50% sound,” David Lynch famously remarked. For decades, the “audio half” was a labor-intensive process of manual layering and surgical editing. In 2026, this equation has been supercharged. The barrier between “finding a track” and “creating a world” has dissolved. Modern Generative Audio Engines allow creators to synthesize custom scores and complex soundscapes in real-time. This isn’t just about speed; it’s about a new level of creative cohesion where the sound adapts to the story as fluidly as a shadow follows its subject. We have moved into the era of Scene-Aware Audio. AI agents, powered by benchmarks like FoleyBench, can now “watch” a video clip and identify materials—glass, gravel, silk, or steel. The Tech: Tools automatically suggest Foley (footsteps, wind, door creaks) that matches the precise textures on screen. The Result: A car door slamming doesn’t just sound like a car door; it sounds like that specific 1967 Mustang door, perfectly synchronized with the speed of the actor’s hand. Global distribution no longer requires the logistical nightmare of international ADR (Automated Dialogue Replacement). Emotional Persistence: High-end tools like Respeecher and ElevenLabs can take a “Hero Voice” and translate it into dozens of languages. The Innovation: Unlike the robotic dubs of the past, 2026 AI maintains the exact emotional inflection, micro-pauses, and timbre of the original performance, ensuring the “soul” of the actor’s work isn’t lost in translation. Traditional editing often forces a creator to cut their video to match a 3-minute music track. Generative workstations like Suno Studio and Udio have flipped this workflow. Modular Scores: Editors can now generate scores that are “beat-aware.” If a scene needs to be ten seconds longer, the AI intelligently extends the melody and bridge without a jarring loop. Interactive Stems: Music is increasingly delivered as “live stems” that can shift from a calm ambient mood to high-tension percussion based on the narrative beats of the edit. Despite the raw power of AI, a machine still cannot feel the “goosebumps” of a perfect crescendo. While AI can generate a technically flawless melody, it can often feel clinical or “mathematically perfect.” The role of the Audio Engineer in 2026 has evolved from technical operator to Emotional Tuner. Their value lies in psychological mixing—ensuring the AI’s output aligns with the subtext of a scene. They manage the “uncanny valley” of sound, ad

The Invisible Half of Multimedia

The Pillars of 2026 Audio Production

1. Predictive Sound Design & “Vision-to-Audio” (V2A)

2. Vocal Resynthesizing & “Hero Voice” Cloning

3. Infinite, Adaptive Soundtracks

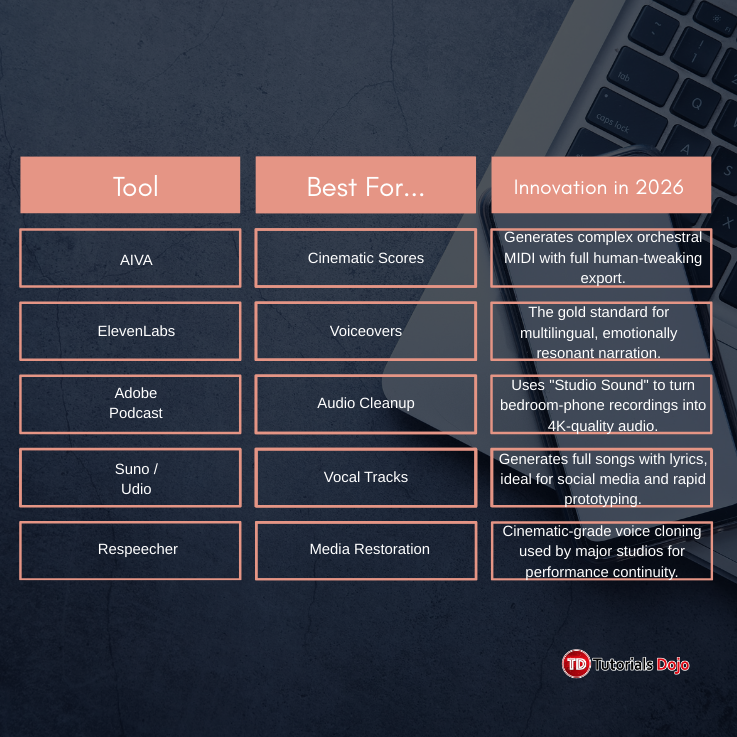

The 2026 Audio Tech Stack

The Human Touch: From Engineer to “Emotional Tuner”

References: